CPC Definition - Subclass G06T

This place covers:

- Processor architectures or memory management for general purpose image data processing

- Geometric image transformations

- Image enhancement or restoration

- Image analysis

- Image coding

- Two-dimensional image generation

- Animation

- Three-dimensional image rendering

- Three-dimensional modelling for computer graphics

- Manipulating three-dimensional models or images for computer graphics

G06T is the functional place for image data processing or generation. Image data processing or generation specially adapted for a particular application is classified in the relevant application subclass. Documents which merely mention the general use of image processing or generation, without detailing the underlying processing, are classified in the application place. Where the essential technical characteristics of an invention relate both to the image processing or generation and to its particular use or special adaptation, classification is made in both G06T and the application place.

Attention is drawn to the following places, which may be of interest for search:

- Apparatus for radiation diagnosis

- Aspects of games using an electronically generated display having two or more dimensions

- Measuring, by optical means, length, thickness or similar linear dimensions, angles, areas, irregularities of surfaces or contours

- Reading or recognising printed or written characters or recognising patterns, e.g. fingerprints

- Coding, decoding or code conversion

- Pictorial communication, television systems

Symbols under G06T 1/00 - G06T 19/20 may only be allocated as invention information.

Whenever possible, additional information should be classified using one or more of the Indexing Codes from the range of G06T.

The indexing codes under G06T 2200/00 - G06T 2219/2024 may only be allocated to documents to which a symbol under G06T 1/00 - G06T 19/20 is allocated as invention information as well.

The following list of symbols from the series G06T 2200/00 is for allocation to documents within the whole range of G06T except G06T 9/00:

- Indexing scheme for image data processing or generation, in general - Not used for classification

- involving 3D image data - processing of 3D image data, i.e. voxels; relevant for G06T 3/00, G06T 5/00, G06T 7/00 or G06T 11/00

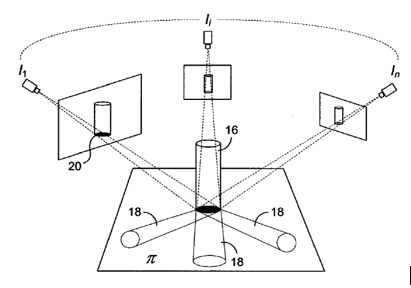

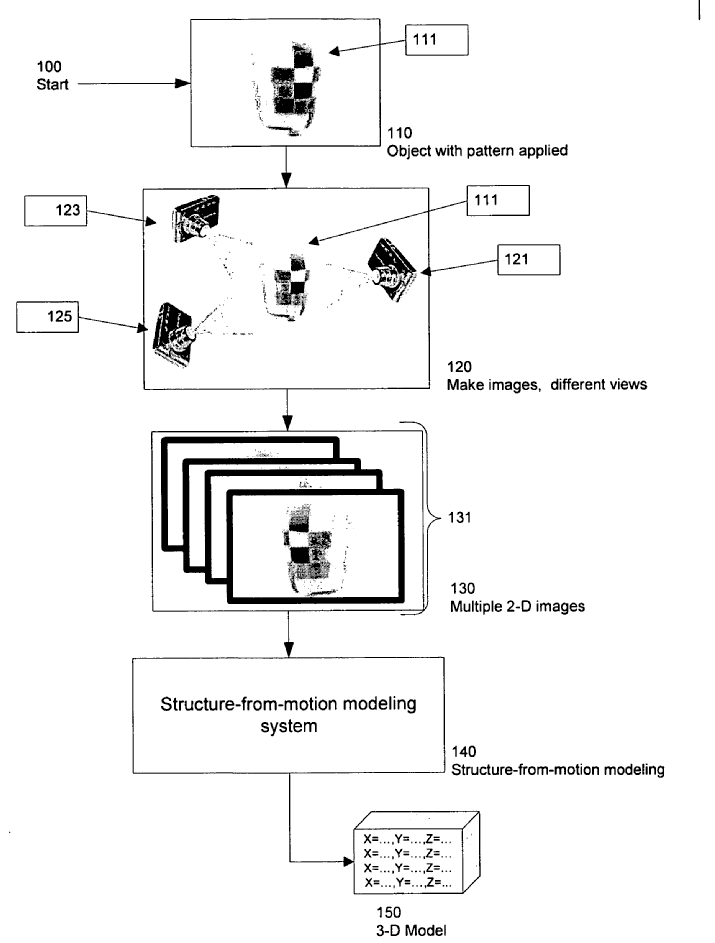

- involving all processing steps from image acquisition to 3D model generation - complete systems from acquisition to modelling

- involving antialiasing - dejagging, staircase effect

- involving adaptation to the client's capabilities - adapting the colour or resolution of an image to the client's capabilities

- involving computational photography

- involving graphical user interfaces [GUIs]

- involving image processing hardware - relevant for groups not directly related to hardware; not used in G06T 1/20, G06T 1/60, G06T 15/005

- involving image mosaicing - image mosaicing, panoramic images

- Review paper; Tutorial; Survey - basic documents describing the state of the art

There are further series of symbols for G06T whose use is reserved to particular maingroups or ranges of maingroups and whose full list and description are given in the FCRs of the respective maingroups:

G06T 2201/00 for G06T 1/0021 only

G06T 2207/00 for G06T 5/00 and G06T 7/00 only

G06T 2219/00 for G06T 9/00 only

G06T 2210/00 for G06T 11/00 - G06T 19/00 only; see list below

G06T 2211/40 for G06T 11/003 only

G06T 2213/00 for G06T 13/00 only

G06T 2215/00 for G06T 15/00 only

G06T 2219/00 for G06T 19/00 only

G06T 2219/20 for G06T 19/20 only

Symbols from the series G06T 2210/00 for allocation in the range of G06T 11/00 - G06T 19/00 only:

- Indexing scheme for image generation or computer graphics - Not used for classification

- architectural design, interior design - interior/garden/facade design, architectural layout plans

- bandwidth reduction

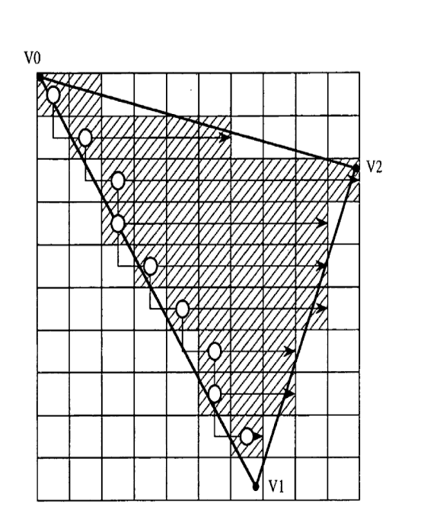

- bounding box - convex hull for polygons or 3D objects

- cloth - animation, rendering or modeling of cloth/garment/textile, virtual dressing rooms

- collision detection, intersection - intersection/collision detection of 3D objects

- cropping - cropping of image borders

- fluid dynamics - animation, rendering or modelling of fluid flows

- force feedback - virtual force

- image data format - conversion between different image or graphics formats

- level of detail - level of detail, also for textures (e.g. mip-mapping)

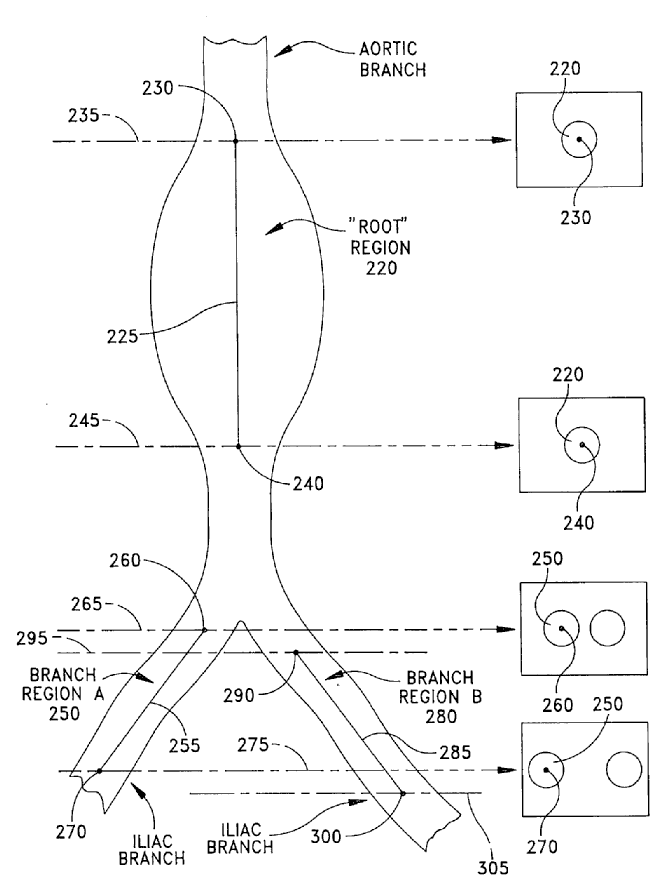

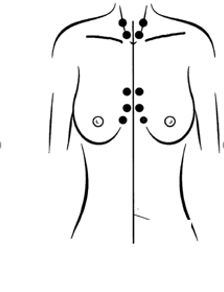

- medical - medical applications concerning e.g. heart, lung, brain, tumors

- morphing - morphing or warping

- parallel processing

- particle system, point based geometry or rendering - rendering and animation of particle systems (e.g. fireworks, dust, clouds), point clouds, splatting

- scene description - scene graphs, scene description languages, e.g. VRML

- semi-transparency - screen-door effect, change of transparency values

- weathering - weathering effects, e.g. aging, corrosion

In this place, the following terms or expressions are used with the meaning indicated:

| Term | Meaning |
|---|---|
| 2D | Two-dimensional |
| 3D | Three-dimensional |
| 4D | Four-dimensional, 3D in time |
| CAD | Computer-Aided Design (in computer graphics); Computer-Aided Detection (in image analysis) |
| MR | Magnetic Resonance (in image analysis); Mixed Reality (in computer graphics) |
| Stereo | Treatment of the images of exactly two cameras in a pairwise manner |

In patent documents, the following abbreviations are often used:

| Abbreviation | Meaning |
|---|---|
| ANN | Artificial Neural Network |
| AR | Augmented Reality |
| CT | Computed Tomography |
| DCE-MRI | Dynamic Contrast-Enhanced Magnetic Resonance Imaging |
| DCT | Discrete Cosine Transform |
| DRR | Digitally Reconstructed Radiograph |
| DTS | Digital Tomosynthesis |
| GUI | Graphical User Interface |
| IC | Integrated Circuit |
| ICP | Iterative Closest Point |
| LCD | Liquid Crystal Display |
| MRF | Markov Random Field |
| MRI | Magnetic Resonance Imaging |
| PCB | Printed Circuit Board |
| RGB | Red, Green, Blue |
| ROI | Region of Interest |
| SLAM | Simultaneous Localisation And Mapping |
| SNR | Signal-to-Noise Ratio |
| SPECT | Single Photon Emission Computed Tomography |
| US | Ultrasound |
| VOI | Volume of Interest |
| VR | Virtual Reality |

This place covers:

Capturing or storing images from or to memory

This place does not cover:

- Scanning, transmission or reproduction of documents or the like

- Television cameras

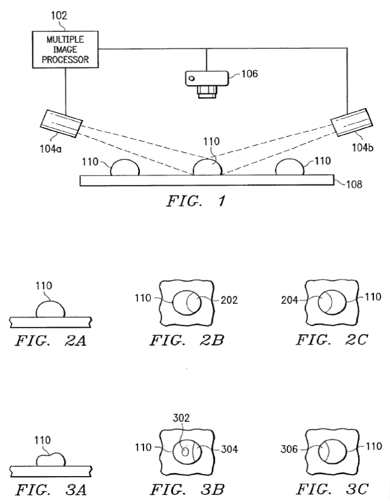

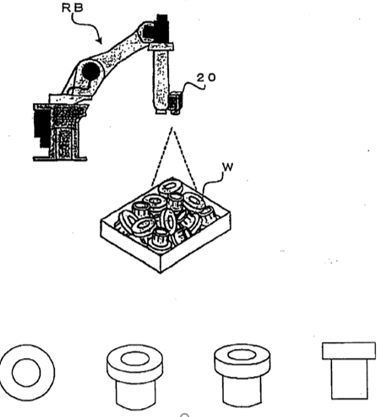

This place covers:

- Machine vision or tool control

- Image feedback for robot navigation or walking

- 3D vision systems.

This place does not cover:

- Vision controlled manipulators

- Accessories fitted to manipulators including video camera means

- Control of position, course, altitude or attitude of land, water, air or space vehicles using means capturing signals occurring naturally from the environment for determining position or orientation

This place covers:

- Image watermarking in general.

- Applications or software packages for watermarking.

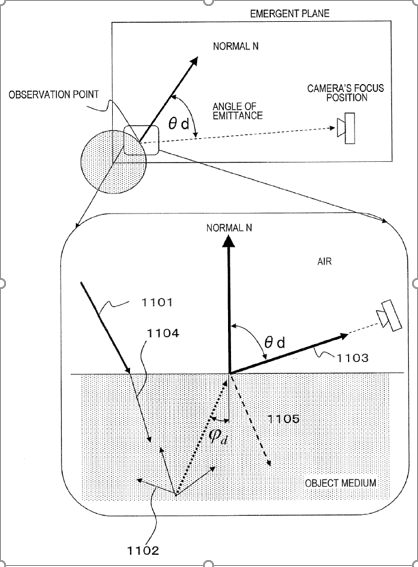

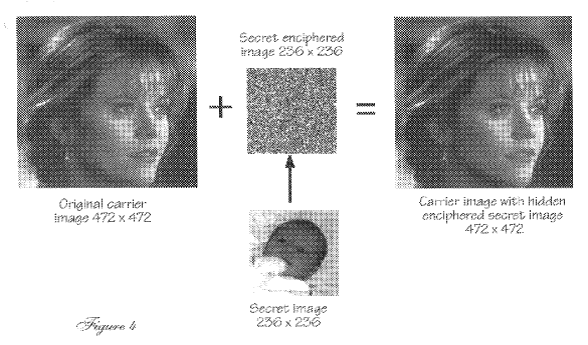

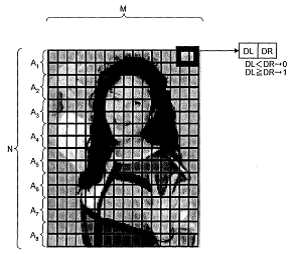

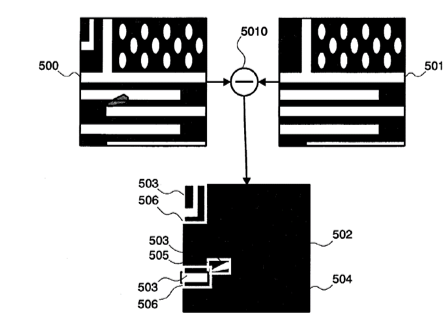

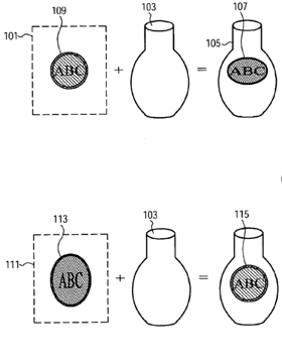

Illustrative example - Hiding a digital image (message) in another digital image (carrier) (US6094483 - UNIV NEW YORK STATE RES FOUND):

This place does not cover:

- Testing specially adapted to determine the identity or genuineness of paper currency or similar valuable papers

- Audio watermarking

- Arrangements for secret or secure communication using encryption of data

- Arrangements for secret or secure communication using electronic signatures

Attention is drawn to the following places, which may be of interest for search:

- Security arrangements for protecting computers or computer systems against unauthorised activity

- Circuits for prevention of unauthorised reproduction or copying

- Scanning, transmission or reproduction of documents involving image watermarking

This place covers:

- Adaptations based on Human Visual System [HVS].

- Perceptual masking.

- Preservation of image quality; Distortion minimization.

- Methods to measure quality of watermarked images.

- Measuring the balance between quality and robustness, i.e. fixing robustness while adapting quality, or vice versa.

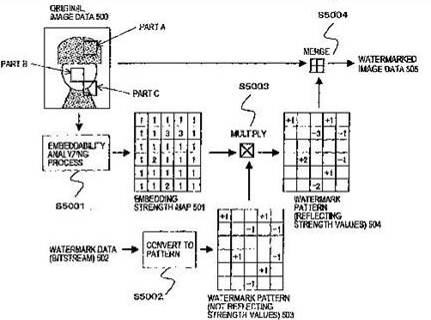

Illustrative example - Changing a portion of an image based on an embedding strength map (EP1170938 - HITACHI LTD):

This place covers:

- Embedding without modifying the size of input.

- Embedding or modifying the watermark directly in a coded image or video stream, without decoding first.

This place covers:

- Birthday attacks.

- Forgery.

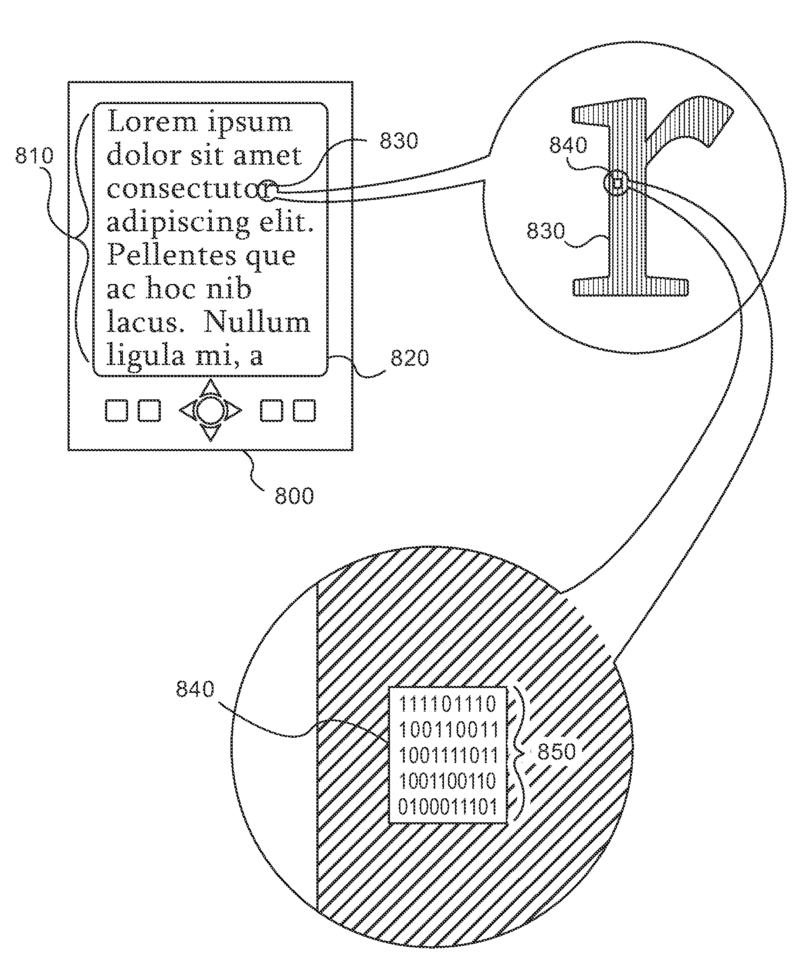

Illustrative example - Changing pixels at selected positions according to a replacement table (WO2011021114 - NDS LIMITED):

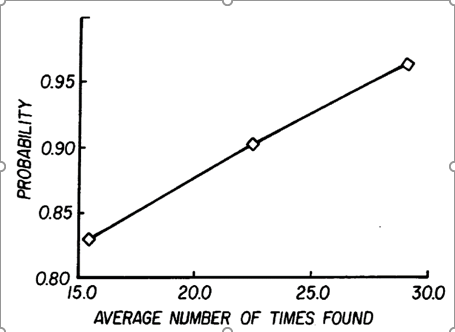

This place covers:

- Resistance; Resistance to attacks or distortions; Distortion compensation.

- Strength.

- Collusion attacks; Average attacks; Averaging.

- Reliable detection, e.g. with reduced likelihood of false positive/negative.

Illustrative example - Watermarking an image using the difference of average intensity of two adjacent blocks (EP1927948 - FUJITSU LTD):

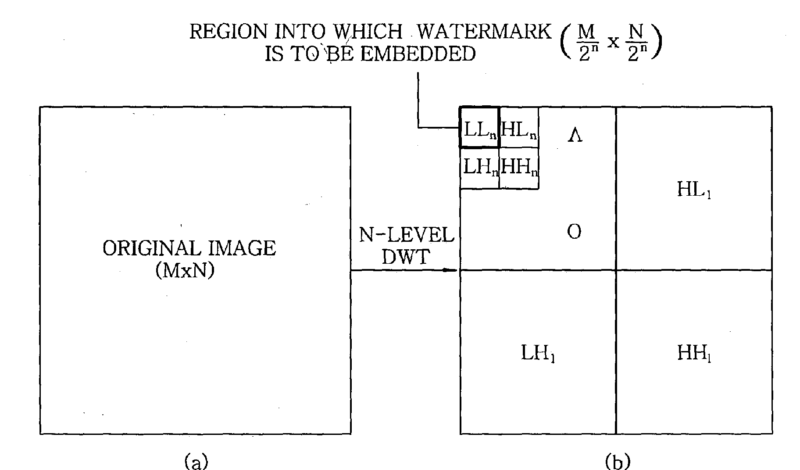

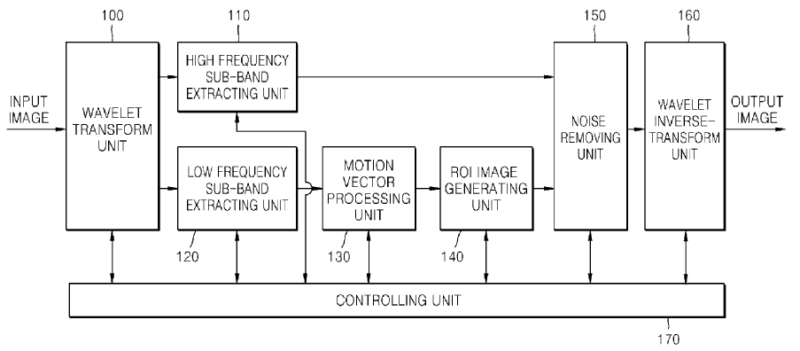
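The block-difference principle named in the EP1927948 example can be illustrated with a minimal sketch; it is not the method actually claimed in that document. One bit is carried by the sign of the difference between the mean intensities of two horizontally adjacent blocks. The sketch assumes an 8-bit greyscale image held as a list of rows; the function names and the `margin` parameter are hypothetical.

```python
def block_mean(img, top, left, size):
    """Mean intensity of the size x size block whose top-left pixel is (top, left)."""
    total = sum(img[r][c] for r in range(top, top + size) for c in range(left, left + size))
    return total / (size * size)


def embed_bit(img, top, left, size, bit, margin=4.0):
    """Hypothetical embedder: carry one bit in two horizontally adjacent blocks.

    bit 1 -> mean(left block) - mean(right block) >= margin
    bit 0 -> mean(left block) - mean(right block) <= -margin
    Returns a modified copy; pixel values are rounded and clipped to 0..255.
    """
    out = [row[:] for row in img]
    diff = block_mean(img, top, left, size) - block_mean(img, top, left + size, size)
    target = margin if bit else -margin
    # Adjust only when the current difference does not already encode the bit.
    if (bit and diff < margin) or (not bit and diff > -margin):
        shift = (target - diff) / 2.0  # split the correction between the two blocks
        for r in range(top, top + size):
            for c in range(left, left + size):
                out[r][c] = min(255, max(0, round(out[r][c] + shift)))
            for c in range(left + size, left + 2 * size):
                out[r][c] = min(255, max(0, round(out[r][c] - shift)))
    return out


def detect_bit(img, top, left, size):
    """Recover the bit from the sign of the block-mean difference."""
    diff = block_mean(img, top, left, size) - block_mean(img, top, left + size, size)
    return 1 if diff > 0 else 0
```

Clipping at 0 or 255 can shrink the achieved difference in very dark or very bright regions, which is one reason a practical scheme would adapt the margin to the local image content.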

This place covers:

Watermarking techniques for JPEG or MPEG or for a wavelet transformed image.

Illustrative example - Embedding a watermark in a DC component region of a wavelet transformed image (US2004047489 - KOREA ELECTRONICS TELECOMM):

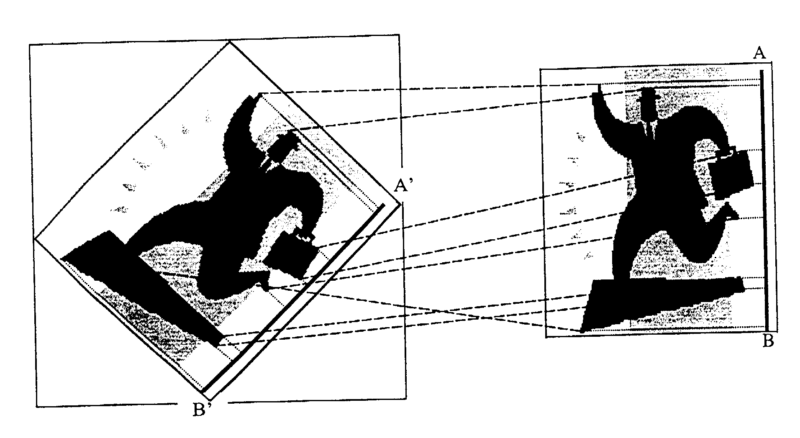

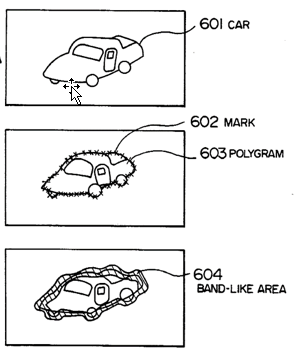

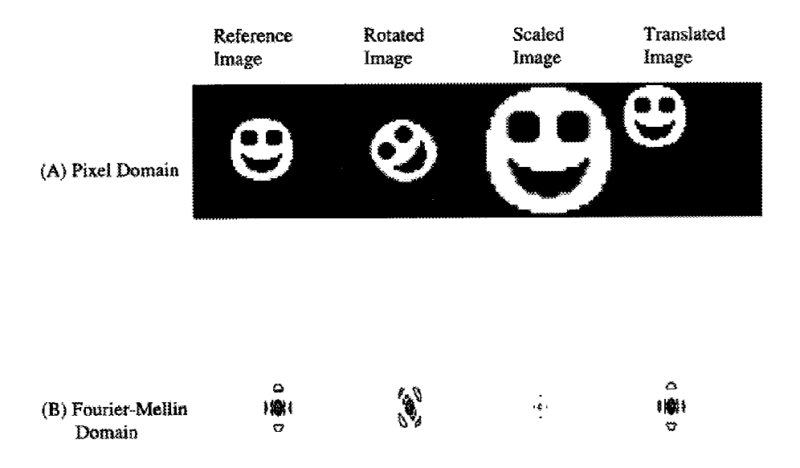

This place covers:

- Robust against resizing or rotation or cropping, etc.

- Determining the rescaling factor or rotation angle by using the watermarks so as to compensate the image, i.e. as a calibration signal.

- Desynchronization attacks.

Illustrative example - Combining a reference mark with an identification mark and embedding them in image textures to detect the applied transformations (GB2378602 - CENTRAL RESEARCH LAB LTD):

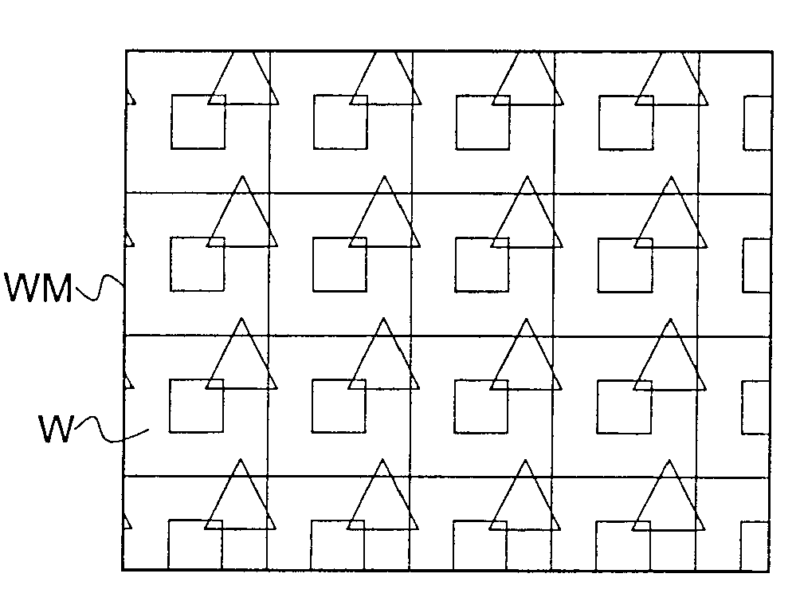

This place covers:

- Many, possibly different, watermarks on the same image, e.g. for copy or distribution control.

- Same watermark repeated on different parts of the image.

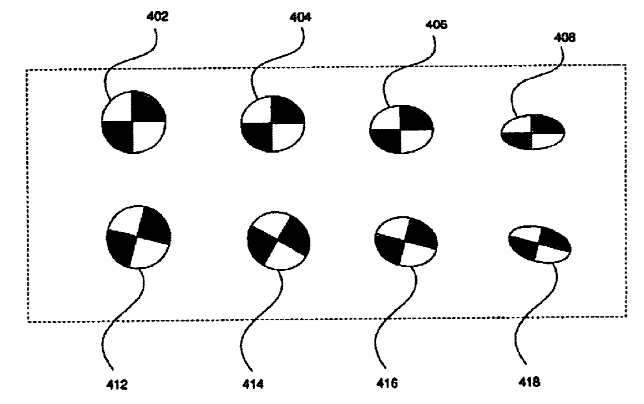

Illustrative example - Encoding payload in relative positions and/or polarities of multiple embedded watermarks (WO0111563 - KONINKL PHILIPS ELECTRONICS NV):

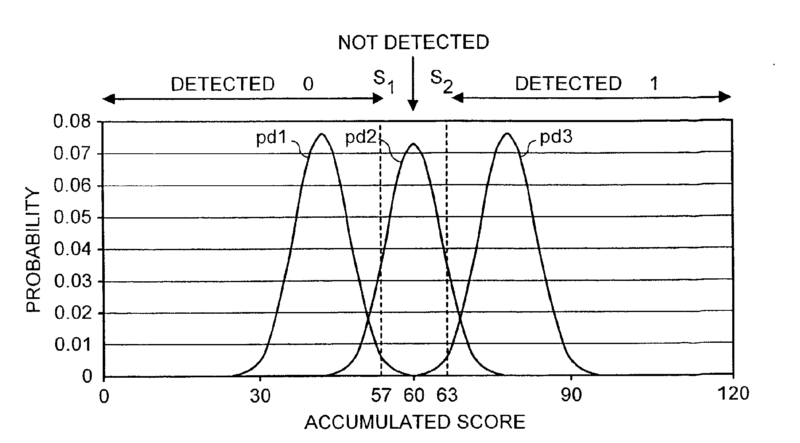

This place covers:

Using thresholds to define ranges of detection probability or ranges of robustness.

Illustrative example - Multiple thresholds for reducing false detection likelihood (EP1271401 - SONY UK LTD):

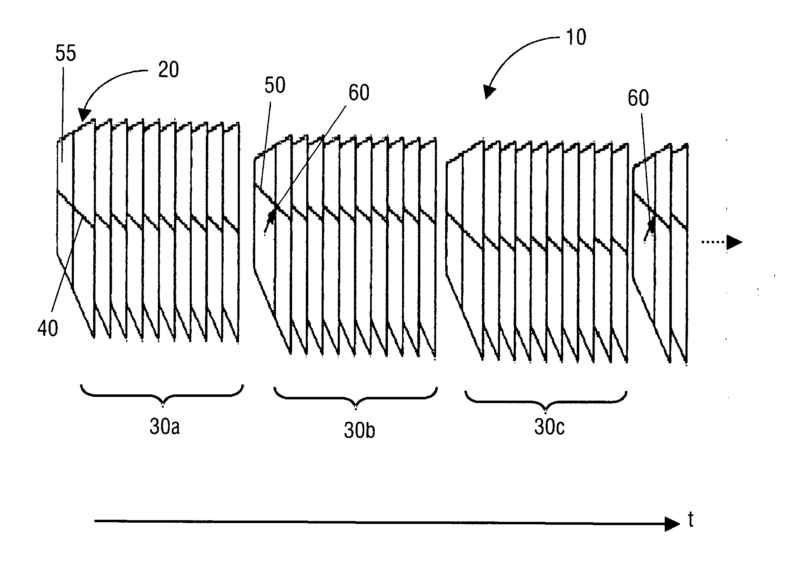

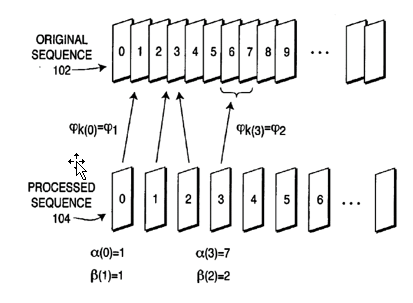

This place covers:

Watermarks spread over several images or frames or a sequence.

Illustrative example - Alternating watermark patterns (e.g. by translation, mirror, rotation) to improve the reliability of scale factor measurement (WO2005109338 - KONINKL PHILIPS ELECTRONICS NV):

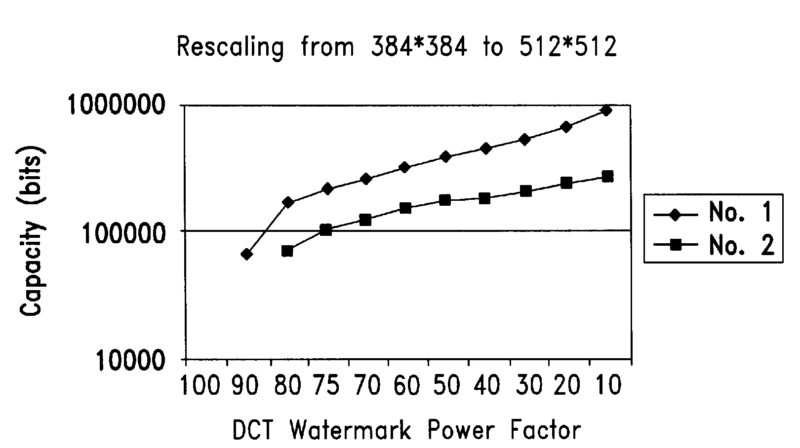

This place covers:

Illustrative example - Calculating the capacity of DCT coefficients of a digital image file and selecting those suitable for embedding, thereby providing robustness (US6724913 - HSU WEN-HSING):

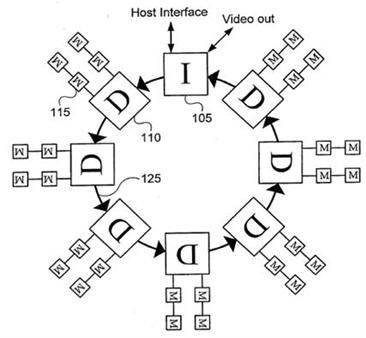

This place covers:

- Graphics accelerators; Graphics processing units (GPUs).

- Graphics pipelines.

- Parallel or massively parallel data bus specially adapted for image data processing.

- Architecture or signal processor specially adapted for image data processing.

- VLSI or SIMD or fine-grained machines specially adapted for image data processing.

- Multiprocessor or multicomputer or multi-core specially adapted for image data processing.

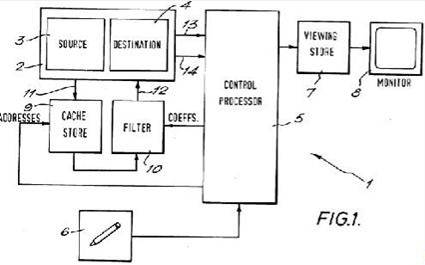

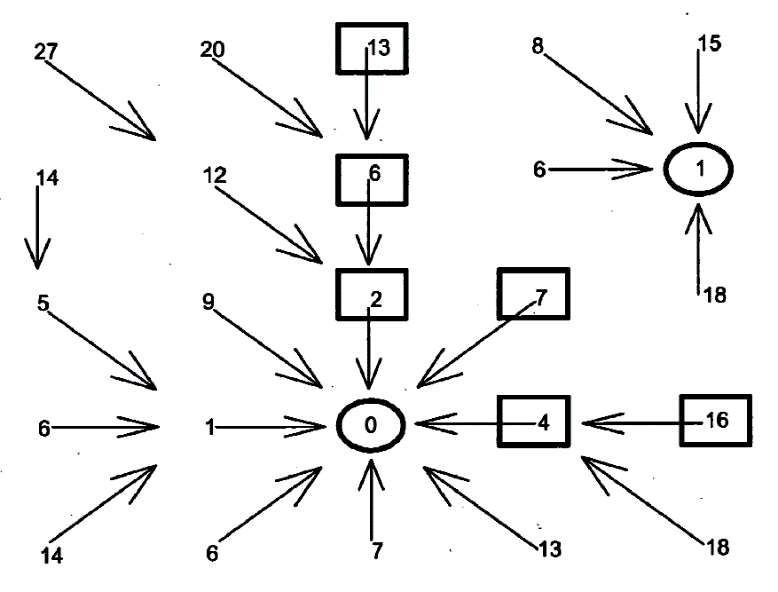

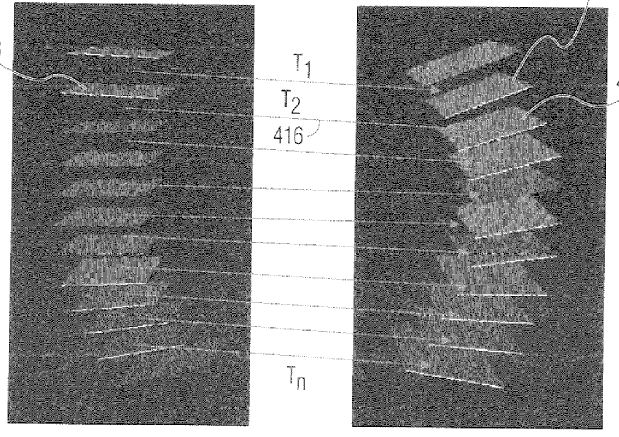

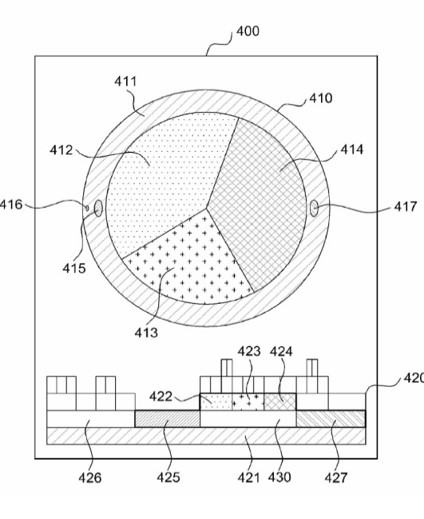

Illustrative example - Ring architecture for image data processing:

Attention is drawn to the following places, which may be of interest for search:

- Architectures of general purpose stored program computers

In this place, the following terms or expressions are used with the meaning indicated:

| Term | Meaning |
|---|---|
| Pipelining | The use of a sequence (pipeline) of image processing stages for execution of instructions in a series of units, arranged so that several units can be used for simultaneously processing appropriate parts of several instructions. |
| Multiprocessor | Processor arrangements comprising a computer system consisting of two or more processors for the simultaneous execution of two or more programs or sequences of instructions. |

In patent documents, the following abbreviations are often used:

| Abbreviation | Meaning |
|---|---|
| GPU | Graphics Processing Unit |

This place covers:

- Address generation or addressing circuit or BitBlt for image data processing.

- 3D or virtual or cache memory specially adapted for image data processing.

- Frame or screen or image memory specially adapted for image data processing.

Illustrative example - Cache memory for image processing (EP0589724 - QUANTEL LTD)

This place does not cover:

- Accessing, addressing or allocating within memory systems or architectures

- Ping-pong buffers

- Arrangements for selecting an address in a digital store

- Digital stores characterised by the use of particular electric or magnetic storage elements

This place covers:

Geometric image transformations in the plane of the image.

Attention is drawn to the following places, which may be of interest for search:

- Image enhancement or restoration

- Image animation

- Geometric effects for 3D image rendering

- Perspective computation for 3D image rendering

- Geographic models in 3D modelling for computer graphics

- Matrix or vector computation

- Conversion of standards for television systems

This place covers:

- Affine transformations not further specified.

- Combinations of affine transformations including rotation, scaling or shear.
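Combinations of affine transformations are conveniently expressed as products of 3x3 matrices in homogeneous coordinates. The sketch below is illustrative only: it assumes a y-up coordinate frame and column vectors, so the right-most matrix in a product is applied first, and all function names are hypothetical.

```python
import math

IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]


def matmul(a, b):
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def rotation(theta):
    """Counter-clockwise rotation by theta radians (y-up frame)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def scaling(sx, sy):
    return [[sx, 0.0, 0.0], [0.0, sy, 0.0], [0.0, 0.0, 1.0]]


def shear(kx, ky):
    """x' = x + kx*y, y' = y + ky*x."""
    return [[1.0, kx, 0.0], [ky, 1.0, 0.0], [0.0, 0.0, 1.0]]


def translation(tx, ty):
    return [[1.0, 0.0, tx], [0.0, 1.0, ty], [0.0, 0.0, 1.0]]


def compose(*matrices):
    """M = M1 @ M2 @ ... @ Mn, so Mn is applied to the point first."""
    result = IDENTITY
    for m in matrices:
        result = matmul(result, m)
    return result


def apply(m, x, y):
    """Map the point (x, y) through the affine matrix m."""
    return (m[0][0] * x + m[0][1] * y + m[0][2],
            m[1][0] * x + m[1][1] * y + m[1][2])
```

For example, `compose(translation(10.0, 0.0), rotation(math.pi / 2), scaling(2.0, 2.0))` scales first, then rotates, then translates, mapping (1, 0) to approximately (10, 2). In image coordinates where rows grow downwards, the same positive angle appears as a clockwise turn on screen.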

This place does not cover:

- Transformations for image registration using affine transformations

- Image mosaicing, e.g. composing plane images from plane sub-images

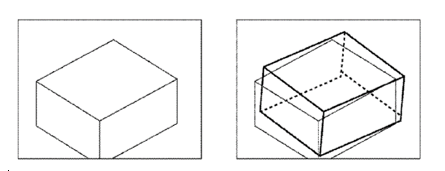

This place covers:

- Selective warping according to an importance map; Smart image reduction.

- Seam carving; Liquid resizing; Image retargeting.
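Seam carving can be sketched as repeatedly deleting the connected top-to-bottom path of lowest energy, found by dynamic programming, so that low-detail regions shrink while strong edges are kept. This sketch assumes a greyscale image held as a list of rows and a simple gradient-magnitude energy; the energy choice and all names are illustrative assumptions rather than a prescribed method.

```python
def energy(img):
    """|horizontal difference| + |vertical difference|, clamping at the borders."""
    h, w = len(img), len(img[0])
    e = [[0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            left = img[r][c - 1] if c > 0 else img[r][c]
            right = img[r][c + 1] if c < w - 1 else img[r][c]
            up = img[r - 1][c] if r > 0 else img[r][c]
            down = img[r + 1][c] if r < h - 1 else img[r][c]
            e[r][c] = abs(right - left) + abs(down - up)
    return e


def find_vertical_seam(e):
    """Cheapest 8-connected top-to-bottom path: one column index per row."""
    h, w = len(e), len(e[0])
    cost = [row[:] for row in e]
    for r in range(1, h):
        for c in range(w):
            cost[r][c] += min(cost[r - 1][max(c - 1, 0):min(c + 2, w)])
    # Backtrack upwards from the cheapest cell of the bottom row.
    seam = [min(range(w), key=lambda c: cost[h - 1][c])]
    for r in range(h - 2, -1, -1):
        prev = seam[-1]
        seam.append(min(range(max(prev - 1, 0), min(prev + 2, w)), key=lambda c: cost[r][c]))
    seam.reverse()
    return seam


def remove_vertical_seam(img, seam):
    return [row[:c] + row[c + 1:] for row, c in zip(img, seam)]


def carve_width(img, n):
    """Reduce the width by n pixels, recomputing the energy after each removal."""
    for _ in range(n):
        img = remove_vertical_seam(img, find_vertical_seam(energy(img)))
    return img
```

On an image with a flat region and a step edge, the seams run through the flat region and the edge survives the reduction.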

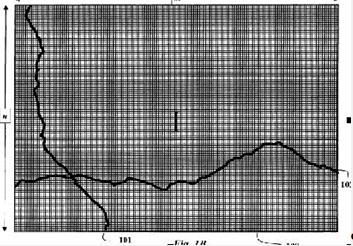

Illustrative example of subject matter classified in this place:

This place does not cover:

- Panospheric to cylindrical image transformation

This place covers:

Establishing a lens for a region-of-interest.

Illustrative example of subject matter classified in this place:

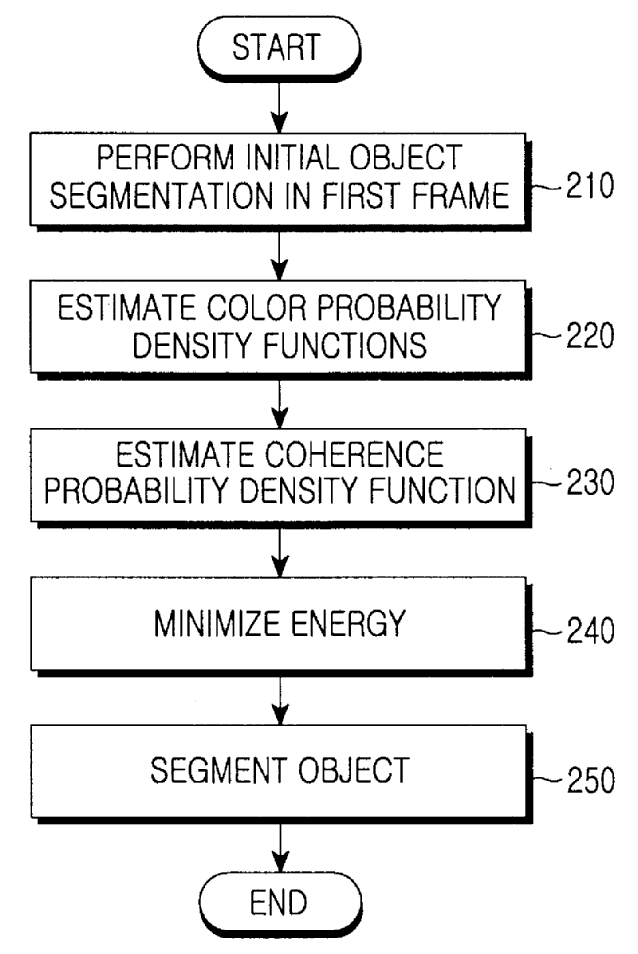

This place covers:

- Side or corner panels; Perspective wall.

- Document lens.

Illustrative example of subject matter classified in this place:

This place does not cover:

- Fisheye, wide-angle transformation

This place covers:

Flattening the scanned image of a bound book.

Illustrative example of subject matter classified in this place:

Attention is drawn to the following places, which may be of interest for search:

- Panospheric to cylindrical image transformation

- Texture mapping

- Manipulating 3D models or images for computer graphics

This place covers:

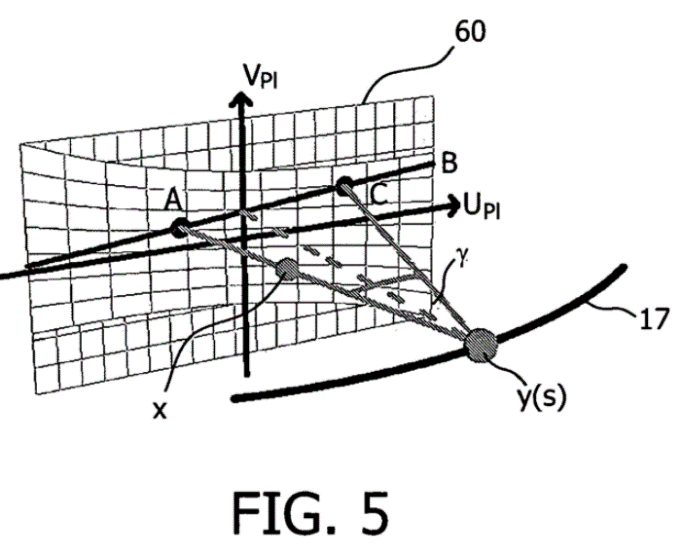

Curved planar reformation [CPR].

Attention is drawn to the following places, which may be of interest for search:

- Manipulating 3D models or images for computer graphics

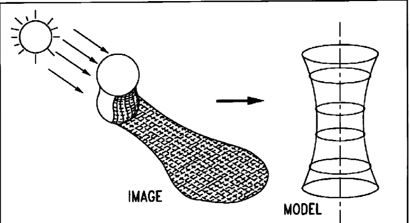

This place covers:

Mapping a surface of revolution to a plane, e.g. mapping a pot or a can to a plane.

Illustrative example of subject matter classified in this place:

This place covers:

- Geometric image transformation for projecting an image on a multi-projector system or on a geodetic screen; Dome imaging.

- Geometric image transformation for projecting an image through multi-planar displays.

Attention is drawn to the following places, which may be of interest for search:

- Texture mapping

This place covers:

- Selecting the interpolation method depending on the scale factor.

- Selecting the interpolation method depending on media type or image appearance characteristics.

Illustrative example of subject matter classified in this place:

This place covers:

- Omnidirectional or hyperboloidal to cylindrical image transformation or mapping; Catadioptric transformation, e.g. images from surveillance cameras.

- Panospheric image transformation or mapping by using the output of a multiple-camera system.

Illustrative example of subject matter classified in this place:

This place covers:

Geometric image transformations:

- for iterative image registration;

- for spline-based image registration;

- for mutual-information-based registration;

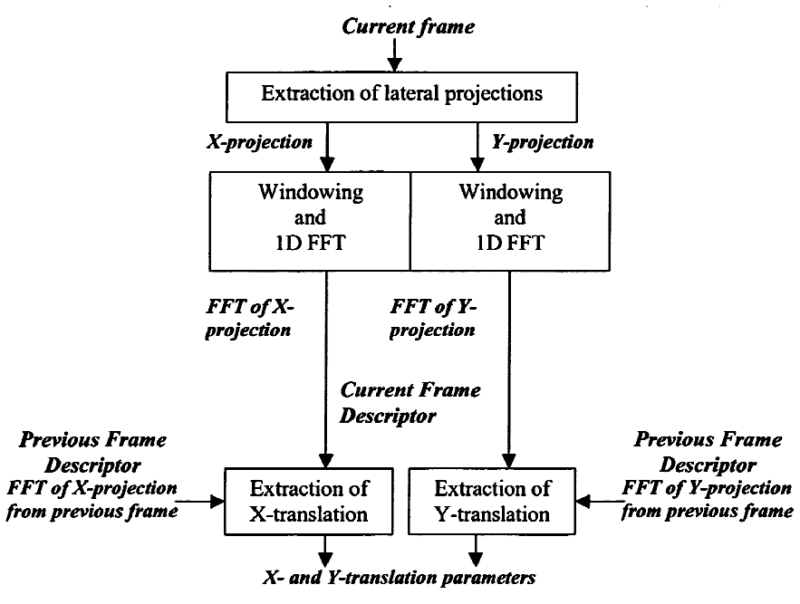

- for phase correlation or FFT-based methods;

- using fiducial points, e.g. landmarks;

- for maximised mutual information-based methods.

Attention is drawn to the following places, which may be of interest for search:

- Determination of transform parameters for the alignment of images, i.e. image registration

This place covers:

- Elastic mapping or snapping or matching; Deformable mapping.

- Diffeomorphic representations of deformations to control the image registration process.

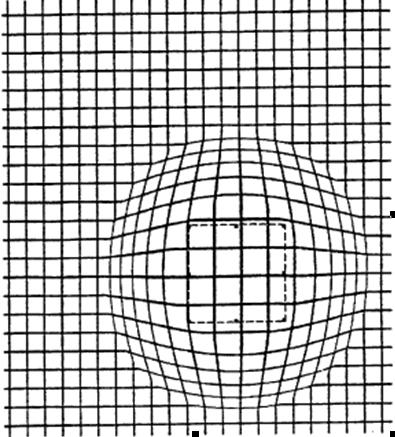

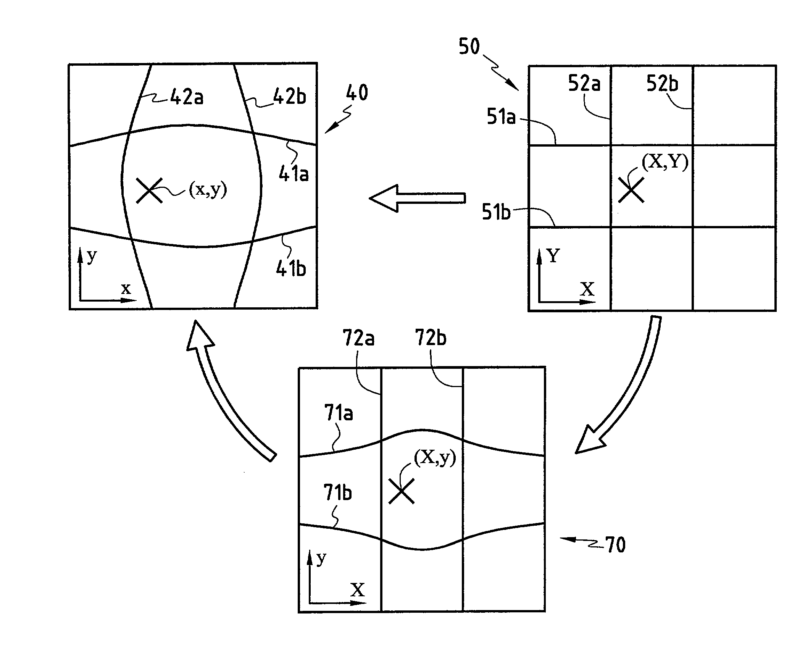

Illustrative example of subject matter classified in this place:

This place covers:

- Video cubism; Video cube.

- Dynamic panoramic video.

- Stylized video cubes.

Attention is drawn to the following places, which may be of interest for search:

- Image animation

This place covers:

- Resampling; Resolution conversion.

- Zooming or expanding or magnifying or enlarging or upscaling.

- Shrinking or reducing or compressing or downscaling.

- Pyramidal partitions; Storing sub-sampled copies.

- Area based or weighted interpolation; Scaling by surface fitting, e.g. piecewise polynomial surfaces, B-splines or Beta-splines.

- Two-step image scaling, e.g. by stretching.

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

- Control of means for changing angle of the field of view, e.g. optical zoom objectives or electronic zooming

Attention is drawn to the following places, which may be of interest for search:

- Polynomial surface description for image modeling

- Enlarging or reducing for scanning, transmission or reproduction of documents or the like, e.g. facsimile transmission

- Studio circuits for television systems involving alteration of picture size or orientation

- Frame rate conversion; De-interlacing

- Methods or arrangements for coding, decoding, compressing or decompressing digital video signals using predictive coding involving spatial sub-sampling or interpolation, e.g. alteration of picture size or resolution

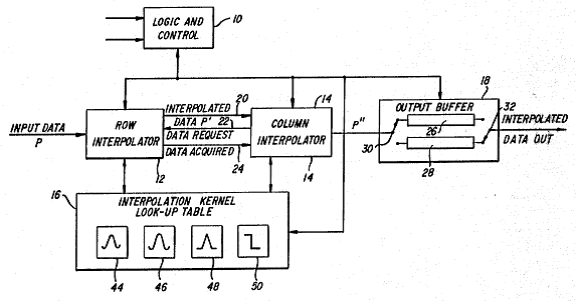

This place covers:

- Linear or bi-linear or tetrahedral or cubic image interpolation.

- Adaptive interpolation, e.g. the coefficients of the interpolation depend on the pattern of the local structure.
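Bilinear interpolation, one of the methods listed above, weights the four surrounding pixels by their distance to the sampling point. A minimal sketch, assuming a greyscale list-of-rows image, clamping at the borders and a pixel-centre alignment convention for resizing (other conventions exist); the function names are hypothetical and adaptive interpolation is not shown.

```python
import math


def bilinear(img, x, y):
    """Sample img at real-valued (x, y), with x = column and y = row.

    Coordinates are clamped to the image so border samples stay defined.
    """
    h, w = len(img), len(img[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx       # interpolate along the upper row
    bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx    # interpolate along the lower row
    return top * (1 - fy) + bottom * fy                   # then between the two rows


def resize_bilinear(img, new_w, new_h):
    """Map each output pixel centre back into input coordinates and sample it."""
    h, w = len(img), len(img[0])
    sx, sy = w / new_w, h / new_h
    return [[bilinear(img, (c + 0.5) * sx - 0.5, (r + 0.5) * sy - 0.5)
             for c in range(new_w)] for r in range(new_h)]
```

For the 2x2 image `[[0, 10], [20, 30]]`, sampling at its centre (0.5, 0.5) gives 15.0, the average of the four pixels.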

Illustrative example of subject matter classified in this place:

This place does not cover:

- Image demosaicing, e.g. colour filter arrays [CFA] or Bayer patterns

- Edge-driven scaling; Edge-based scaling

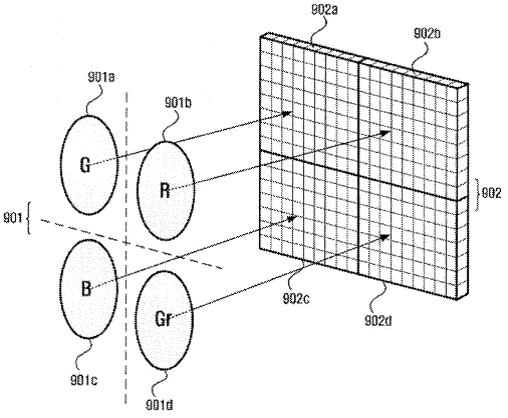

This place covers:

- CFA demosaicing or demosaicking or interpolating.

- Bayer pattern.

- Colour-separated images, i.e. one colour in each image quadrant.
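A minimal sketch of bilinear CFA demosaicing, restricted to the green channel for brevity. It assumes an RGGB Bayer layout stored as a single-channel list of rows; the layout, the neighbour-averaging rule and the function names are assumptions for illustration. The red and blue planes would be filled in a similar way from their own, sparser samples.

```python
def bayer_colour(r, c):
    """Filter colour at (r, c) for an assumed RGGB layout:
    even rows R G R G ..., odd rows G B G B ..."""
    if r % 2 == 0:
        return "R" if c % 2 == 0 else "G"
    return "G" if c % 2 == 0 else "B"


def interpolate_green(raw):
    """Full-resolution green plane from a single-channel Bayer mosaic.

    Green samples are kept; at R and B sites the green value is the mean of
    the 4-connected neighbours inside the image (all green in RGGB).
    """
    h, w = len(raw), len(raw[0])
    green = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            if bayer_colour(r, c) == "G":
                green[r][c] = float(raw[r][c])
            else:
                neighbours = [raw[rr][cc]
                              for rr, cc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
                              if 0 <= rr < h and 0 <= cc < w]
                green[r][c] = sum(neighbours) / len(neighbours)
    return green
```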

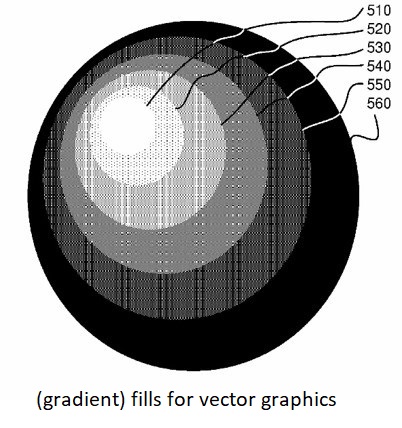

Illustrative examples of subject matter classified in this place:

1. Image demosaicing

2. Colour-separated image

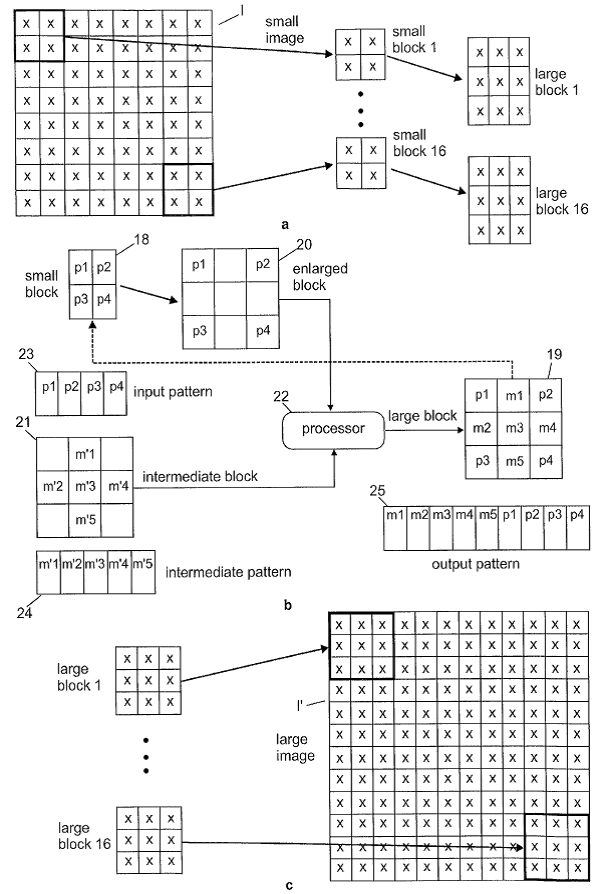

This place covers:

- Pixel or row deletion or removal.

- Pixel or row insertion or duplication or replication.

- Decimating FIR filters.

- Array indexes or tables, e.g. LUT.

Illustrative example of subject matter classified in this place:

Decimating by using two arrays of indexes

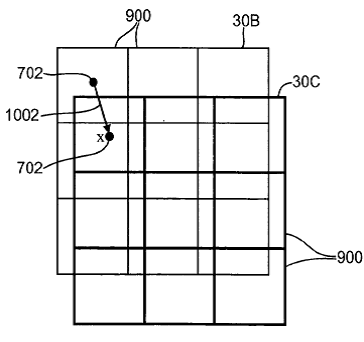
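Decimation with two index arrays can be sketched as one array selecting the input rows and another selecting the input columns; the same mechanism deletes pixels when shrinking and replicates them when enlarging. The centre-aligned nearest-neighbour rule used to fill the arrays is an assumption, and the function names are hypothetical.

```python
def decimation_indices(src_len, dst_len):
    """Nearest source index for each destination position (centre-aligned)."""
    return [min(src_len - 1, int((i + 0.5) * src_len / dst_len)) for i in range(dst_len)]


def decimate(img, new_w, new_h):
    """Resample a list-of-rows image through a row-index array and a column-index array."""
    rows = decimation_indices(len(img), new_h)     # which input rows to keep
    cols = decimation_indices(len(img[0]), new_w)  # which input columns to keep
    return [[img[r][c] for c in cols] for r in rows]
```

Because the index arrays depend only on the sizes, they can be computed once, or stored as look-up tables, and reused for every image of the same dimensions.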

This place covers:

- Edge adaptive or directed or dependent or following or preserving interpolation; Edge preservation.

- Edge map injecting or projecting or combining or superimposing.

Illustrative example of subject matter classified in this place:

Correcting for abnormalities next to boundaries

This place covers:

- Image mosaicing or mosaiking.

- Panorama views.

- Mosaic of video sequences; Salient video still; Video collage or synopsis.

Illustrative example of subject matter classified in this place:

Image mosaicing for microscopy applications

Attention is drawn to the following places, which may be of interest for search:

- Image processing arrangements associated with discharge tubes with provision for introducing objects or material to be exposed to the discharge

This place covers:

- Using neural networks specially adapted for image interpolation.

- Using neural networks specially adapted for interpolation coefficient selection.

Illustrative example of subject matter classified in this place:

Using a neural network to select the coefficients of a polynomial interpolation

Attention is drawn to the following places, which may be of interest for search:

- Image enhancement or restoration using machine learning, e.g. neural networks

- Neural networks

- Machine learning

- Arrangements for image or video recognition or understanding using pattern recognition or machine learning using neural networks

This place covers:

- Super resolution by fitting the pixel intensity to a mathematical function.

- Super resolution from image sequences; Images or frames addition, coaddition or combination.

- Super resolution by iteratively applying constraints, e.g. energy reduction, on the transform domain and inverse transforming.

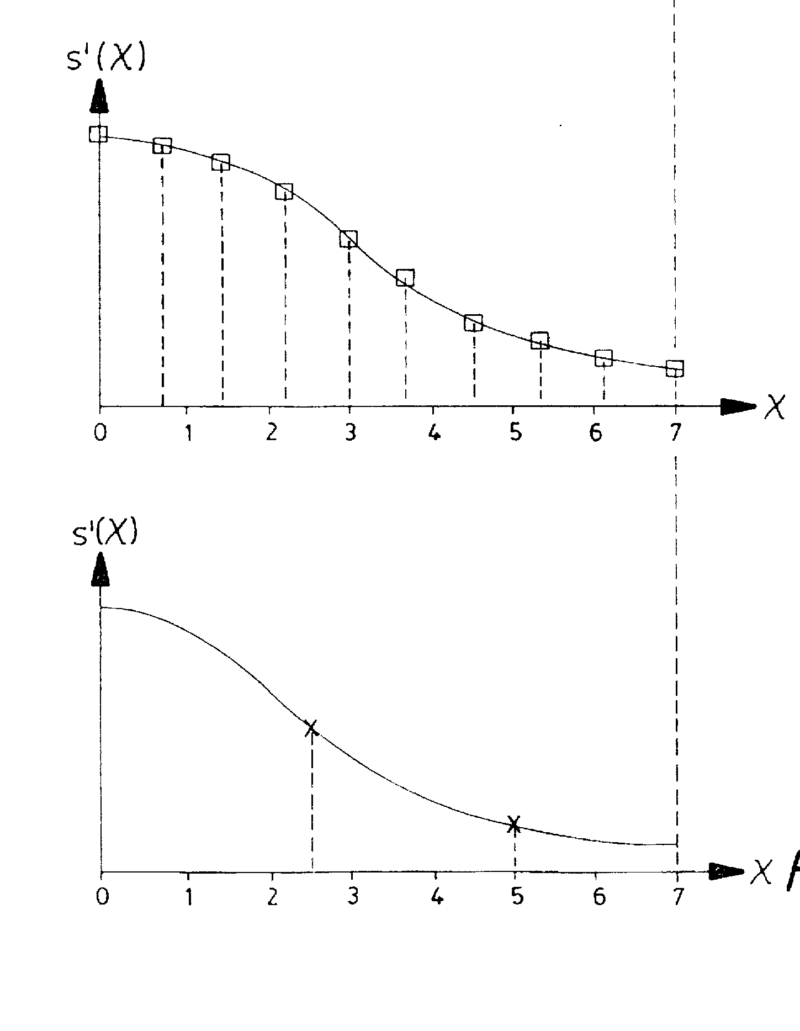

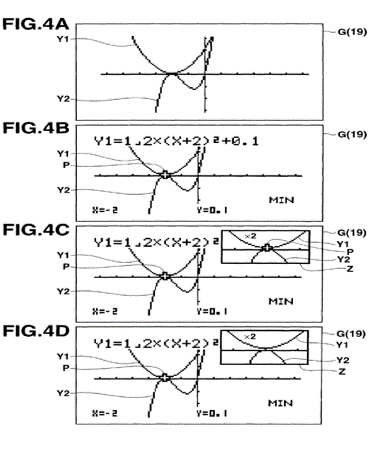

Illustrative example of subject matter classified in this place:

Fitting a mathematical function and resampling:

Attention is drawn to the following places, which may be of interest for search:

- Image enhancement or restoration using two or more images, e.g. averaging or subtraction

This place covers:

Fusion of multi-sensor or multiband images.

This place covers:

Illustrative example of subject matter classified in this place:

Displaying sub-frames at spatially offset positions

This place covers:

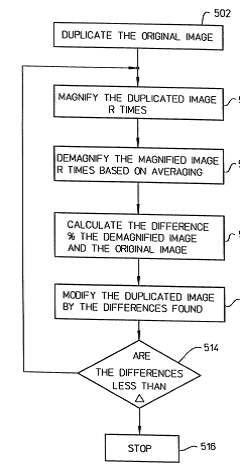

Illustrative example of subject matter classified in this place:

Iterative correction of the high-resolution image:

This place covers:

- DCT coefficients decimation or insertion for image scaling.

- Zero padding DCT coefficients for image scaling.

- Downscaling by selecting a specific wavelet sub-band.

Illustrative example of subject matter classified in this place:

Enlargement/reduction through DCT interpolation/decimation
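The DCT-based enlargement and reduction idea can be sketched in one dimension: transform, zero-pad (or truncate) the coefficient vector to the target length, rescale and invert. This sketch assumes the orthonormal DCT-II and a gain of sqrt(m/n), which keeps the mean intensity unchanged; a 2D image would be processed separably, rows then columns. The names are hypothetical, and the direct O(n^2) transform is used for clarity rather than speed.

```python
import math


def dct2(x):
    """Orthonormal DCT-II of a 1-D sequence."""
    n = len(x)
    out = []
    for k in range(n):
        s = sum(x[i] * math.cos(math.pi * (i + 0.5) * k / n) for i in range(n))
        out.append((math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)) * s)
    return out


def idct2(coeffs):
    """Inverse of the orthonormal DCT-II (an orthonormal DCT-III)."""
    n = len(coeffs)
    return [math.sqrt(1.0 / n) * coeffs[0]
            + sum(math.sqrt(2.0 / n) * coeffs[k] * math.cos(math.pi * (i + 0.5) * k / n)
                  for k in range(1, n))
            for i in range(n)]


def dct_resize(x, m):
    """Resize a 1-D signal to length m in the DCT domain."""
    n = len(x)
    coeffs = dct2(x)
    coeffs = (coeffs + [0.0] * (m - n))[:m]  # zero padding to enlarge, truncation to reduce
    gain = math.sqrt(m / n)                  # preserves the mean (DC) level
    return idct2([gain * v for v in coeffs])
```

Zero padding adds no new high-frequency content, so an enlarged signal is a smooth interpolation of the original, while truncation acts as an ideal low-pass filter before reduction.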

This place covers:

Adapting the image resolution to the client's capabilities.

Illustrative example of subject matter classified in this place:

In the figure above, the processing unit is coupled downstream of the video cross-point switcher and generates additionally scaled video streams by applying further video scaling to the initially scaled video stream.

Attention is drawn to the following places, which may be of interest for search:

- Methods or arrangements for coding, decoding, compressing or decompressing digital video signals using adaptive coding

- Methods or arrangements for coding, decoding, compressing or decompressing digital video signals using video transcoding, i.e. partial or full decoding of a coded input stream followed by re-encoding of the decoded output stream

- Server adapted for processing of video elementary streams, involving reformatting operations of video signals for distribution or compliance with end-user requests or end-user device requirements

- Selective content distribution in client devices adapted for processing of additional data

- Selective content distribution in client devices adapted for processing of video elementary streams involving reformatting operations of video signals for household redistribution, storage or real-time display

This place covers:

- Transpose or continuous write-transpose-read.

- Mirror.

- Run-length (RL) rotation.
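Quarter-turn rotations reduce to the transpose and mirror operations listed above. A minimal sketch on a list-of-rows image; the function names are hypothetical and run-length rotation is not shown.

```python
def transpose(img):
    """Swap rows and columns."""
    return [list(col) for col in zip(*img)]


def mirror(img):
    """Horizontal mirror: reverse every row."""
    return [row[::-1] for row in img]


def rotate90_cw(img):
    """Clockwise quarter turn (as displayed, rows downwards) = transpose, then mirror."""
    return mirror(transpose(img))


def rotate90_ccw(img):
    """Counter-clockwise quarter turn = mirror, then transpose."""
    return transpose(mirror(img))
```

For `[[1, 2, 3], [4, 5, 6]]`, `rotate90_cw` yields `[[4, 1], [5, 2], [6, 3]]`.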

Attention is drawn to the following places, which may be of interest for search:

- Scanning, transmission or reproduction of documents involving composing, repositioning or otherwise modifying originals

- Studio circuits for television

This place covers:

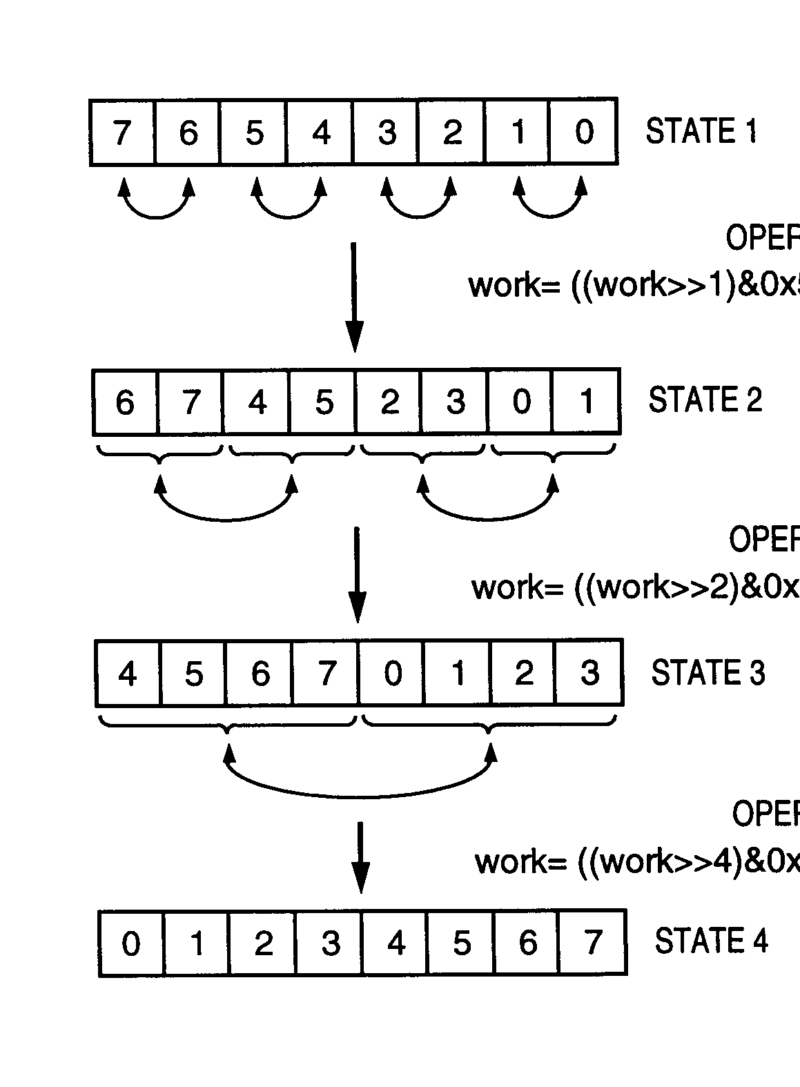

Illustrative example of subject matter classified in this place:

Rotation by recursive reversing

This place covers:

Illustrative example of subject matter classified in this place:

Continuous read-transpose-write

This place covers:

- Shift processing;

- Rotation by shearing.

Illustrative example of subject matter classified in this place:

Image rotation by two-pass de-skewing
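Rotation by shearing is commonly written as three one-dimensional shears, R(θ) = Sx(-tan(θ/2)) · Sy(sin θ) · Sx(-tan(θ/2)), where each shear shifts only whole rows or only whole columns. The sketch below applies this three-pass decomposition to a single point, for |θ| < π; it illustrates the general principle and is not necessarily the technique of the two-pass example above. The names are hypothetical.

```python
import math


def three_shear_factors(theta):
    """Shear factors (alpha, beta) such that R(theta) = Sx(alpha) Sy(beta) Sx(alpha)."""
    return -math.tan(theta / 2.0), math.sin(theta)


def rotate_point_by_shears(x, y, theta):
    """Rotate (x, y) counter-clockwise (y-up frame) using three shear passes."""
    alpha, beta = three_shear_factors(theta)
    x = x + alpha * y  # pass 1: horizontal shear, each row shifted by alpha * y
    y = y + beta * x   # pass 2: vertical shear, each column shifted by beta * x
    x = x + alpha * y  # pass 3: horizontal shear again
    return x, y
```

Applied to a raster, each pass becomes a per-row or per-column shift (possibly with sub-pixel interpolation), which is why shear-based rotation suits line-oriented hardware.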

This place covers:

Image enhancement or restoration:

- using non-spatial domain filtering;

- using local operators;

- using morphological operators, i.e. erosion or dilation;

- using histogram techniques;

- using two or more images, e.g. averaging or subtraction;

- using machine learning, e.g. neural networks;

- Denoising; Smoothing;

- Deblurring; Sharpening;

- Unsharp masking;

- Retouching; Inpainting; Scratch removal;

- Geometric correction;

- Dynamic range modification of images or parts thereof.

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

- Circuitry for compensating brightness variation in the scene in cameras or camera modules comprising electronic image sensors

- Camera processing pipelines in cameras or camera modules comprising electronic image sensors

- Noise processing, e.g. detecting, correcting, reducing or removing noise in circuitry of solid-state image sensors [SSIS]

Attention is drawn to the following places, which may be of interest for search:

- Neural networks

- Image preprocessing for image or video recognition or understanding

- Image processing adapted to be used in scanners, printers, photocopying machines, displays or similar devices, including composing, repositioning or otherwise modifying originals

- Picture signal circuits adapted to be used in scanners, printers, photocopying machines, displays or similar devices

- Processing of colour picture signals in scanners, printers, photocopying machines, displays or similar devices

- Computational photography systems, e.g. light-field imaging systems

This group focuses on image processing algorithms. Although such algorithms sometimes need to consider characteristics of the underlying image acquisition apparatus, inventions to the image acquisition apparatus per se are outside the scope of this group.

Whenever possible, additional information should be classified using one or more of the indexing codes from the ranges of G06T 2200/00 (see definitions re. G06T) or G06T 2207/00 (see definitions re. G06T 2207/00).

The classification symbol G06T 5/00 should be allocated to documents concerning:

- Interactive / multiple choice image processing, e.g. choosing outputs from multiple enhancement algorithms;

- Image restoration based on properties or models of the human vision system [HVS]

In patent documents, the following abbreviations are often used:

| Abbreviation | Meaning |
|---|---|
| HDR | high dynamic range |
| HDRI | high dynamic range imaging |
| HMM | hidden Markov model |
| PSF | point spread function |
| SDR | standard dynamic range |

This place covers:

All transform domain-based enhancement methods, e.g. using:

- Fourier transform, discrete Fourier transform [DFT] or fast Fourier transform [FFT];

- Hadamard transform;

- Discrete cosine transform [DCT];

- Wavelet transform, discrete wavelet transform [DWT].

Illustrative example of subject matter classified in this place:

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

- Picture signal generating by scanning motion picture films or slide opaques, e.g. for telecine

- Circuitry for compensating brightness variation in the scene in cameras or camera modules comprising electronic image sensors

- Camera processing pipelines in cameras or camera modules comprising electronic image sensors

- Noise processing, e.g. detecting, correcting, reducing, or removing noise in circuitry of solid-state image sensors [SSIS]

This place covers:

- Convolution with a mask or kernel in the spatial domain;

- High-pass filter, low-pass filter;

- Gauss filter, Laplace filter;

- Averaging filter, mean filter, blurring filter;

- Differential filters (e.g. Sobel operator);

- Median filter;

- Bilateral filter;

- Minimum, maximum or rank filtering;

- Wiener filter;

- Phase-locked loops, detectors, mixers;

- Recursive filter;

- Distance transforms;

- Local image processing architectures.
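Two of the local operators listed above, convolution with a kernel and the median filter, can be sketched on a 3x3 neighbourhood as follows. Border pixels use clamp-to-edge padding, which is an assumption (zero or mirror padding are also common); the names are hypothetical.

```python
def _pixel(img, r, c):
    """Clamp-to-edge access: out-of-range coordinates reuse the nearest border pixel."""
    h, w = len(img), len(img[0])
    return img[min(max(r, 0), h - 1)][min(max(c, 0), w - 1)]


def convolve3(img, kernel):
    """True 2D convolution with a 3x3 kernel (the kernel is flipped, unlike correlation)."""
    h, w = len(img), len(img[0])
    return [[sum(kernel[1 - dr][1 - dc] * _pixel(img, r + dr, c + dc)
                 for dr in (-1, 0, 1) for dc in (-1, 0, 1))
             for c in range(w)] for r in range(h)]


def median_filter3(img):
    """3x3 median filter: each output is the 5th of the 9 sorted neighbourhood values."""
    h, w = len(img), len(img[0])
    return [[sorted(_pixel(img, r + dr, c + dc)
                    for dr in (-1, 0, 1) for dc in (-1, 0, 1))[4]
             for c in range(w)] for r in range(h)]
```

The median filter removes an isolated impulse (salt-and-pepper noise) completely, whereas a linear averaging kernel would only spread it over its neighbours.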

Illustrative example of subject matter classified in this place:

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

- Picture signal generating by scanning motion picture films or slide opaques, e.g. for telecine

- Circuitry for compensating brightness variation in the scene in cameras or camera modules comprising electronic image sensors

- Camera processing pipelines in cameras or camera modules comprising electronic image sensors

- Noise processing, e.g. detecting, correcting, reducing, or removing noise in circuitry of solid-state image sensors [SSIS]

Attention is drawn to the following places, which may be of interest for search:

- Applying local operators during image preprocessing for image or video recognition or understanding

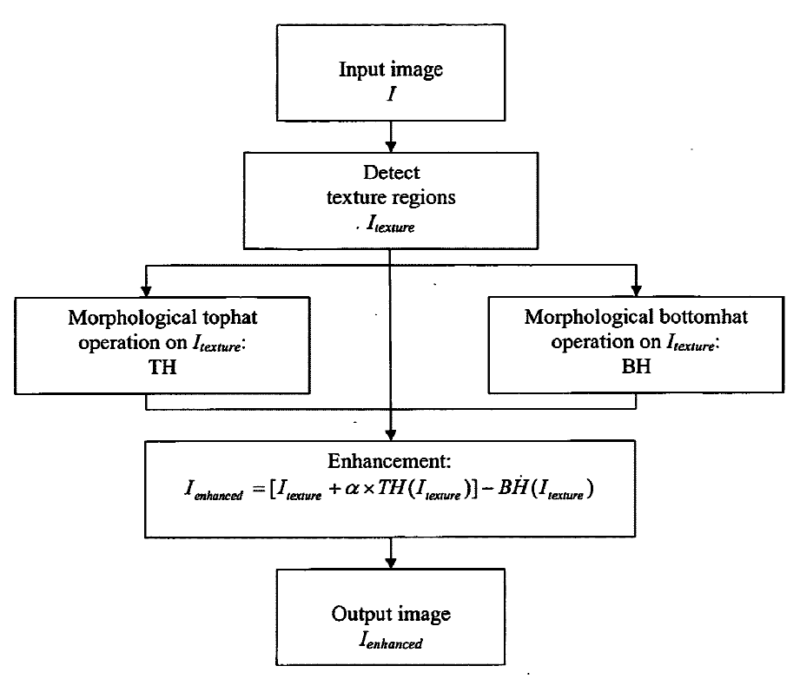

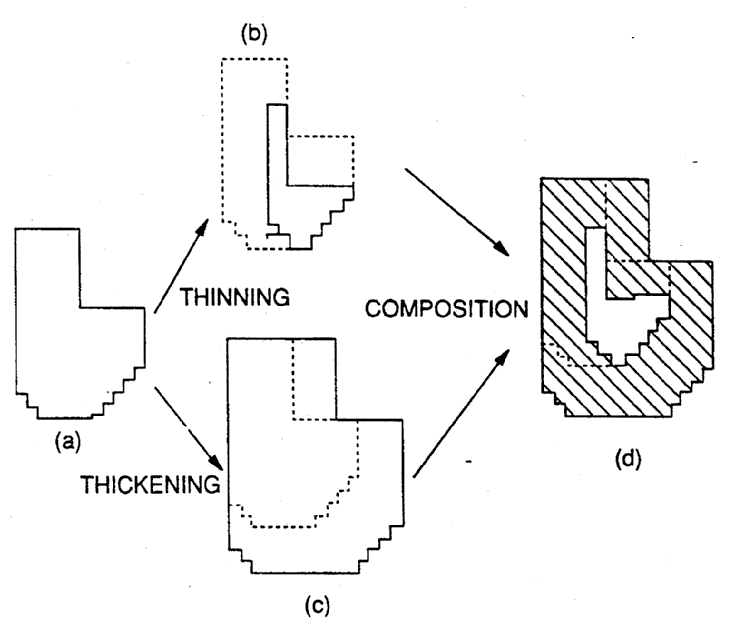

This place covers:

All morphology-based operations for image enhancement, e.g. using:

- Thickening, thinning;

- Opening, closing;

- Erosion, dilation;

- Structuring elements;

- Skeletons;

- Geodesic transforms.
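Erosion, dilation, opening and closing can be sketched for binary images with a 3x3 square structuring element. Pixels outside the image are simply ignored, which is one of several possible border conventions; the structuring element and all names are illustrative assumptions.

```python
def _neighbourhood(img, r, c):
    """Values under a 3x3 square structuring element centred on (r, c), inside the image."""
    h, w = len(img), len(img[0])
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            rr, cc = r + dr, c + dc
            if 0 <= rr < h and 0 <= cc < w:
                yield img[rr][cc]


def erode(img):
    """A pixel stays foreground only if the whole neighbourhood is foreground."""
    return [[int(all(_neighbourhood(img, r, c))) for c in range(len(img[0]))]
            for r in range(len(img))]


def dilate(img):
    """A pixel becomes foreground if any neighbour is foreground."""
    return [[int(any(_neighbourhood(img, r, c))) for c in range(len(img[0]))]
            for r in range(len(img))]


def opening(img):
    """Erosion then dilation: removes specks smaller than the structuring element."""
    return dilate(erode(img))


def closing(img):
    """Dilation then erosion: fills holes smaller than the structuring element."""
    return erode(dilate(img))
```

Opening a 3x3 square with one stray pixel nearby keeps the square and removes the stray pixel; closing a 3x3 square with a one-pixel hole fills the hole.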

Illustrative examples of subject matter classified in this place:

1.

2.

Attention is drawn to the following places, which may be of interest for search:

- Segmentation or edge detection involving morphological operators

- Smoothing or thinning of patterns during image preprocessing for image or video recognition or understanding

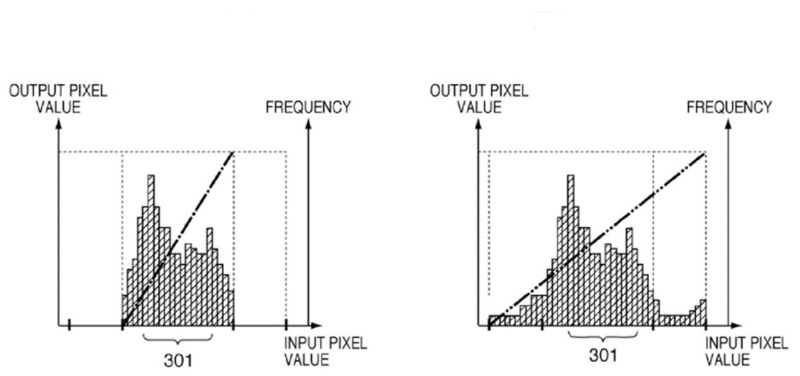

This place covers:

All histogram-based image enhancement methods.
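Global histogram equalisation is a typical member of this group: grey levels are remapped through the cumulative histogram so that the output uses the full intensity range more evenly. A minimal sketch, assuming an integer-valued greyscale list-of-rows image and the common mapping round((cdf(v) - cdf_min) / (N - cdf_min) · (L - 1)); the function name is hypothetical.

```python
def equalize_histogram(img, levels=256):
    """Global histogram equalisation of an integer greyscale image with values 0..levels-1."""
    pixels = [v for row in img for v in row]
    n = len(pixels)
    hist = [0] * levels
    for v in pixels:
        hist[v] += 1
    cdf, running = [0] * levels, 0
    for v in range(levels):  # cumulative distribution of grey levels
        running += hist[v]
        cdf[v] = running
    cdf_min = next(c for c in cdf if c > 0)  # count of the darkest occurring level
    if n == cdf_min:  # a single grey level: nothing to stretch
        return [row[:] for row in img]
    # Look-up table; levels below the darkest occurring one are unused and map to 0.
    lut = [round((cdf[v] - cdf_min) / (n - cdf_min) * (levels - 1)) if cdf[v] else 0
           for v in range(levels)]
    return [[lut[v] for v in row] for row in img]
```

For instance, the low-contrast image `[[50, 50], [51, 52]]` is stretched to `[[0, 0], [128, 255]]`.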

Illustrative example of subject matter classified in this place:

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

- Circuitry for compensating brightness variation in the scene in cameras or camera modules comprising electronic image sensors

- Camera processing pipelines in cameras or camera modules comprising electronic image sensors

Attention is drawn to the following places, which may be of interest for search:

- Dynamic range modification of images or parts thereof

- Histogram techniques adapted to be used in scanners, printers, photocopying machines, displays or similar devices

- Equalising the characteristics of different image components, e.g. their average brightness or colour balance, in stereoscopic or multi-view video systems

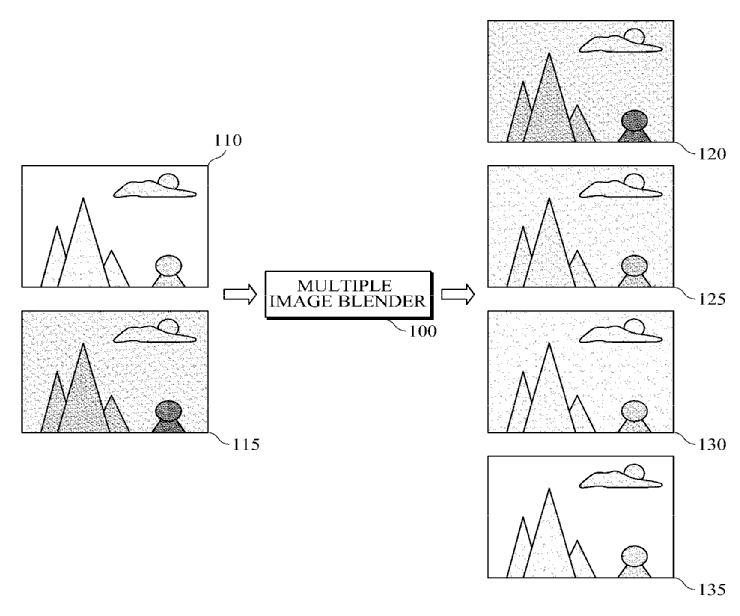

This place covers:

- Image averaging;

- Image fusion, image merging;

- Image subtraction;

- Enhanced final image by combining multiple, e.g. degraded, images, while maintaining the same number of pixels (for increased number of pixels: see G06T 3/40);

- Full-field focus from multiple depth-of-field images, e.g. from confocal microscopy;

- Processing of synthetic aperture radar [SAR] images;

- Energy subtraction;

- Bright field, dark field processing;

- Angiography image processing;

- High dynamic range [HDR] image processing;

- Multispectral image processing;

- Computational photography, e.g. coded aperture imaging.
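Image averaging and image subtraction are the simplest operations in this list. The sketch below implements both as pixel-wise operations on equally sized greyscale images stored as lists of rows, so the output keeps the same number of pixels as the inputs. The function names are illustrative only.

```python
def average_images(images):
    """Pixel-wise mean of several equally sized images, e.g. noisy
    exposures of a static scene; the pixel count is unchanged."""
    n = len(images)
    h, w = len(images[0]), len(images[0][0])
    return [[sum(img[y][x] for img in images) / n for x in range(w)]
            for y in range(h)]

def subtract_images(a, b):
    """Pixel-wise difference a - b, e.g. to highlight what differs."""
    return [[pa - pb for pa, pb in zip(ra, rb)] for ra, rb in zip(a, b)]
```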

Illustrative example of subject matter classified in this place:

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Circuitry for compensating brightness variation in the scene in cameras or camera modules comprising electronic image sensors | |

Camera processing pipelines in cameras or camera modules comprising electronic image sensors |

Attention is drawn to the following places, which may be of interest for search:

Scaling of whole images or parts thereof based on super-resolution | |

Unsharp masking | |

Radar or analogous systems, specially adapted for mapping or imaging using synthetic aperture techniques | |

Spatial compounding in short-range sonar imaging systems | |

Confocal scanning microscopes | |

Computational photography systems, e.g. light-field imaging systems |

This place covers:

All machine learning-based image enhancement methods, e.g. using:

- artificial neural networks [ANN], convolutional neural networks [CNN], generative adversarial networks [GAN] or deep learning;

- decision trees;

- support-vector machines;

- regression analysis;

- Bayesian networks;

- Gaussian processes;

- genetic algorithms.

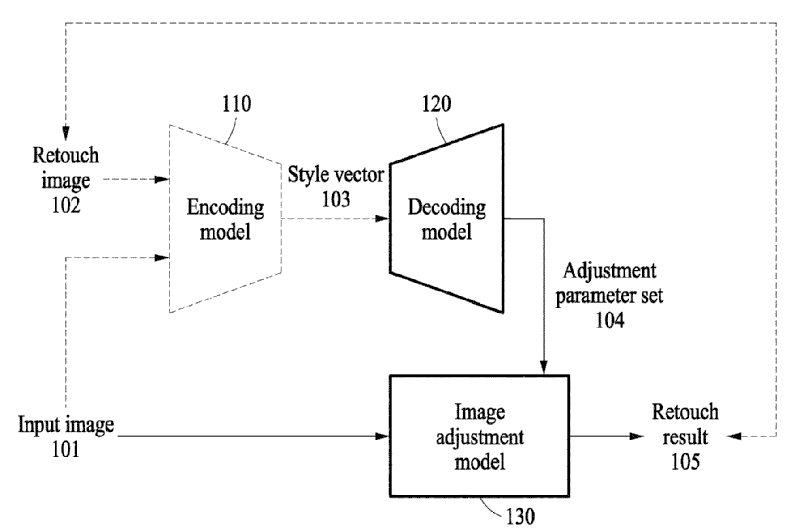

Illustrative example of subject matter classified in this place:

Attention is drawn to the following places, which may be of interest for search:

Neural networks | |

Learning methods | |

Machine learning | |

Arrangements for image or video recognition or understanding using pattern recognition or machine learning using neural networks | |

Arrangements for image or video recognition or understanding using pattern recognition or machine learning using probabilistic graphical models from image or video features, e.g. Markov models or Bayesian networks |

This place covers:

- Removing noise from images;

- Temporal denoising, spatio-temporal noise filtering;

- Removing pattern noise from images;

- Image smoothing;

- Image blurring, adding motion blur to images, adding blur to images;

- Edge-adaptive smoothing;

- Smoothing of depth map in stereo or range images;

- Antialiasing by image filtering;

- Denoising or smoothing using singular value decomposition [SVD].
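A median filter is a common way to remove impulse ("salt-and-pepper") noise, one of the kinds of denoising covered here. The following sketch is illustrative only. It assumes greyscale list-of-rows images and a square window that is clipped at the image border. The function name is hypothetical.

```python
from statistics import median

def median_filter(img, radius=1):
    """Replace each pixel by the median of its (2r+1)x(2r+1) neighbourhood;
    near the border the window is clipped to the image."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            window = [img[yy][xx]
                      for yy in range(max(0, y - radius), min(h, y + radius + 1))
                      for xx in range(max(0, x - radius), min(w, x + radius + 1))]
            row.append(median(window))
        out.append(row)
    return out
```

Unlike a mean filter, the median filter discards a single outlier completely instead of spreading it over its neighbours.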

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Camera processing pipelines for suppressing or minimising disturbance in the image signal generation | |

Noise processing in circuitry of solid-state image sensors [SSIS], e.g. detecting, correcting, reducing or removing noise |

Attention is drawn to the following places, which may be of interest for search:

Antialiasing during drawing of lines | |

Antialiasing during filling a planar surface by adding surface attributes, e.g. colour or texture | |

Noise filtering in image pre-processing for image or video recognition or understanding | |

Noise or error suppression in colour picture communication systems | |

Processing image signals for flicker reduction in stereoscopic or multi-view video systems |

This place covers:

- Deblurring;

- Removing motion blur from images;

- Point-spread function [PSF] model of blurring;

- Deconvolution;

- Modulation transfer function [MTF];

- Sharpening, crispening;

- Edge enhancement, edge boosting.
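Deconvolution with a known point-spread function can be illustrated by the Richardson-Lucy iteration, applied here to a 1D intensity profile under simplifying assumptions. A 2D image would use the same update with 2D convolutions. The zero-padded convolution, the initial estimate (the observation itself) and the iteration count are choices made for this sketch only. They are not prescribed by this group.

```python
def convolve_same(signal, kernel):
    """1D convolution with zero padding; output has the input's length.
    The kernel length is assumed to be odd."""
    r = len(kernel) // 2
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for k, weight in enumerate(kernel):
            j = i + r - k  # convolution index (kernel flipped)
            if 0 <= j < n:
                acc += weight * signal[j]
        out.append(acc)
    return out

def richardson_lucy(observed, psf, iterations=30, eps=1e-12):
    """Estimate the sharp signal from a blurred observation and a known,
    normalised PSF using multiplicative Richardson-Lucy updates."""
    estimate = list(observed)
    psf_mirror = psf[::-1]
    for _ in range(iterations):
        reblurred = convolve_same(estimate, psf)
        # Ratio of observed to predicted data; guard against division by ~0.
        ratio = [o / b if b > eps else 0.0 for o, b in zip(observed, reblurred)]
        correction = convolve_same(ratio, psf_mirror)
        estimate = [e * c for e, c in zip(estimate, correction)]
    return estimate
```

A spike blurred by the PSF [0.25, 0.5, 0.25] is progressively re-concentrated by the iterations. Because the updates are multiplicative, pixels that start at zero stay at zero.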

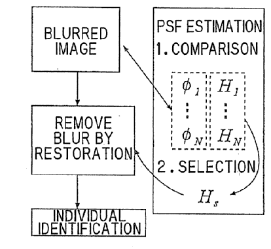

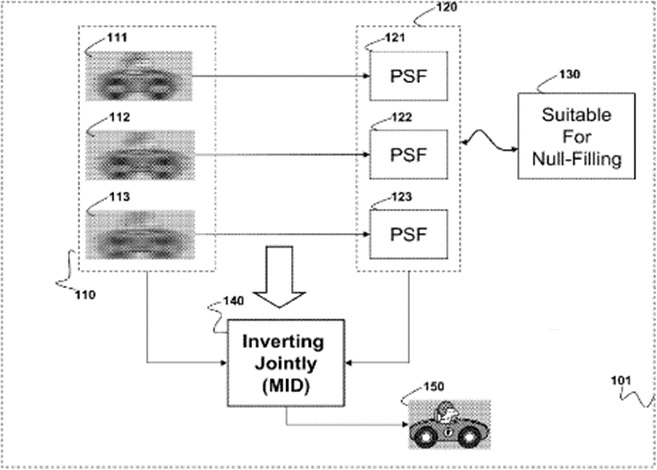

Illustrative examples of subject matter classified in this place:

1.

2.

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Vibration or motion blur correction for stable pick-up of the scene in cameras or camera modules comprising electronic image sensors |

Attention is drawn to the following places, which may be of interest for search:

Edge-driven scaling | |

Edge or detail enhancement for scanning, transmission or reproduction of documents or the like, e.g. facsimile transmission | |

Edge or detail enhancement in colour picture communication systems |

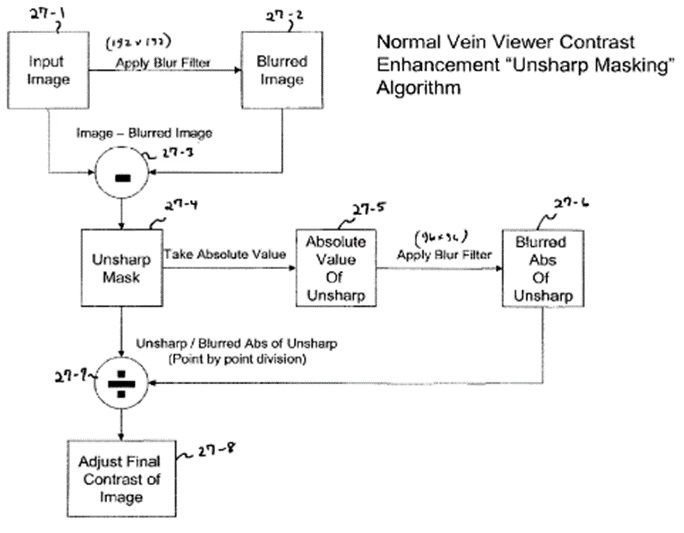

This place covers:

- Unsharp masking;

- Adding or subtracting a processed version of an image to or from the image.
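Unsharp masking adds to the image a scaled copy of the difference between the image and a blurred version of it. The following minimal sketch assumes a 3x3 box blur, an 8-bit value range and hypothetical function names.

```python
def box_blur(img):
    """3x3 mean filter; the window is clipped at the image border."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            vals = [img[yy][xx]
                    for yy in range(max(0, y - 1), min(h, y + 2))
                    for xx in range(max(0, x - 1), min(w, x + 2))]
            row.append(sum(vals) / len(vals))
        out.append(row)
    return out

def unsharp_mask(img, amount=1.0, lo=0, hi=255):
    """Add `amount` times (image - blurred image) back to the image and
    clip the result to the valid grey-level range."""
    blurred = box_blur(img)
    return [[min(hi, max(lo, round(p + amount * (p - b))))
             for p, b in zip(row, brow)]
            for row, brow in zip(img, blurred)]
```

On the step profile 0, 0, 100, 100 the result is 0, 0, 133, 100. The overshoot on the bright side of the step makes the edge look crisper. The matching undershoot on the dark side is clipped at 0.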

Illustrative example of subject matter classified in this place:

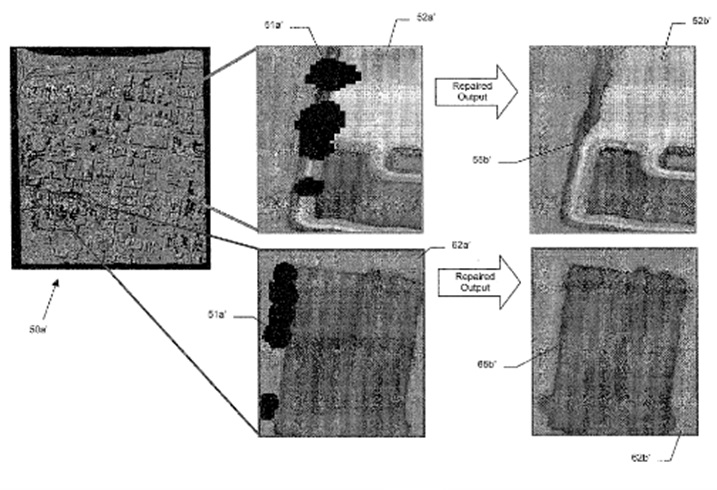

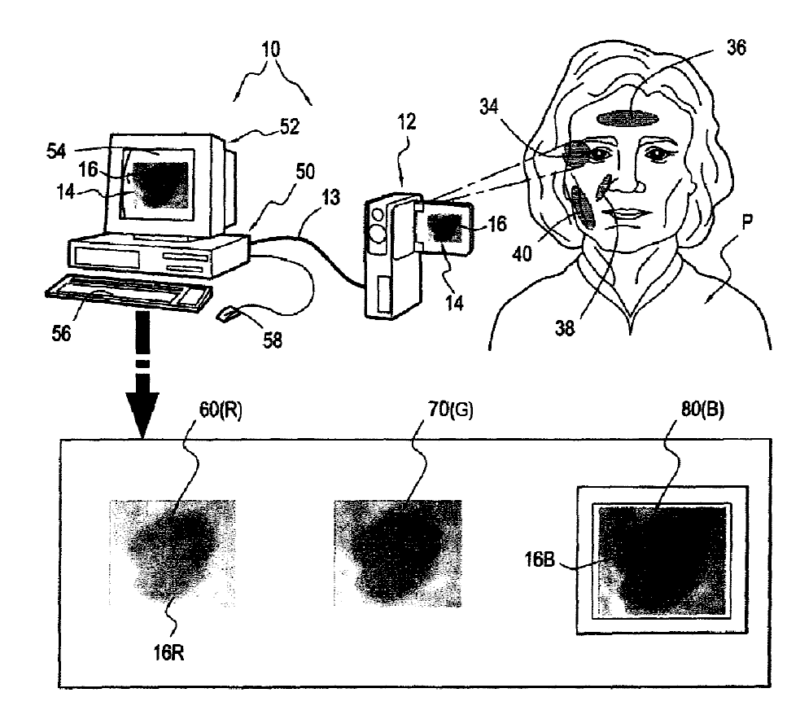

This place covers:

- Concealing defective pixels in images;

- Scratch removal;

- Inpainting by image filtering or by replacing patches within an image using a generated image or texture patch, or a patch retrieved from another source, e.g. image databases or the internet;

- Correcting red-eye defects.
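The simplest way to conceal defective pixels is to replace each flagged pixel by a robust statistic of its valid neighbours. This sketch assumes that a defect mask is already known (e.g. from sensor calibration) and uses the median of the non-defective 8-neighbours. It is an illustration, not a reference method, and the function name is hypothetical.

```python
from statistics import median

def conceal_defects(img, defect_mask):
    """Replace each pixel flagged in `defect_mask` by the median of its
    non-defective 8-neighbours; pixels without a valid neighbour are kept."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for y in range(h):
        for x in range(w):
            if not defect_mask[y][x]:
                continue
            good = [img[yy][xx]
                    for yy in range(max(0, y - 1), min(h, y + 2))
                    for xx in range(max(0, x - 1), min(w, x + 2))
                    if (yy, xx) != (y, x) and not defect_mask[yy][xx]]
            if good:
                out[y][x] = median(good)
    return out
```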

Illustrative example of subject matter classified in this place:

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Scratch removal adapted to be used in scanners, printers, photocopying machines, displays or similar devices | |

Picture signal generating by scanning motion picture films or slide opaques, e.g. for telecine | |

Noise processing, e.g. detecting, correcting, reducing or removing noise in circuitry of solid-state image sensors [SSIS] |

Attention is drawn to the following places, which may be of interest for search:

Segmentation or edge detection in image analysis | |

Analysis of geometric attributes in image analysis | |

Determining position or orientation of objects or cameras in image analysis | |

Determination of colour characteristics in image analysis | |

Texture generation as such | |

Recognition of eye characteristics | |

Modification of content of picture, e.g. retouching | |

Retouching colour images adapted to be used in scanners, printers, photocopying machines, displays or similar devices | |

Red-eye correction adapted to be used in scanners, printers, photocopying machines, displays or similar devices |

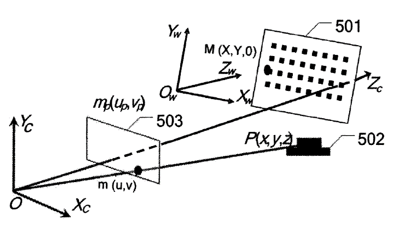

This place covers:

- Correcting lens distortions or aberrations;

- Correcting pincushion, barrel, trapezoidal or fish-eye distortions;

- Calibrating parameters of lens distortion;

- Reference grids, coordinate mapping.
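Correction of radial (barrel or pincushion) distortion is often based on a polynomial model of the radial distance. The sketch below assumes the two-coefficient form x_d = x_u(1 + k1·r² + k2·r⁴), applied to normalised image coordinates centred on the principal point. The coefficients k1 and k2 are hypothetical inputs. With this model, negative k1 gives barrel distortion and positive k1 gives pincushion distortion.

```python
def distort(xu, yu, k1, k2=0.0):
    """Map an undistorted normalised point to its distorted position
    using the polynomial radial model."""
    r2 = xu * xu + yu * yu
    f = 1 + k1 * r2 + k2 * r2 * r2
    return xu * f, yu * f

def undistort(xd, yd, k1, k2=0.0, iterations=20):
    """Invert the radial model by fixed-point iteration, since it has no
    closed-form inverse; converges for moderate distortion."""
    xu, yu = xd, yd
    for _ in range(iterations):
        r2 = xu * xu + yu * yu
        f = 1 + k1 * r2 + k2 * r2 * r2
        xu, yu = xd / f, yd / f
    return xu, yu
```

Coordinate mapping with a reference grid would apply `undistort` to every grid point. In practice k1 and k2 would come from a calibration step.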

Illustrative example of subject matter classified in this place:

Attention is drawn to the following places, which may be of interest for search:

Geometric image transformations in the plane of the image | |

Analysis of captured images to determine intrinsic or extrinsic camera parameters, i.e. camera calibration | |

Normalisation of the pattern dimension during image preprocessing for image or video recognition |

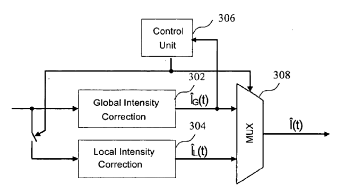

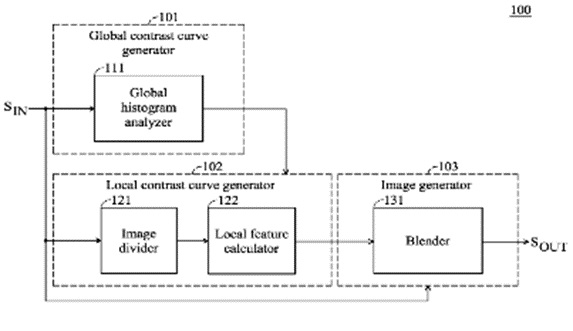

This place covers:

Contrast enhancement based on a combination of local and global properties.

Illustrative examples of subject matter classified in this place:

1.

2.

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Circuitry for compensating brightness variation in the scene by increasing the dynamic range of the image compared to the dynamic range of the electronic image sensors | |

Bracketing, i.e. taking a series of images with varying exposure conditions | |

Control of the dynamic range in circuitry of solid-state image sensors [SSIS] |

Attention is drawn to the following places, which may be of interest for search:

Equalising the characteristics of different image components of stereoscopic or multi-view image signals, e.g. their average brightness or colour balance |

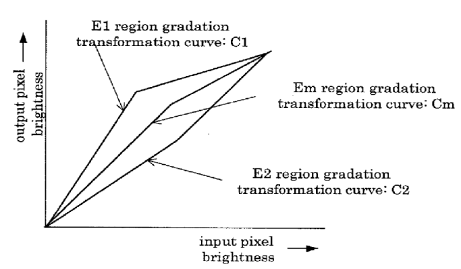

This place covers:

- Global contrast enhancement or tone mapping to increase the dynamic range of an image, based on properties of the whole image, e.g. global statistics or histograms;

- Contrast stretching, brightness equalisation;

- Gamma and gradation correction in general;

- Tone mapping for high dynamic range [HDR] imaging;

- Intensity mapping, e.g. using lookup tables [LUT].
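Global intensity mappings such as gamma correction and contrast stretching reduce to a per-pixel lookup. The lookup depends only on the grey level and, for stretching, on global image statistics. The following minimal sketch handles 8-bit greyscale list-of-rows images. The function names are illustrative.

```python
def gamma_lut(gamma, levels=256):
    """Lookup table mapping each grey level v to max * (v / max) ** gamma."""
    top = levels - 1
    return [round(top * (v / top) ** gamma) for v in range(levels)]

def apply_lut(img, lut):
    """Intensity mapping through a precomputed lookup table."""
    return [[lut[p] for p in row] for row in img]

def contrast_stretch(img, levels=256):
    """Linearly map the image's global min..max range onto 0..levels-1."""
    lo = min(min(row) for row in img)
    hi = max(max(row) for row in img)
    if hi == lo:  # constant image: leave unchanged
        return [row[:] for row in img]
    top = levels - 1
    return [[round((p - lo) * top / (hi - lo)) for p in row] for row in img]
```

A gamma below 1 brightens mid-tones while leaving 0 and 255 fixed.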

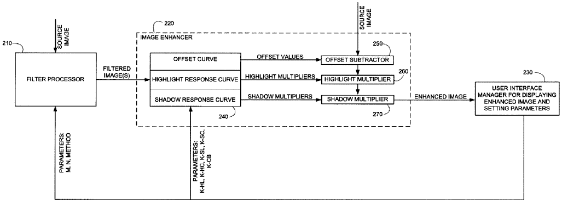

Illustrative example of subject matter classified in this place:

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Picture signal circuitry for controlling amplitude response in television systems | |

Gamma control in television systems | |

Circuitry for compensating brightness variation in the scene | |

Camera processing pipelines comprising electronic image sensors |

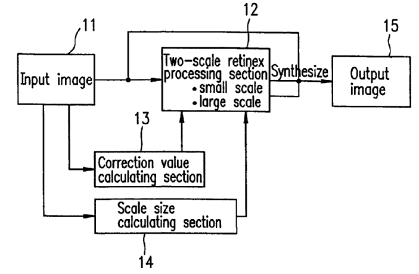

This place covers:

- Local contrast enhancement, e.g. locally adaptive filtering;

- Retinex processing.

Illustrative examples of subject matter classified in this place:

1.

2.

Attention is drawn to the following places, which may be of interest for search:

Unsharp masking |

This place covers:

- Analysis of motion, i.e. determining motion of an image subject, or of the camera having acquired the images; Tracking; Change detection, e.g. by block matching, feature-based methods, gradient-based methods, hierarchical or stochastic approaches, motion estimation from a sequence of stereo images.

- Analysis of texture, i.e. analysis of colour or intensity features which represent a perceived image texture, e.g. based on statistical or structural descriptions.

- Analysis of geometric attributes, e.g. area, perimeter, diameter, volume, convexity, concavity, centre of gravity, moments or symmetry.

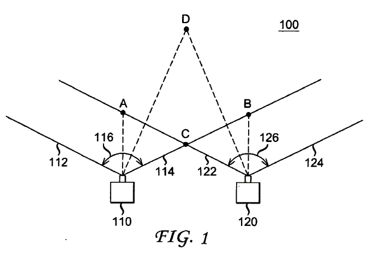

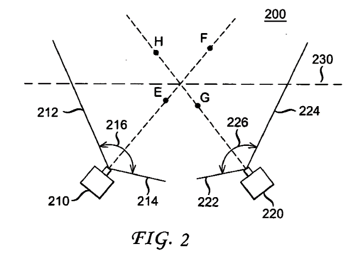

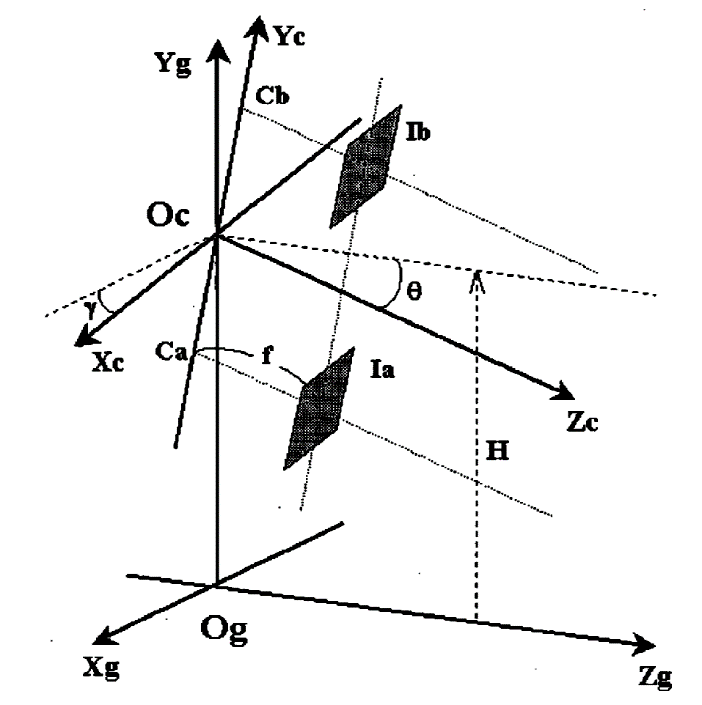

- Analysis of captured images to determine intrinsic or extrinsic camera parameters, i.e. camera calibration; Calibration of stereo cameras, e.g. determining the transformation between left and right camera coordinate systems

- Computational analysis of images to determine information, e.g. parameters or characteristics, therefrom

- Inspection of images, e.g. flaw detection; Industrial image inspection using e.g. a design-rule based approach or an image reference; Industrial image inspection checking presence/absence; Biomedical image inspection.

- Segmentation, i.e. partitioning an image into regions, or edge detection, i.e. detection of edge features in an image, e.g. involving probabilistic or graph-based approaches, deformable models, morphological operators, transform domain-based approaches or the use of more than two images.

- Motion-based segmentation.

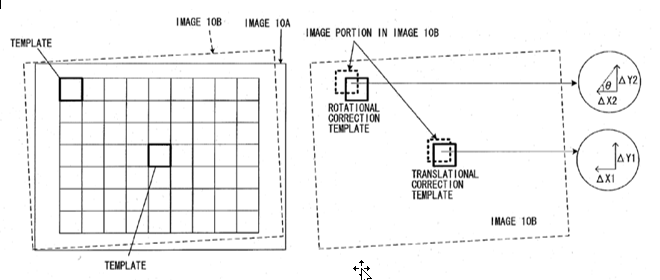

- Determination of transform parameters for the alignment of images, i.e. image registration, e.g. by correlation-, feature- or transform domain-based or statistical approaches.

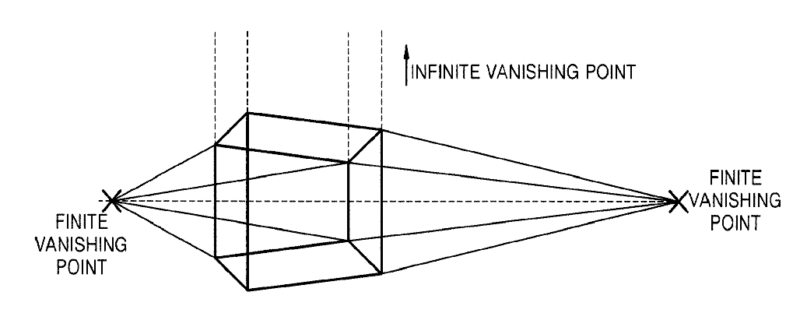

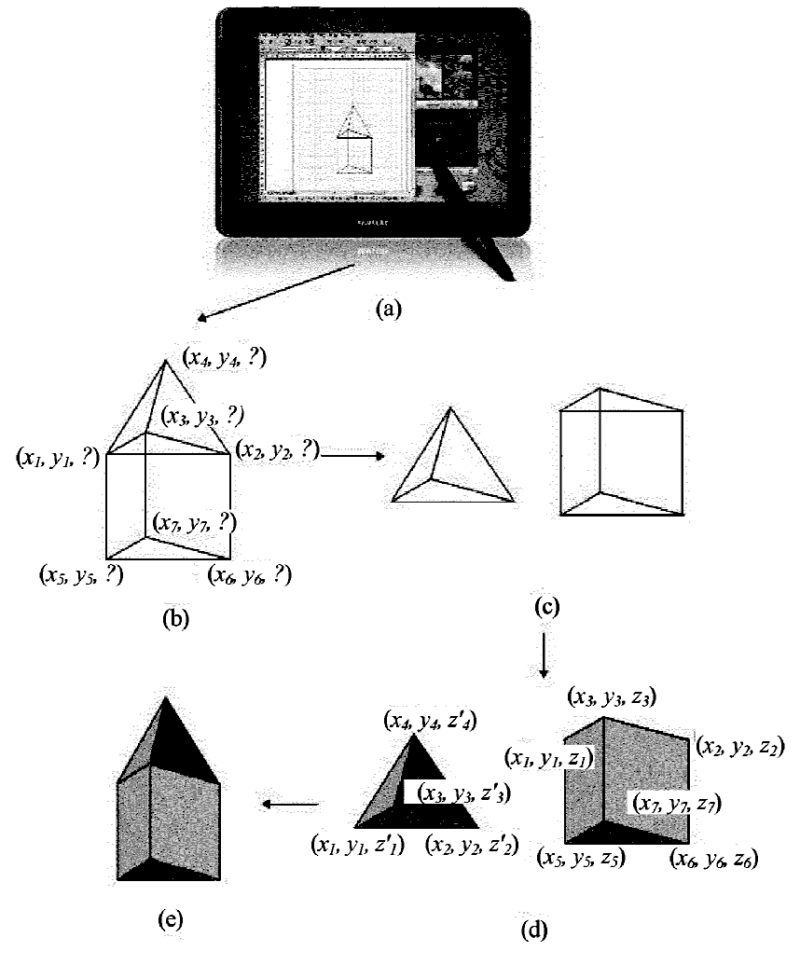

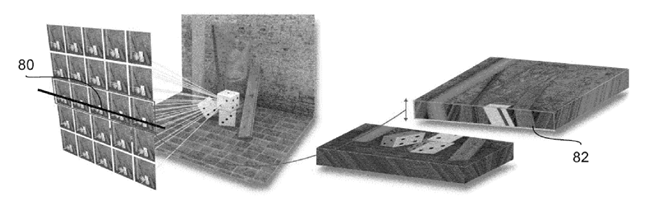

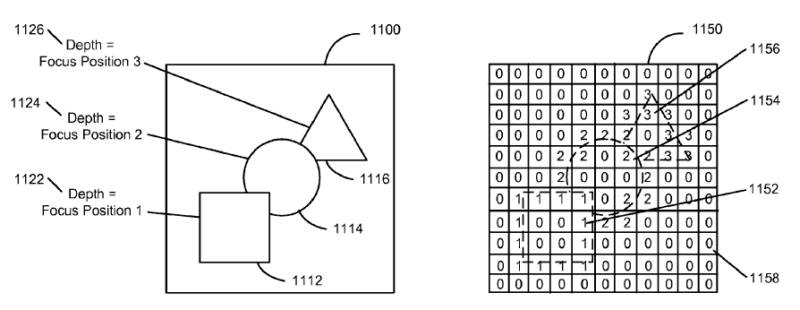

- Depth or shape recovery, i.e. determination of scene depth parameters by consideration of image characteristics; Depth or shape recovery from shading, specularities, texture, perspective effects, e.g. vanishing points, or line drawings; Depth or shape recovery from multiple images involving amongst others contours, focus, motion, multiple light sources, photometric stereo or stereo images.

- Determining the position or orientation of objects, e.g. by feature- or transform domain-based or statistical approaches.

- Determination of image colour characteristics.

G06T 7/00 covers the details of image analysis algorithms, insofar as it deals with the related image processing algorithms per se. Documents which merely mention the general use of image analysis, without details of the underlying image analysis algorithms, are classified in the application place. Where the image analysis is functionally linked and restricted to specific image acquisition or display hardware or processes, it is classified in the application place; otherwise, it is classified in G06T 7/00. Where the essential technical characteristics relate both to the image analysis detail and to its particular use or special adaptation, classification is made in both G06T 7/00 and the application place.

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Computed tomography | |

Signal processing for Nuclear Magnetic Resonance (NMR) imaging systems | |

ICT specially adapted for processing medical images, e.g. editing | |

Scanning, transmission or reproduction of documents or the like | |

Stereoscopic television systems | |

Methods or arrangements for coding, decoding, compressing or decompressing digital video signals | |

Transforming light or analogous information into electric information using solid-state image sensors |

Attention is drawn to the following places, which may be of interest for search:

Image acquisition | |

Processor architectures; Processor configuration, e.g. pipelining | |

Processing seismic data | |

Methods or arrangements for reading or recognising printed or written characters or for recognising patterns | |

Bioinformatics | |

Medical informatics |

Where the essential technical characteristics of the invention relate both to the image analysis detail and to its particular use or special adaptation, classification is made in both G06T 7/00 and the relevant application place in other subclasses.

G06T 7/00 focuses on image processing algorithms. Although such algorithms sometimes need to take into account characteristics of the underlying image acquisition apparatus, inventions relating to the image acquisition apparatus per se are outside the scope of this group.

Additional information should be classified using one or more of the Indexing Codes from the ranges of G06T 2200/00 or G06T 2207/00. Their use is obligatory.

The classification symbol G06T 7/00 is allocated to documents concerning:

- Architectures of image analysis systems, if not provided for elsewhere

- Extraction of MPEG7 descriptors, if not provided for elsewhere

In this place, the following terms or expressions are used with the meaning indicated:

Stereo | treatment of two images, e.g. from two cameras or a single camera that is displaced, in a pairwise manner |

Feature | a significant image region or pixel with certain characteristics, for example a feature point, landmark, edge, corner or blob, typically determined by image operators. |

Image analysis | the extraction of information from images through the use of image processing techniques acting upon image data, such as intensity, colour, motion and spatial frequency characteristics. |

In patent documents, the following abbreviations are often used:

AAM | Active appearance model |

ASM | Active shape model |

HMM | Hidden Markov Model |

LBP | Local Binary Pattern |

LPE | ligne de partage des eaux (French expression for watershed segmentation) |

RANSAC | Random Sample Consensus |

CAD | Computer-Aided Detection |

SLAM | Simultaneous Localization and Mapping |

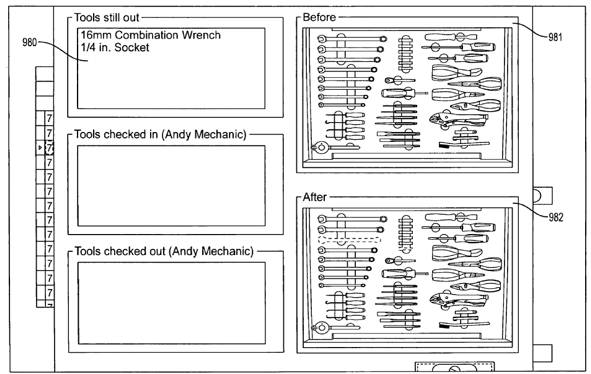

This place covers:

- Quality, conformity control

- Defects, abnormality, incompleteness

- Acceptability determination

- User interface for automated visual inspection

- Database-to-object inspection

- Image quality inspection

Attention is drawn to the following places, which may be of interest for search:

Determining position or orientation of objects | |

Validation, performance evaluation or active pattern learning techniques | |

Pattern matching criteria, e.g. proximity measures | |

Clustering techniques for pattern recognition | |

Classification techniques for pattern recognition | |

Image or video pattern matching | |

Pattern recognition or machine learning using clustering within arrangements for image or video recognition or understanding | |

Detection or correction of errors in pattern recognition | |

Evaluation of the quality of the acquired pattern in pattern recognition |

In relation to the remaining, function-oriented groups of G06T 7/00, this subgroup is an application-oriented group. Therefore, documents classified herein should also be classified in a function-oriented group under G06T 7/00 if they contain a considerable contribution to the respective function.

For image quality inspection, Indexing Code G06T 2207/30168 (Image quality inspection) should be added.

This place covers:

- Quality, conformity control in industrial context

- Defects, abnormality in industrial context

- Acceptability determination in industrial context

- User interfaces for automated visual inspection in industrial context

- "Teaching" (macros for inspection algorithms)

- Database-to-object inspection in industrial context

- Printing quality

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Investigating the presence of flaws or contamination on materials |

Attention is drawn to the following places, which may be of interest for search:

Contactless testing using optical radiation for printed circuits | |

Contactless testing using optical radiation for individual semiconductor devices | |

Photolithography mask inspection | |

Component placement (in PCB manufacturing) |

When classifying in this group, the use of the indexing scheme G06T 2207/30108 - G06T 2207/30164 is mandatory for additional information related to industrial image inspection.

For user interfaces for automated visual inspection in industrial context, Indexing Code G06T 2200/24 (involving graphical user interfaces [GUIs]) should be added.

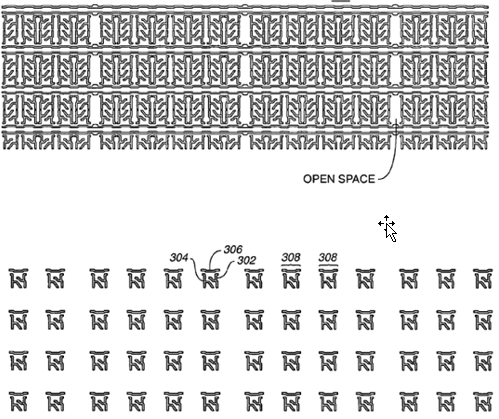

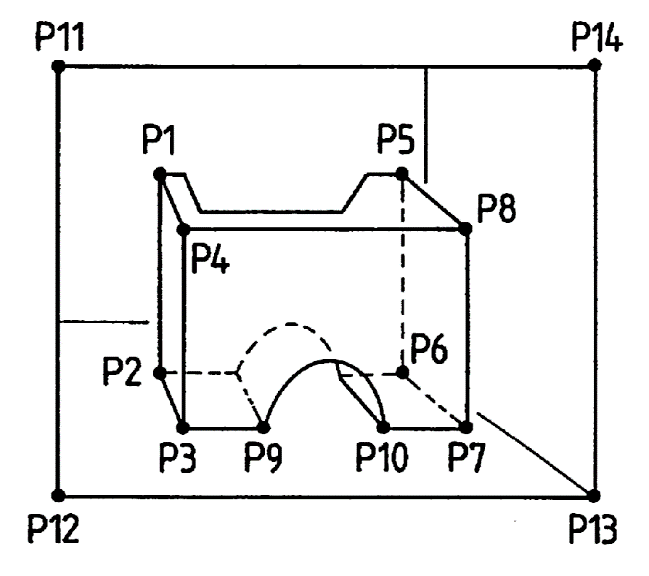

This place covers:

Verifying geometric design rules or known geometric parameters, e.g. width or spacing of structures, repetitive patterns

Illustrative example:

This place covers:

- Detecting the absence of an item that should be there

- Detecting incompleteness

Illustrative examples:

This place covers:

- Industrial image inspection where an image is compared to a reference image, standard image, ground truth image, gold standard: either by image comparison at image level, e.g. by image correlation, or by comparison of parameters extracted from the images

- Reference images originated from an image acquisition apparatus or derived from computer-aided design data

Illustrative examples:

Attention is drawn to the following places, which may be of interest for search:

Determining representative reference patterns or generating dictionaries | |

Determining representative reference patterns or generating dictionaries for image or video recognition or understanding |

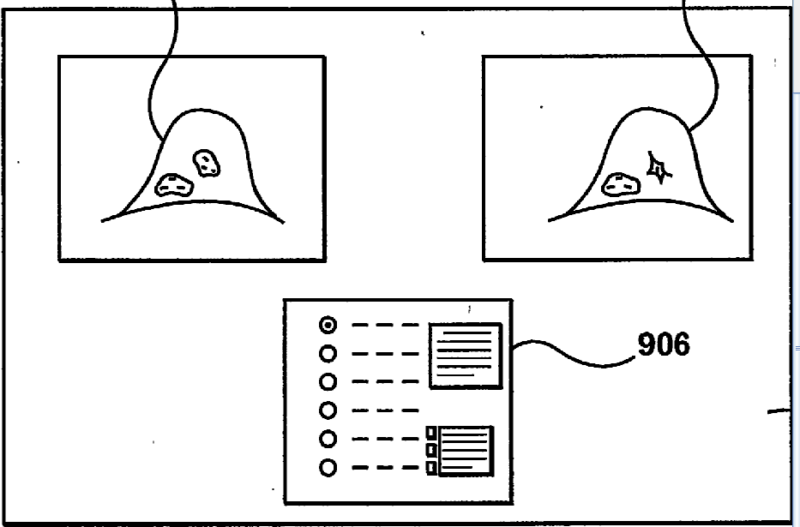

This place covers:

Defects, abnormality in biomedical context

Computer-aided detection [CAD]

Detecting, measuring, scoring, grading of

- Disease, pathology, lesions

- Cancer, tumor, tumour, malignancy, nodule

- Emphysema

- Microcalcifications

- Polyps

- Scar, non-viable tissue

- Osteoporosis, fracture risk prediction, arthritis

- Alzheimer disease

- Scoring wrinkles, ageing

- Tissue abnormalities in microscopic images, e.g. inflammation, deformations

- Grading of living plants

Illustrative examples:

Characterising skin imperfections

Evaluating spine balance

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Apparatus for radiation diagnostics | |

Diagnosis using ultrasound | |

Signal processing for Nuclear Magnetic Resonance (NMR) imaging systems | |

Ultrasound imaging | |

ICT specially adapted for processing medical images, e.g. editing |

Attention is drawn to the following places, which may be of interest for search:

Recognising microscopic objects | |

Bioinformatics | |

Medical informatics |

When classifying in this group, the use of the indexing scheme G06T 2207/30004 - G06T 2207/30104 is mandatory for additional information related to biomedical image processing.

In this place, the following terms or expressions are used with the meaning indicated:

Biomedical | biological or medical |

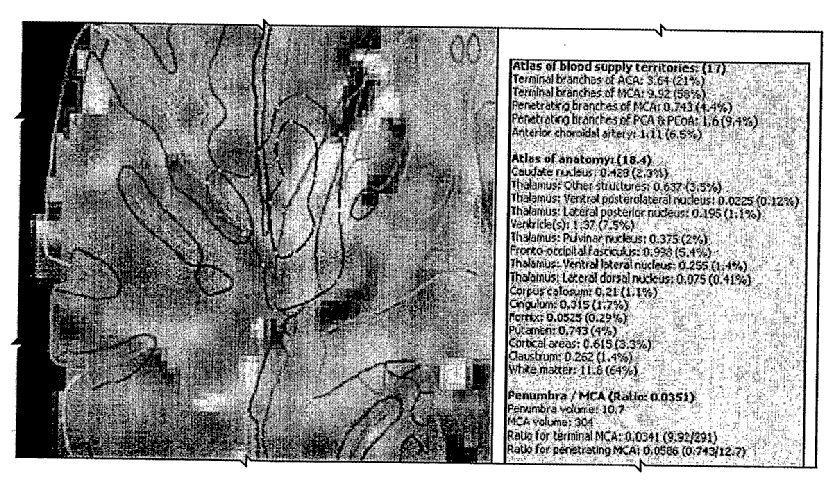

This place covers:

- Comparison to a reference image, standard image, atlas, etc.

- Reference image taken from different patient or patients, or reference image taken from spatially different anatomical regions of the same patient, e.g. comparison of left and right body parts.

Illustrative example:

Superposition of a perfusion image and the brain atlas images in contour representation

Attention is drawn to the following places, which may be of interest for search:

Determining representative reference patterns or generating dictionaries | |

Determining representative reference patterns or generating dictionaries for image or video recognition or understanding |

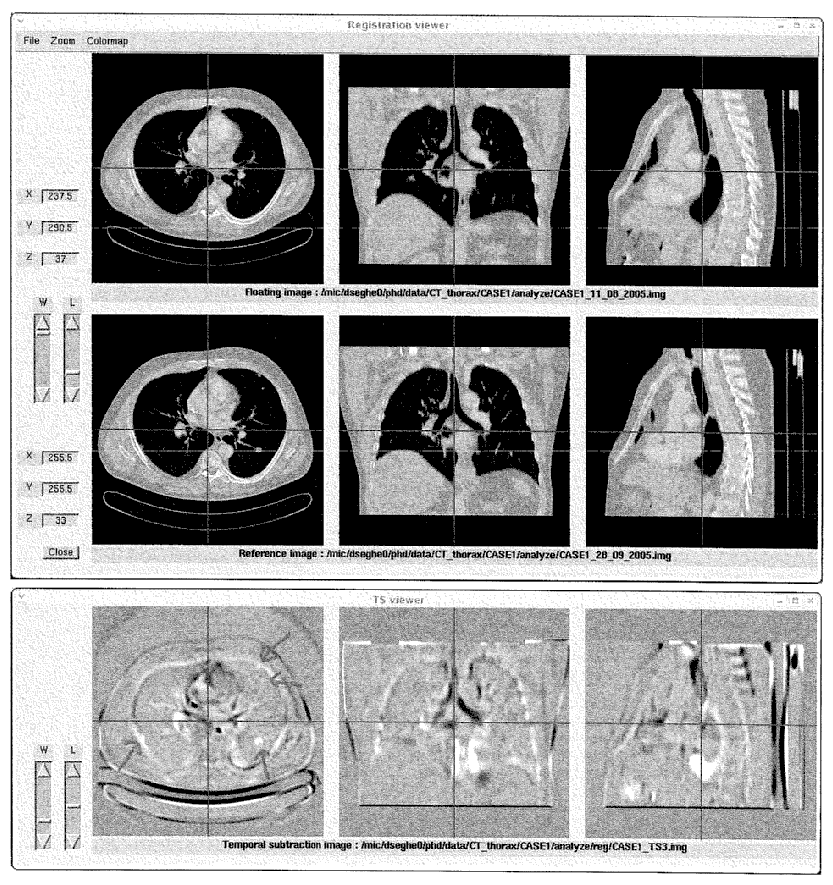

This place covers:

- Follow-up studies, comparison of images from different points of time, temporal difference images, temporal subtraction images, biomedical change detection.

- Reference image taken from the same patient and the same anatomical region.

- Subtraction angiography for abnormality detection.

- Assessment of dynamic contrast enhancement, wash-in/wash-out for abnormality detection.

- Plethysmography based on image analysis

Illustrative example:

Floating image, reference image and temporal subtraction image

Attention is drawn to the following places, which may be of interest for search:

Analysis of motion, e.g. change detection in general | |

Pattern matching criteria, e.g. proximity measures | |

Temporal feature extraction for image or video recognition or understanding | |

Image or video pattern matching |

For plethysmography based on image analysis, Indexing Code G06T 2207/30076 should be added.

This place covers:

- Segmentation, i.e. partitioning an image into regions

- Edge detection, i.e. detection of edge features in an image

This place does not cover:

Motion-based segmentation |

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Separation of touching or overlapping patterns for pattern recognition, e.g. character segmentation for optical character recognition (OCR) | |

Extraction of image features/characteristics for pattern recognition | |

Detecting partial patterns, e.g. edges or contours, or configurations, e.g. loops, corners, strokes, intersections, for pattern recognition |

Attention is drawn to the following places, which may be of interest for search:

Analysis of texture | |

Determination of colour characteristics | |

Clustering techniques in pattern recognition | |

Classification techniques in pattern recognition | |

Feature extraction related to colour, for pattern recognition | |

Pattern recognition or machine learning using clustering within arrangements for image or video recognition or understanding |

This place covers:

Methods evaluating properties or features of image regions to determine the segmentation result, e.g.:

- Thresholding, fixed threshold binarisation, multiple and histogram-derived thresholds

- Region growing, splitting and merging

- Colour-based segmentation

- Texture-based segmentation
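Otsu's method is a typical example of a histogram-derived threshold used for region-property-based segmentation. The following sketch assumes 8-bit greyscale list-of-rows images. It maximises an unnormalised between-class variance, which leaves the optimal threshold unchanged. The function names are hypothetical.

```python
def otsu_threshold(img, levels=256):
    """Return the grey level t maximising the between-class variance when
    pixels <= t form one class and pixels > t the other (Otsu's method)."""
    hist = [0] * levels
    for row in img:
        for p in row:
            hist[p] += 1
    total = sum(hist)
    sum_all = sum(i * c for i, c in enumerate(hist))
    best_t, best_var = 0, -1.0
    w0 = sum0 = 0
    for t in range(levels):
        w0 += hist[t]            # pixel count of class 0 (levels <= t)
        sum0 += t * hist[t]
        w1 = total - w0          # pixel count of class 1 (levels > t)
        if w0 == 0 or w1 == 0:
            continue
        mu0, mu1 = sum0 / w0, (sum_all - sum0) / w1
        var_between = w0 * w1 * (mu0 - mu1) ** 2
        if var_between > best_var:
            best_t, best_var = t, var_between
    return best_t

def binarise(img, t):
    """Fixed-threshold binarisation: 1 for pixels above t, else 0."""
    return [[int(p > t) for p in row] for row in img]
```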

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Quantising the analogue image signal, e.g. histogram thresholding for discrimination between background and foreground patterns, for pattern recognition | |

Extraction of features or characteristics of the image related to colour, for pattern recognition | |

Cutting or merging image elements, e.g. region growing, watershed, clustering-based techniques, for pattern recognition |

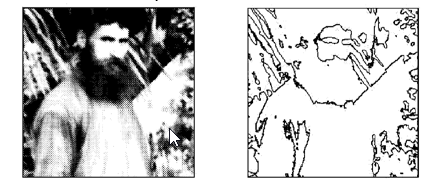

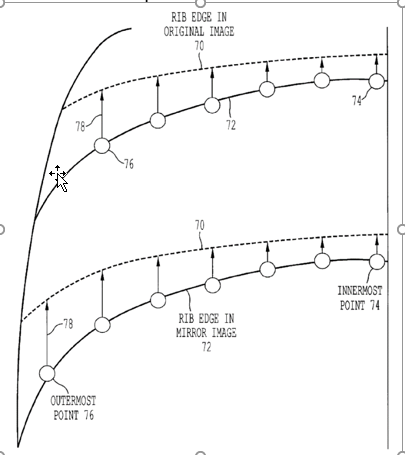

This place covers:

Methods evaluating (closed) contours, edges or outlines of image portions to determine the segmentation result, e.g.:

- Contour-based segmentation

- Detection of straight edge-lines (e.g. buildings or roads from aerial images) which partition an image into regions

- Finding and linking edge candidate points or segments (edgels)

Illustrative example:

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Detecting partial patterns, e.g. edges or contours, or configurations, e.g. loops, corners, strokes, intersections, for pattern recognition | |

Extraction of features or characteristics of the image by coding the contour of a pattern, for pattern recognition |

This place covers:

In contrast to G06T 7/12, this group covers documents pertaining purely to edge detection without partitioning an image into regions, e.g.:

- Derivative methods (first-order or gradient, second-order, e.g. Laplacian)

- Zero crossing

- Corner detection
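The following sketch illustrates a first-order (gradient) derivative method using the Sobel kernels. Leaving border pixels at zero is a simplification of this sketch. The function name is hypothetical.

```python
import math

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]

def sobel_magnitude(img):
    """Gradient magnitude from the Sobel operator; border pixels stay 0."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = sum(SOBEL_X[j][i] * img[y + j - 1][x + i - 1]
                     for j in range(3) for i in range(3))
            gy = sum(SOBEL_Y[j][i] * img[y + j - 1][x + i - 1]
                     for j in range(3) for i in range(3))
            out[y][x] = math.hypot(gx, gy)
    return out
```

For a vertical step edge the magnitude is zero in the flat regions and large at the columns on either side of the step. An edge map would then be obtained by thresholding this magnitude or by taking the zero crossings of a second-order operator.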

Illustrative example:

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Detecting partial patterns, e.g. edges or contours, or configurations, e.g. loops, corners, strokes, intersections, for pattern recognition | |

Extraction of features or characteristics of the image by coding the contour of a pattern, for pattern recognition |

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Quantising the analogue image signal, e.g. histogram thresholding for discrimination between background and foreground patterns, for pattern recognition |

This place covers:

- Statistical/Probabilistic methods for segmentation

Illustrative example:

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Classification techniques based on a parametric (probabilistic) model, for pattern recognition | |

Markov models or related models, Markov random fields or networks embedding Markov models for pattern recognition | |

Detecting partial patterns or configurations by analysing connectivity relationship of elements of the pattern, for pattern recognition | |

Pattern recognition or machine learning using classification within arrangements for image or video recognition or understanding |

This place covers:

- Model-based segmentation (in particular when applied to biomedical images)

- Methods based on active shape models

- Methods based on active appearance models

- Methods based on active contours, active surfaces, snakes or deformable templates

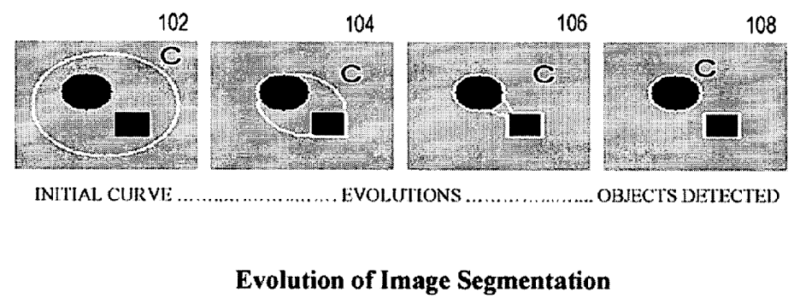

Illustrative example:

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Pattern recognition techniques involving a deformation of the sample or reference pattern or elastic matching | |

Matching of contours based on a local optimisation criterion, e.g. snakes or active contours, for pattern recognition | |

Matching based on shape statistics, e.g. active shape models, for pattern recognition | |

Matching based on statistics of image patches, e.g. active appearance models, for pattern recognition |

For Active shape model [ASM], Indexing Code G06T 2207/20124 should be added.

For Active appearance model [AAM], Indexing Code G06T 2207/20121 should be added.

For Active contour; Active surface; Snakes, Indexing Code G06T 2207/20116 should be added.

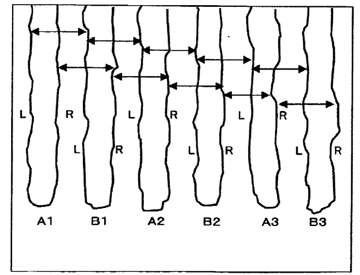

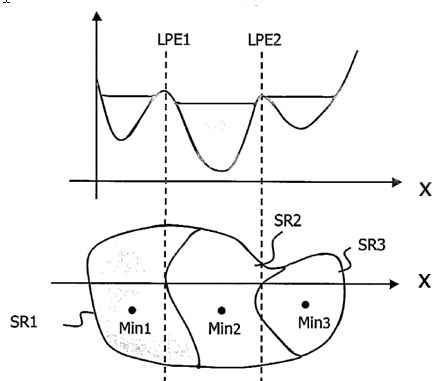

This place covers:

- Morphological methods

- Watersheds

- Toboggan-based methods

Illustrative examples:

Figure 1. The 1D profile I(x), representing the intensity of a dark object of interest on a light background, forms three basins, which correspond to the local intensity minima Min1, Min2 and Min3 of the segmented region. The three basins give rise to two watershed lines, LPE1 and LPE2, which divide the segmented region into three sub-regions SR1, SR2 and SR3.

Figure 2. Toboggan-based object segmentation

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Smoothing or thinning the pattern, e.g. by morphological operators, for pattern recognition | |

Combinations of pre-processing functions using a local operator, for pattern recognition | |

Cutting or merging image elements, e.g. region growing, watershed, for pattern recognition |

For Morphological image processing, an Indexing Code from the range of G06T 2207/20036 - G06T 2207/20044 should be added.

This place covers:

- Graph-cut methods

Illustrative example:

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Feature extraction by graphical representation, e.g. directed attributed graphs, for pattern recognition |

Attention is drawn to the following places, which may be of interest for search:

Hierarchical clustering techniques, for pattern recognition | |

Non-hierarchical partitioning techniques based on graph theory, for pattern recognition | |

Graph matching, for pattern recognition |

This place covers:

- Fourier-, FFT-, Wavelet-based methods

- Gabor-, Laplace-transform-based methods

- Discrete cosine transform [DCT]-based methods

- Walsh-Hadamard transform [WHT]-based methods

- Hough transform
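The Hough transform for straight lines is a voting scheme over the normal parameterisation rho = x·cos(theta) + y·sin(theta). In the following sketch, whole-degree theta bins and integer rho bins are assumptions chosen to keep the example short. The function name is hypothetical.

```python
import math
from collections import Counter

def hough_lines(points, theta_steps=180):
    """Accumulate votes in (theta index, rho) space for lines through the
    given (x, y) points; theta spans [0, 180) degrees in theta_steps bins."""
    acc = Counter()
    for x, y in points:
        for t in range(theta_steps):
            theta = math.radians(t * 180 / theta_steps)
            rho = round(x * math.cos(theta) + y * math.sin(theta))
            acc[(t, rho)] += 1
    return acc
```

For 100 collinear points on the horizontal line y = 5, the strongest cell is theta = 90 degrees, rho = 5, with one vote per point. Detected lines correspond to accumulator peaks.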

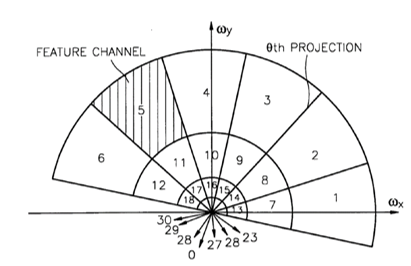

Illustrative example:

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Feature extraction by deriving mathematical or geometrical properties, frequency domain transformations, for pattern recognition | |

Detecting partial patterns using transforms (e.g. Hough transform), for pattern recognition | |

Feature extraction by deriving mathematical or geometrical properties, scale-space transformation, e.g. wavelet transform, for pattern recognition |

For Transform domain processing, an Indexing Code from the range of G06T 2207/20052 - G06T 2207/20064 should be added.

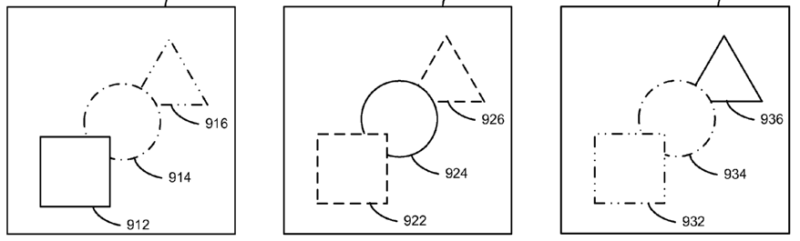

This place covers:

- Using information from multiple images to determine segmentation result

- Segmentation based on several images taken under varying illumination, focus, exposure, etc.

- Segmentation of a video frame involving several image frames of the video sequence, e.g. neighbouring frames

- Temporal and spatio-temporal segmentation, if not based on motion information

- Segmentation using several (neighbouring) slices of a tomographic data set (CT, MRI, PET, etc.), propagation of segmentation results between neighbouring slices

- Hierarchical segmentation methods (including wavelet-based schemes), if final segmentation result is derived from (partial) results at different resolution levels

- Multispectral image segmentation using information from different spectral bands (beyond the visible spectrum)

Illustrative example:

Attention is drawn to the following places, which may be of interest for search:

Motion-based segmentation |

This place covers:

Image segmentation or edge detection methods based on

- edge growing

- edge linking

- edge following

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Detecting partial patterns by analysis of the connectivity relationships of elements of the pattern, e.g. by edge linking, connected component or neighbouring slice analysis, for pattern recognition |

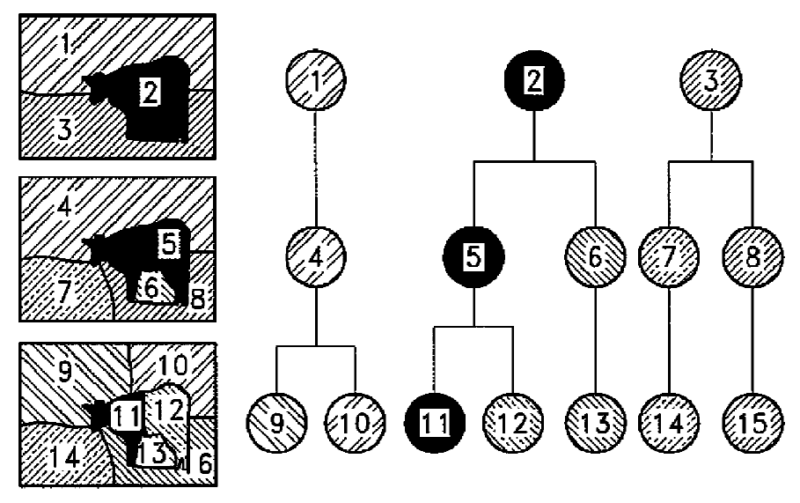

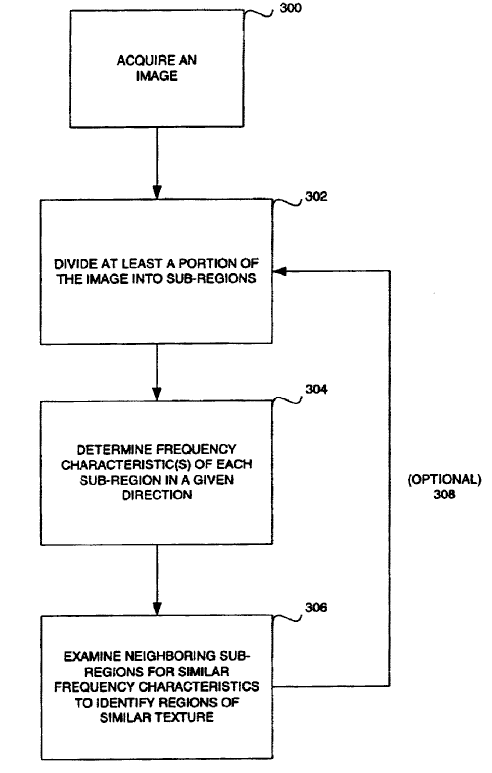

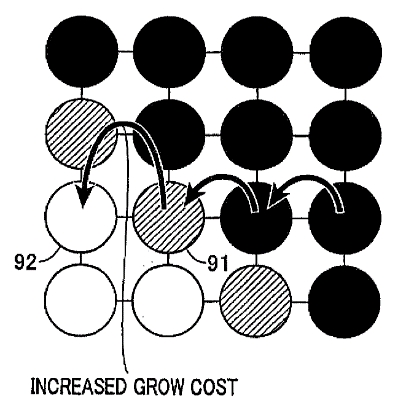

This place covers:

Image segmentation methods based on

- region growing; region merging

- split-and-merge

- connected component labelling

Illustrative example:

Figure 1. Region growing method that accumulates costs along a pixel path; as soon as the accumulated cost between neighbouring pixels (91, 92) exceeds a threshold, growing stops.
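The cost-based stopping rule of Figure 1 can be sketched as below. This is a minimal illustration under stated assumptions, not the method of the illustrated document. It assumes a grey-level image stored as a list of rows and 4-connectivity. It also assumes that the cost of a step is the absolute grey-level difference between neighbouring pixels. `grow_region` and `max_cost` are hypothetical names.

```python
import heapq


def grow_region(image, seed, max_cost):
    """Grow a region from `seed` (row, col) over a 2D grey-level image.

    The cost of a step between 4-neighbours is their absolute grey-level
    difference; costs are accumulated along each pixel path, and a pixel
    joins the region only while its cheapest accumulated cost stays at or
    below `max_cost` (hypothetical threshold parameter).
    """
    rows, cols = len(image), len(image[0])
    best_cost = {seed: 0}
    frontier = [(0, seed)]  # min-heap ordered by accumulated cost
    region = set()
    while frontier:
        cost, (r, c) = heapq.heappop(frontier)
        if (r, c) in region:
            continue  # stale heap entry: pixel already accepted more cheaply
        region.add((r, c))
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if not (0 <= nr < rows and 0 <= nc < cols) or (nr, nc) in region:
                continue
            new_cost = cost + abs(image[nr][nc] - image[r][c])
            # Growing stops along this path once the threshold is exceeded.
            if new_cost <= max_cost and new_cost < best_cost.get((nr, nc), float("inf")):
                best_cost[(nr, nc)] = new_cost
                heapq.heappush(frontier, (new_cost, (nr, nc)))
    return region


image = [
    [10, 10, 50],
    [10, 12, 50],
    [10, 11, 50],
]
print(sorted(grow_region(image, (0, 0), max_cost=5)))
# [(0, 0), (0, 1), (1, 0), (1, 1), (2, 0), (2, 1)]
```

A priority queue is used so that each pixel is reached first along its cheapest path. The right-hand column is excluded because every path into it crosses a grey-level jump of about 40.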

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Detecting partial patterns by analysis of the connectivity relationships of elements of the pattern, e.g. by edge linking, connected component or neighbouring slice analysis, for pattern recognition | |

Segmentation of touching or overlapping patterns, cutting or merging image elements, e.g. region growing, watersheds, for pattern recognition |

This place covers:

Image segmentation or edge detection methods based on a separation of foreground, i.e. relevant parts, and background, i.e. non-relevant parts of an image.

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Quantising the analogue image signal, e.g. histogram thresholding for discrimination between background and foreground patterns, for pattern recognition |
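One common way to separate foreground from background is histogram thresholding. It can be sketched with Otsu's criterion, which picks the grey level that maximises the between-class variance of the two resulting pixel classes. This is one illustrative choice, not a technique prescribed by this place. The sketch assumes non-negative integer grey levels below 256 and a bright foreground. `otsu_threshold` and `foreground_mask` are hypothetical names.

```python
def otsu_threshold(pixels, levels=256):
    """Return the grey level t maximising the between-class variance when
    pixels <= t form one class and pixels > t the other."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))
    best_t, best_var = 0, -1.0
    w0 = 0    # number of pixels with level <= t
    sum0 = 0  # sum of the levels <= t
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue  # one class empty: no split at this level
        mean0 = sum0 / w0
        mean1 = (sum_all - sum0) / w1
        between = w0 * w1 * (mean0 - mean1) ** 2  # proportional to the variance
        if between > best_var:
            best_t, best_var = t, between
    return best_t


def foreground_mask(image, threshold):
    """Label pixels brighter than the threshold as foreground (True)."""
    return [[p > threshold for p in row] for row in image]


image = [[12, 14, 200], [11, 210, 205], [13, 12, 198]]
t = otsu_threshold([p for row in image for p in row])
print(t)  # 14
print(foreground_mask(image, t))
```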

This place covers:

- Image analysis algorithms for determining motion of an image subject, or of the camera having acquired the images. Determination of scene movement between image frames, e.g. change detection

- Tracking

- Motion capture

- Determining camera ego-motion (add the Indexing Code G06T 2207/30244: Camera pose)

- Medical motion analysis, e.g. of the left ventricle of the heart (add the Indexing Code G06T 2207/30048: Heart; Cardiac)

- Trajectory representation (add the Indexing Code G06T 2207/30241: Trajectory)

- Stabilisation of video sequences (see also G06T 7/30)

This place does not cover:

Motion estimation for coding, decoding, compressing or decompressing digital video signals |

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Scene recognition | |

Recognising video content | |

Recognising scenes under surveillance | |

Recognising scenes perceived from a vehicle | |

Recognising scenes inside a vehicle | |

Gesture recognition | |

Burglar, theft or intruder alarms using cameras and image comparison |

Attention is drawn to the following places, which may be of interest for search:

Determination of transform parameters for the alignment of images, i.e. image registration | |

Depth or shape recovery from motion | |

Determining position or orientation of objects | |

Video games | |

Target following using TV type tracking systems | |

Light barriers | |

Data indexing of video sequences | |

Surveillance systems using closed-circuit television systems (CCTV) |

For camera pose, Indexing Code G06T 2207/30244 should be added. For heart, cardiac, Indexing Code G06T 2207/30048 should be added. For trajectory details, Indexing Code G06T 2207/30241 should be added. For sports video or sports images, Indexing Code G06T 2207/30221 should be added.

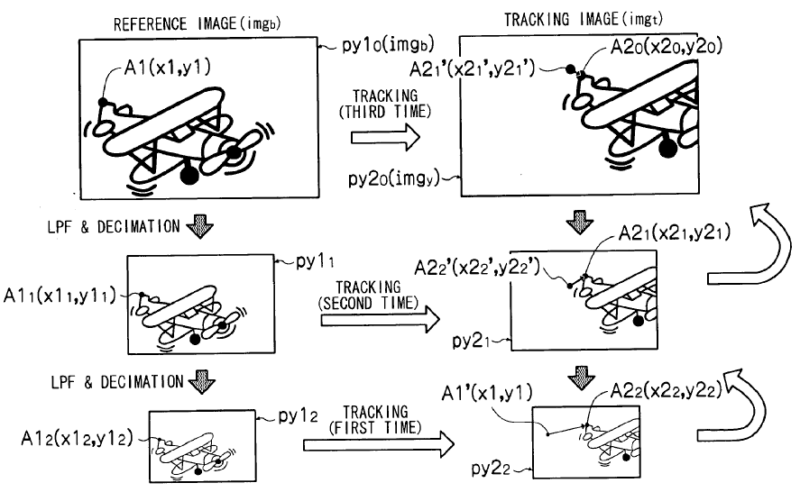

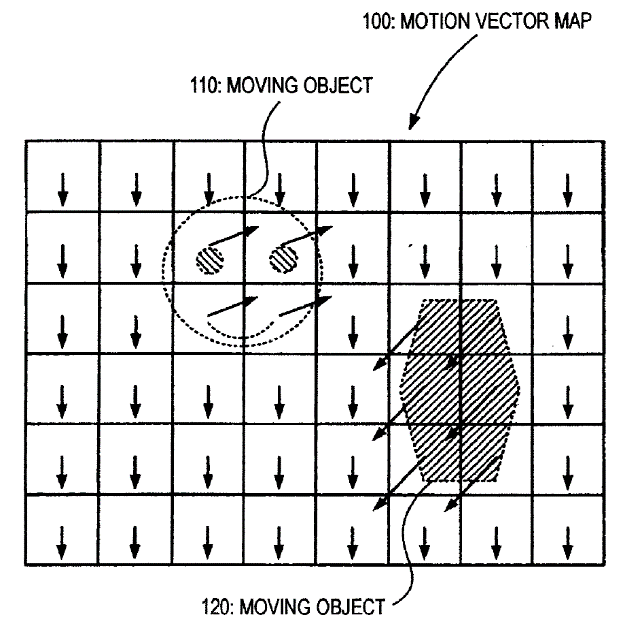

This place covers:

Illustrative example:

This place does not cover:

Multi-resolution motion estimation or hierarchical motion estimation for coding, decoding, compressing or decompressing digital video signals |

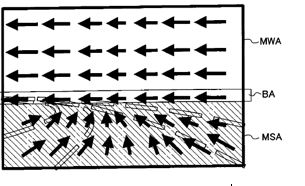

This place covers:

- Figure-ground segmentation by detection of moving object(s) from dense motion representation

- Partitioning an image into regions of homogeneous 2D (apparent) motion

- Based on analysis of motion vector field or motion flow

- Grouping from optical flow

Illustrative example:

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Retrieval of video data using motion, e.g. object motion | |

Segmenting video sequences, e.g. scene change analysis | |

Scene change analysis |

Attention is drawn to the following places, which may be of interest for search:

Segmentation; Edge detection |

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Movement estimation for television pictures | |

Predictive coding in television systems using temporal prediction with motion detection |

Attention is drawn to the following places, which may be of interest for search:

Image coding using predictors | |

Use of motion vectors for image compression, coding using predictors, video coding |

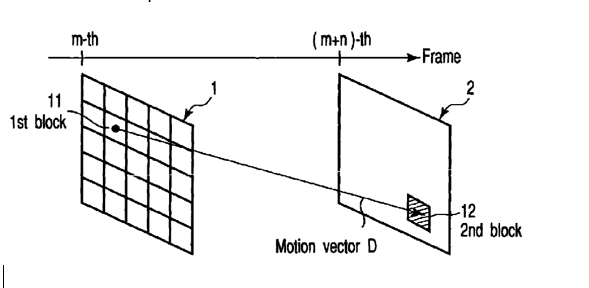

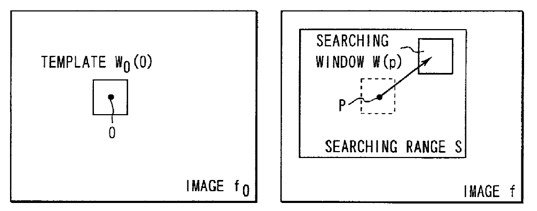

This place covers:

Full, exhaustive, brute force search

Illustrative example:

Figure 1. A motion vector between the m-th frame (1) and the (m+n)-th frame (2) is detected. First, the image data of the m-th frame 1 is divided into a plurality of first blocks 11, and the first blocks 11 are extracted sequentially. A second block 12 of the same size and shape as the extracted first block 11 is extracted from the image data of the (m+n)-th frame 2. The absolute difference between corresponding pixels of the extracted first block 11 and the extracted second block 12 is computed for every pixel.
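The exhaustive search of Figure 1 can be sketched as follows. Every candidate displacement within a square search window is tested, and the displacement with the smallest sum of absolute differences (SAD) is kept as the motion vector. The frame representation (lists of rows), the search `radius` and the function names are assumptions made for illustration.

```python
def block_sad(frame_m, frame_mn, top, left, dy, dx, size):
    """Sum of absolute differences between the first block (in frame m) and
    the second block displaced by (dy, dx) in frame m+n."""
    return sum(
        abs(frame_m[top + y][left + x] - frame_mn[top + dy + y][left + dx + x])
        for y in range(size)
        for x in range(size)
    )


def full_search(frame_m, frame_mn, top, left, size, radius):
    """Test every displacement in [-radius, radius]^2 and return the
    (dy, dx) with minimum SAD together with that SAD."""
    height, width = len(frame_mn), len(frame_mn[0])
    best_vector, best_cost = None, None
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            # Skip candidate second blocks that fall outside frame m+n.
            if not (0 <= top + dy and top + dy + size <= height
                    and 0 <= left + dx and left + dx + size <= width):
                continue
            cost = block_sad(frame_m, frame_mn, top, left, dy, dx, size)
            if best_cost is None or cost < best_cost:
                best_vector, best_cost = (dy, dx), cost
    return best_vector, best_cost


# Frame m+n shows the content of frame m moved down 1 row and right 2 columns.
frame_m = [[10 * y + x for x in range(8)] for y in range(8)]
frame_mn = [[10 * (y - 1) + (x - 2) for x in range(8)] for y in range(8)]
print(full_search(frame_m, frame_mn, top=2, left=2, size=3, radius=2))  # ((1, 2), 0)
```

The cost is (2r + 1)² SAD evaluations per block, which is what the fast searches of the following group try to reduce.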

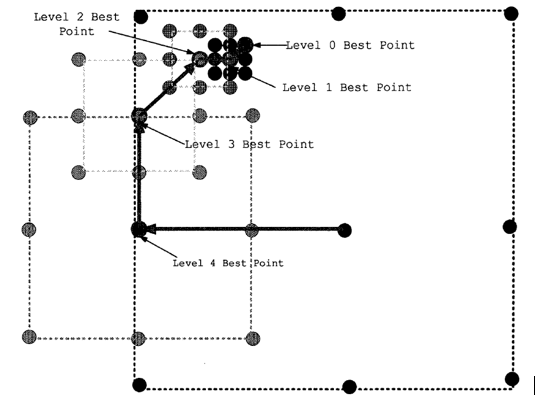

This place covers:

- Non-full, layered structure, fast, adaptive, efficient search

- Three-Step, New Three-Step, Four-Step Search

- Simple and Efficient Search

- Binary Search

- Spiral Search

- Two-Dimensional Logarithmic Search

- Cross Search Algorithm

- Adaptive Rood Pattern Search

- Orthogonal Search

- One-at-a-Time Algorithm

- Diamond Search

- Hierarchical search

- Spatial dependency check

Illustrative example of a hierarchical search:

For Hierarchical, coarse-to-fine, multiscale or multiresolution image processing; Pyramid transform, Indexing Code G06T 2207/20016 should be added.
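Of the non-full searches listed above, the three-step search is one of the simplest to sketch. Starting from the zero vector, it evaluates the centre and its eight neighbours at a step of 4 pixels. It then re-centres on the best candidate and halves the step, stopping after step 1. It evaluates at most 25 positions instead of the 225 of a full ±7 search, at the risk of stopping in a local minimum of the SAD surface. The frame layout, the SAD criterion and the function names are illustrative assumptions.

```python
def block_sad(frame_m, frame_mn, top, left, dy, dx, size):
    """Sum of absolute differences between a block of frame m and the block
    displaced by (dy, dx) in frame m+n."""
    return sum(
        abs(frame_m[top + y][left + x] - frame_mn[top + dy + y][left + dx + x])
        for y in range(size)
        for x in range(size)
    )


def three_step_search(frame_m, frame_mn, top, left, size, step=4):
    """Coarse-to-fine search: test the centre and its 8 neighbours at the
    current step, move to the best one, halve the step, stop after step 1."""
    height, width = len(frame_mn), len(frame_mn[0])

    def inside(dy, dx):
        return (0 <= top + dy and top + dy + size <= height
                and 0 <= left + dx and left + dx + size <= width)

    centre = (0, 0)
    best_cost = block_sad(frame_m, frame_mn, top, left, 0, 0, size)
    while step >= 1:
        best = centre
        for sy in (-step, 0, step):
            for sx in (-step, 0, step):
                cand = (centre[0] + sy, centre[1] + sx)
                if cand == centre or not inside(*cand):
                    continue
                cost = block_sad(frame_m, frame_mn, top, left, cand[0], cand[1], size)
                if cost < best_cost:
                    best, best_cost = cand, cost
        centre = best
        step //= 2
    return centre, best_cost


# Frame m+n shows the content of frame m moved down 4 rows and left 4 columns.
frame_m = [[10 * y + x for x in range(16)] for y in range(16)]
frame_mn = [[10 * (y - 4) + (x + 4) for x in range(16)] for y in range(16)]
print(three_step_search(frame_m, frame_mn, top=6, left=6, size=4))  # ((4, -4), 0)
```

Here the true vector lies on the first search grid and is found exactly. Vectors elsewhere may be missed, which is the accuracy-for-speed trade-off these methods accept.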

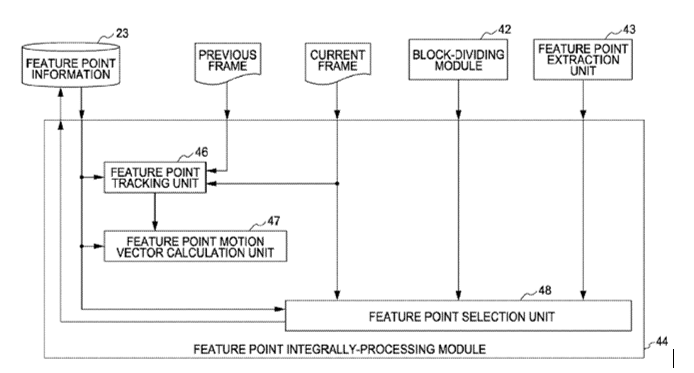

This place covers:

- Feature points, e.g. determined by image operators; also matching of point descriptors, feature vectors; significant segments, blobs

- Feature, landmark, marker, fiducial, edge, corner, etc.
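Matching of point descriptors is often done by nearest-neighbour search in descriptor space. The sketch below adds a ratio test, which keeps a match only if the nearest neighbour is clearly closer than the second nearest. This is one common heuristic, not a requirement of this place. Descriptors are assumed to be equal-length numeric tuples, and `ratio=0.8` is an arbitrary illustrative value. `match_descriptors` is a hypothetical name.

```python
import math


def match_descriptors(desc_a, desc_b, ratio=0.8):
    """Return (i, j) pairs matching desc_a[i] to its nearest neighbour
    desc_b[j], keeping only unambiguous matches (ratio test)."""
    if len(desc_b) < 2:
        return []  # the ratio test needs a second-nearest neighbour
    matches = []
    for i, d in enumerate(desc_a):
        ranked = sorted((math.dist(d, e), j) for j, e in enumerate(desc_b))
        (d1, j1), (d2, _) = ranked[0], ranked[1]
        if d1 < ratio * d2:
            matches.append((i, j1))
    return matches


points_a = [(0.0, 0.0), (10.0, 10.0), (5.0, 0.0)]
points_b = [(10.5, 10.0), (0.2, 0.0), (9.8, 0.0)]
print(match_descriptors(points_a, points_b))  # [(0, 1), (1, 0)]
```

The third descriptor is rejected because it lies equally close to two candidates, so its match would be ambiguous.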

Illustrative example:

In this place, the following terms or expressions are used with the meaning indicated:

Feature | a significant image region or pixel with certain characteristics |

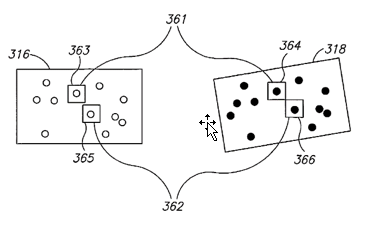

This place covers:

- Involving correlation of "true to reality" image patches, templates, regions of interest

- Correlation used for 1) finding features in each image or for 2) finding regions of interest from one image in the other images
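Correlation of an image patch with another image can be sketched with zero-mean normalised cross-correlation (NCC). NCC is insensitive to gain and offset changes between the images. This is one illustrative correlation measure. The exhaustive scan over all window positions and the function names are assumptions.

```python
import math


def ncc(patch, image, top, left):
    """Zero-mean normalised cross-correlation between `patch` and the
    equally sized window of `image` whose top-left corner is (top, left)."""
    h, w = len(patch), len(patch[0])
    a = [patch[y][x] for y in range(h) for x in range(w)]
    b = [image[top + y][left + x] for y in range(h) for x in range(w)]
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    da = [v - mean_a for v in a]
    db = [v - mean_b for v in b]
    denom = math.sqrt(sum(v * v for v in da) * sum(v * v for v in db))
    if denom == 0:
        return 0.0  # flat patch or window: correlation undefined
    return sum(p * q for p, q in zip(da, db)) / denom


def locate_patch(patch, image):
    """Scan every window position; return ((top, left), score) of the best NCC."""
    h, w = len(patch), len(patch[0])
    scores = [
        (ncc(patch, image, top, left), (top, left))
        for top in range(len(image) - h + 1)
        for left in range(len(image[0]) - w + 1)
    ]
    score, position = max(scores)
    return position, score


image = [[0] * 6 for _ in range(6)]
image[2][3], image[2][4], image[3][3], image[3][4] = 9, 1, 4, 7
# Same structure with different gain and offset (2 * value + 5).
patch = [[23, 7], [13, 19]]
print(locate_patch(patch, image))
```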

Illustrative example:

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Face recognition using comparisons between temporally consecutive images |

Attention is drawn to the following places, which may be of interest for search:

Analysis of motion using block-matching (where blocks are arbitrarily defined by a grid, not as a significant image region) | |

Image matching for pattern recognition or image matching in general |

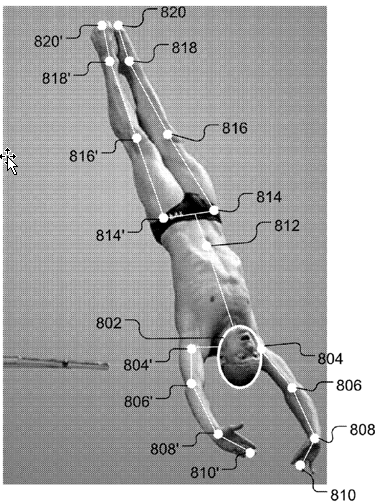

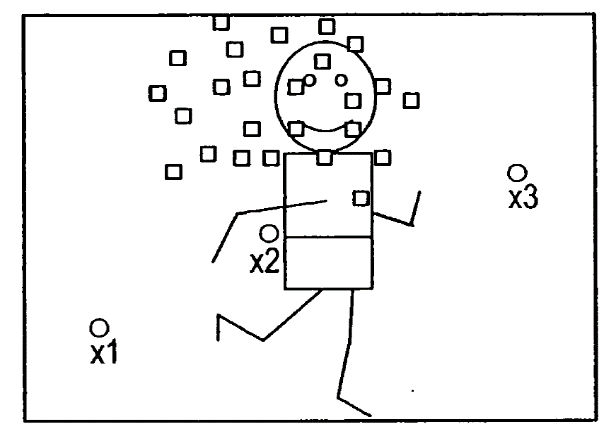

This place covers:

- Involving matching of intermediary 2D or 3D models extracted from each image before motion analysis, e.g. skeletons, stick models, ellipses, geometric models of all kinds, polygon models, active appearance and shape models, as opposed to reference images or patches

- Model matching used for 1) finding features in each image or for 2) finding structure of interest from one image in the other images

Illustrative example:

For each frame of a captured video sequence, a basic human body model 800 for diving competitions is superimposed on the frame and adjusted to provide an accurate representation of the diver's positioning in that frame, the sequence of adjusted models describing the entire motion sequence of the diver.

Attention is drawn to the following places, which may be of interest for search:

Matching of contours in general or matching of contours for pattern recognition | |

Syntactic or structural pattern recognition, e.g. symbolic string recognition |

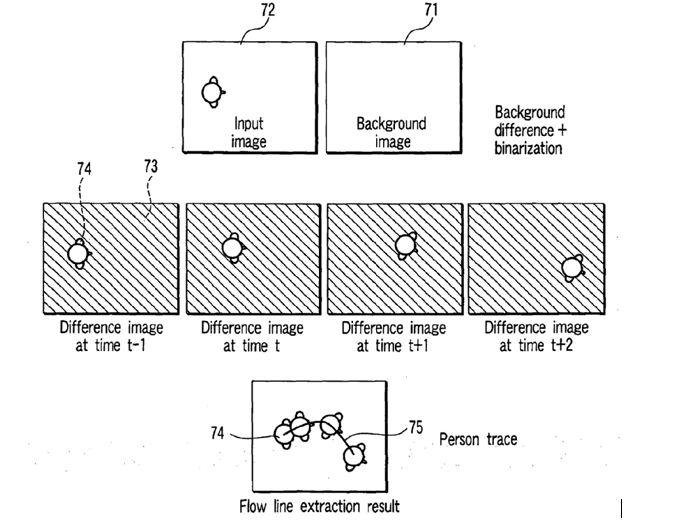

This place covers:

- Subtraction of previous image

- Subtraction of background image, background maintenance, background models therefor

- Also involving ratio or more general comparison of corresponding pixels in successive frames
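Background subtraction with a maintained background model can be sketched with a running average. Each frame is compared pixel by pixel with the model, and pixels whose absolute difference exceeds a threshold are marked as changed. The model is then blended towards the new frame. The learning rate `alpha`, the `threshold` and the function names are illustrative assumptions. Subtraction of the previous image is the special case in which the reference is simply the last frame.

```python
def difference_mask(reference, frame, threshold=25):
    """True where |frame - reference| exceeds the threshold (changed pixels)."""
    return [
        [abs(f - r) > threshold for r, f in zip(ref_row, frame_row)]
        for ref_row, frame_row in zip(reference, frame)
    ]


def update_background(background, frame, alpha=0.05):
    """Running-average background maintenance: B <- (1 - alpha) * B + alpha * F."""
    return [
        [(1 - alpha) * b + alpha * f for b, f in zip(bg_row, frame_row)]
        for bg_row, frame_row in zip(background, frame)
    ]


background = [[10, 10], [10, 10]]
frame = [[10, 200], [12, 10]]
print(difference_mask(background, frame))                # [[False, True], [False, False]]
print(update_background(background, frame, alpha=0.5))  # [[10.0, 105.0], [11.0, 10.0]]
```

A small `alpha` lets the model follow slow illumination changes without absorbing a moving object too quickly.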

Illustrative example:

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Burglar, theft or intruder alarms using cameras and image comparison |

Attention is drawn to the following places, which may be of interest for search:

Change detection in biomedical image inspection |

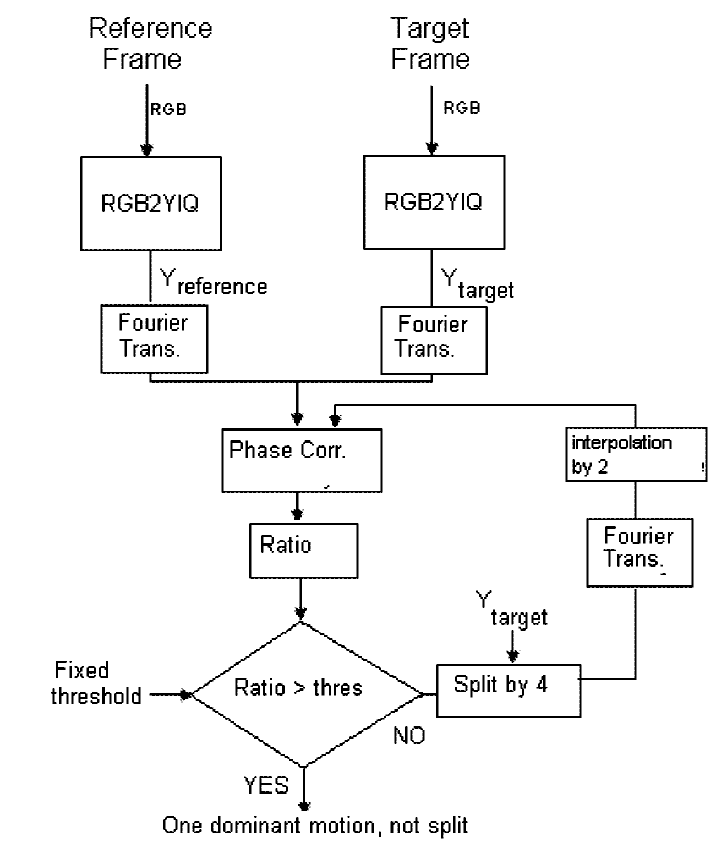

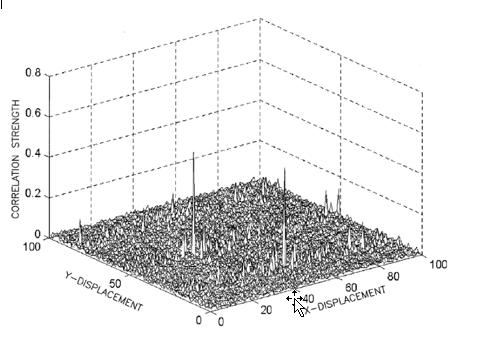

This place covers:

- Fourier, DCT, Wavelet, Gabor, etc.

- Using phase correlation

Illustrative examples:

Figure 1.

Figure 2.
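Phase correlation, listed above, estimates a translation from the normalised cross-power spectrum. If g is f circularly shifted by (dy, dx), the inverse transform of G·F*/|G·F*| is an impulse at (dy, dx). The sketch uses a naive O(N⁴) DFT written with `cmath` so that it runs without extra libraries; a practical implementation would use an FFT. Circular (wrap-around) shifts, small test images and the function names are assumptions.

```python
import cmath


def dft2(a, inverse=False):
    """Naive 2D discrete Fourier transform (the inverse includes 1/(H*W))."""
    h, w = len(a), len(a[0])
    sign = 1 if inverse else -1
    out = [[0j] * w for _ in range(h)]
    for u in range(h):
        for v in range(w):
            s = 0j
            for y in range(h):
                for x in range(w):
                    s += a[y][x] * cmath.exp(sign * 2j * cmath.pi * (u * y / h + v * x / w))
            out[u][v] = s / (h * w) if inverse else s
    return out


def phase_correlation(f, g, eps=1e-12):
    """Return the circular shift (dy, dx) such that g[y][x] ~ f[y - dy][x - dx]."""
    big_f, big_g = dft2(f), dft2(g)
    h, w = len(f), len(f[0])
    cross = [[0j] * w for _ in range(h)]
    for u in range(h):
        for v in range(w):
            c = big_g[u][v] * big_f[u][v].conjugate()
            # Keep only the phase of the cross-power spectrum.
            cross[u][v] = c / abs(c) if abs(c) > eps else 0j
    surface = dft2(cross, inverse=True)
    peak = max((surface[y][x].real, (y, x)) for y in range(h) for x in range(w))
    return peak[1]


f = [[0.0] * 8 for _ in range(8)]
f[0][0], f[1][3] = 5.0, 2.0
g = [[f[(y - 2) % 8][(x - 5) % 8] for x in range(8)] for y in range(8)]
print(phase_correlation(f, g))  # (2, 5)
```

Discarding the magnitude makes the correlation surface a sharp peak whose position does not depend on image contrast.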

Attention is drawn to the following places, which may be of interest for search:

Feature extraction by deriving mathematical or geometrical properties, frequency domain transformations, for pattern recognition | |

Detecting partial patterns using Hough transform for pattern recognition | |

Feature extraction by deriving mathematical or geometrical properties, scale-space transformation, e.g. wavelet transform, for pattern recognition |

For Transform domain processing, an Indexing Code from the range of G06T 2207/20052 - G06T 2207/20064 should be added.

This place covers:

Optic (optical) flow involving the calculation of spatial and temporal gradients
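A gradient-based estimate in the style of Lucas and Kanade can be sketched as follows. Assuming brightness constancy, each pixel gives one equation Ix·u + Iy·v + It = 0 in the spatial gradients Ix, Iy and the temporal gradient It. The flow (u, v) of a small window is then the least-squares solution of those equations, with u along columns and v along rows. Central differences, the window choice and the function name are illustrative assumptions.

```python
def lucas_kanade(frame1, frame2, window):
    """Least-squares flow (u, v) for the pixels (y, x) in `window`.

    Spatial gradients use central differences on frame1; the temporal
    gradient is frame2 - frame1. Returns None when the 2x2 system is
    singular (e.g. the aperture problem on a straight edge).
    """
    sxx = sxy = syy = sxt = syt = 0.0
    for y, x in window:
        ix = (frame1[y][x + 1] - frame1[y][x - 1]) / 2.0
        iy = (frame1[y + 1][x] - frame1[y - 1][x]) / 2.0
        it = frame2[y][x] - frame1[y][x]
        sxx += ix * ix
        sxy += ix * iy
        syy += iy * iy
        sxt += ix * it
        syt += iy * it
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-9:
        return None
    # Solve [[sxx, sxy], [sxy, syy]] @ [u, v] = -[sxt, syt].
    u = (-syy * sxt + sxy * syt) / det
    v = (sxy * sxt - sxx * syt) / det
    return u, v


# Smooth test image I = x^2 + y^2, moved by u = 0.2, v = 0.1 pixels.
frame1 = [[float(x * x + y * y) for x in range(10)] for y in range(10)]
frame2 = [[(x - 0.2) ** 2 + (y - 0.1) ** 2 for x in range(10)] for y in range(10)]
window = [(y, x) for y in range(4, 7) for x in range(4, 7)]
print(lucas_kanade(frame1, frame2, window))
```

The printed estimate is about (0.198, 0.098). The small bias comes from linearising the brightness-constancy equation, which ignores the second-order term of the true motion.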

Illustrative example:

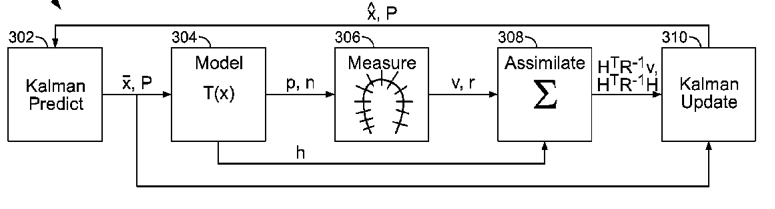

This place covers:

- Bayesian methods

- HMM

- Particle filtering

Illustrative examples:

Figure 1.

Figure 2. Kalman filter-based tracking of 3D heart model
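The Kalman filter named in Figure 2 can be sketched in its simplest tracking form. The sketch uses a one-dimensional constant-velocity model whose state is (position, velocity). The state is predicted forward each frame and corrected with a measured position, e.g. an object centroid. The noise levels `q` and `r`, the large initial covariance and the one-dimensional state are illustrative assumptions. A 3D heart-model tracker as in Figure 2 would use a much larger state vector.

```python
def kalman_track(measurements, dt=1.0, q=1e-4, r=1.0):
    """Constant-velocity Kalman filter on 1D position measurements.

    State x = [position, velocity]; transition F = [[1, dt], [0, 1]];
    measurement H = [1, 0]; process noise Q = q * I; measurement noise r.
    Returns the corrected (position, velocity) after each measurement.
    """
    x = [measurements[0], 0.0]
    p = [[1000.0, 0.0], [0.0, 1000.0]]  # large initial uncertainty
    estimates = []
    for z in measurements:
        # Predict: x <- F x, P <- F P F^T + Q.
        x = [x[0] + dt * x[1], x[1]]
        p00 = p[0][0] + dt * (p[0][1] + p[1][0]) + dt * dt * p[1][1] + q
        p01 = p[0][1] + dt * p[1][1]
        p10 = p[1][0] + dt * p[1][1]
        p11 = p[1][1] + q
        # Update with the measured position z: K = P H^T / (H P H^T + r).
        s = p00 + r
        k0, k1 = p00 / s, p10 / s
        innovation = z - x[0]
        x = [x[0] + k0 * innovation, x[1] + k1 * innovation]
        # P <- (I - K H) P.
        p = [[(1 - k0) * p00, (1 - k0) * p01],
             [p10 - k1 * p00, p11 - k1 * p01]]
        estimates.append((x[0], x[1]))
    return estimates


# Noise-free object moving one unit per frame.
track = kalman_track([float(t) for t in range(30)])
print(track[-1])  # close to (29.0, 1.0)
```

Bayesian trackers such as particle filters follow the same predict-then-correct cycle but represent the state distribution by samples instead of a mean and covariance.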

Whenever possible, documents classified herein should also be classified in one of the other subgroups of G06T 7/20.

This place covers:

Illustrative example:

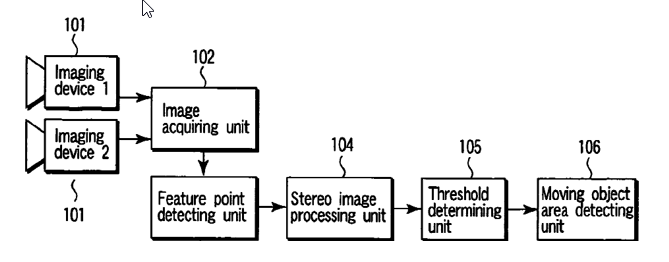

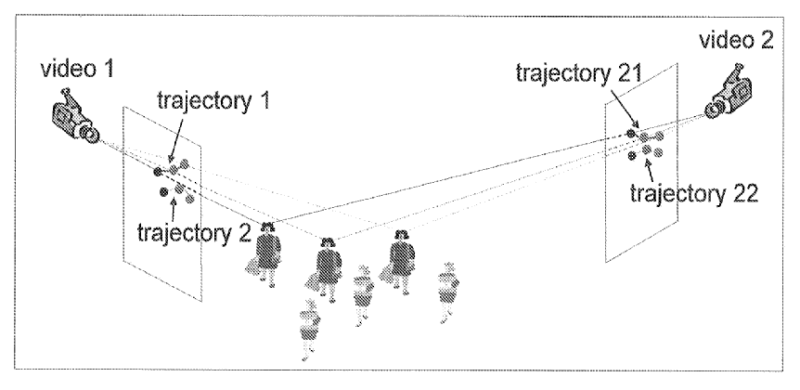

This place covers:

- Algorithms for camera networks

- Interaction, cooperation between trackers

- Multi-view tracking, multi-camera tracking

- The cameras view the same scene (cooperation, e.g. by voting, fusion)

- The cameras view different scenes (cooperation, e.g. by handover, tracklet joining, trajectory joining)

Illustrative example:

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Classification of unknown faces, i.e. recognising the same non-enrolled faces, e.g. recognising the unknown faces across different face tracks |

Attention is drawn to the following places, which may be of interest for search:

Analysis of motion using a sequence of stereo pairs, e.g. cooperative motion analysis from a single stereo camera pair or motion analysis from at least three views, wherein at least one pair of views is processed as stereo pair |

Whenever possible, documents classified herein should also be classified in one of the other subgroups of G06T 7/20.

In particular, in the case of motion analysis from multiple monocular views with subsequent merging or joining of analysis results, details about the respective analysis algorithm per view should be classified in the subgroups of G06T 7/20 as well.

In this place, the following terms or expressions are used with the meaning indicated:

Multi-camera | Treatment of multiple image sequences, not in a pairwise manner |

Stereo | Treatment of two images, e.g. from two cameras or a single camera that is displaced, in a pairwise manner |

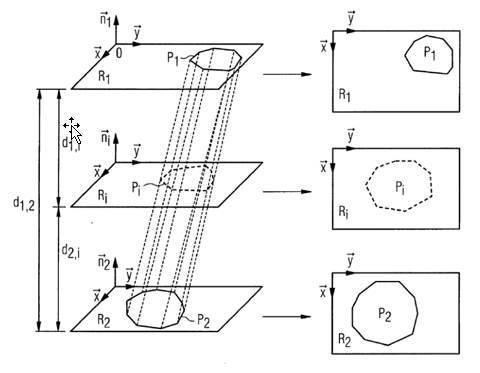

This place covers:

Image analysis algorithms for determining geometric transformations required to register (i.e. align) separate images. The process involves the estimation of transform parameters. Registration means determining the alignment of images or finding their relative position.

- Registration of image subparts for the construction of mosaic images

- Multi-modal, cross-modal, across-modal registration of medical image data sets

- Registration with a medical atlas

- Registration of pre-operative and intra-operative medical image data sets

- Registration for change detection in biomedical or remote sensing images (for change detection, see also G06T 7/20)

- Registration of models

- Registration of a model with an image

- Registration of range data, point clouds (ICP algorithm)

- 2D/2D, 2D/3D, 3D/3D registration

- Interactive registration
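Registration of point clouds with the ICP algorithm can be sketched in 2D. Each iteration pairs every source point with its nearest destination point. It then computes in closed form the rigid rotation and translation that minimise the squared distances between the pairs, and applies that transform. The 2D rigid model, the brute-force nearest-neighbour search and the fixed iteration count are simplifying assumptions. ICP converges only to a local optimum, so it needs a reasonable initial alignment. The function names are hypothetical.

```python
import math


def best_rigid_2d(src, dst):
    """Closed-form least-squares rotation angle and translation mapping the
    paired points src[i] -> dst[i]."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    num = den = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay, bx, by = px - sx, py - sy, qx - dx, qy - dy
        num += ax * by - ay * bx  # sum of cross products
        den += ax * bx + ay * by  # sum of dot products
    theta = math.atan2(num, den)
    c, s = math.cos(theta), math.sin(theta)
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def transform(points, theta, tx, ty):
    """Rotate points by theta about the origin, then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def icp_2d(src, dst, iterations=20):
    """Return the accumulated (theta, tx, ty) and the aligned source points."""
    theta, tx, ty = 0.0, 0.0, 0.0
    current = list(src)
    for _ in range(iterations):
        # Correspondences: nearest destination point for each current point.
        pairs = [min(dst, key=lambda q: math.dist(p, q)) for p in current]
        d_theta, d_tx, d_ty = best_rigid_2d(current, pairs)
        current = transform(current, d_theta, d_tx, d_ty)
        # Compose the step with the transform accumulated so far.
        c, s = math.cos(d_theta), math.sin(d_theta)
        theta, tx, ty = theta + d_theta, c * tx - s * ty + d_tx, s * tx + c * ty + d_ty
    return (theta, tx, ty), current


dst = [(0.0, 0.0), (4.0, 0.0), (0.0, 3.0), (5.0, 5.0), (-3.0, 2.0)]
src = transform(dst, -0.05, 0.3, -0.2)  # misaligned copy of dst
(theta, tx, ty), aligned = icp_2d(src, dst)
print(round(theta, 6))  # 0.05
```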

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Segmentation involving deformable models | |

Recognising three-dimensional objects, e.g. range data matching for pattern recognition |

Attention is drawn to the following places, which may be of interest for search:

Geometric image transformation in the plane of the image for image registration | |

Analysis of motion | |

Combining images from different aspect angles, e.g. spatial compounding | |

Pattern matching criteria, e.g. proximity measures | |

Image or video pattern matching | |