CPC Definition - Subclass G06V

This place covers:

Higher-level interpretation and recognition of images or videos, which includes pattern recognition, pattern learning and semantic interpretation as fundamental aspects. These aspects involve the detection, categorisation, identification, authentication of image or video patterns. For this purpose, image or video data are acquired and preprocessed. In the next step, distinctive features are extracted. Based on these features or representations derived from them, matching, clustering or classification is performed which may lead to one or several decisions, related confidence values (e.g. probabilities), classification or clustering labels. The aim is to find an explanation or to derive a specific meaning.

Pattern recognition or pattern learning in a specific, image or video-related context that includes:

- scene-related patterns and scene-specific elements – group G06V 20/00;

- character recognition or recognising digital ink; document-oriented image-based pattern recognition – group G06V 30/00;

- human-related, animal-related or biometric patterns in image or video data – group G06V 40/00.

Further details are given in the Definition statement of group G06V 10/00. Image or video recognition can be carried out by using electronic means (G06V 10/70) or by using optical means (G06V 10/88).

Typically, a pattern recognition system involves one or more of the following techniques:

Number of samples | Data entities (e.g. image objects) involved; Individual | Data entities (e.g. image objects) involved; Groups (classes) |

One data sample | Authentication | Categorisation |

Several data samples | Identification | Clustering |

Pattern recognition techniques in general are classified in group G06F 18/00.

Some techniques of image or video understanding performed in the preprocessing step — which start with a bitmap image as an input and derive a non-bitmap representation from it — can also be encountered in general image analysis. If these techniques do not involve one of the functions of image or video pattern authentication, identification, categorisation or clustering, classification should be made only in the appropriate subgroups of subclass G06T.

Some examples of these techniques are: general methods for image segmentation, e.g. obtaining contiguous image regions with similar pixels, for position and size determination of an object without establishing its identity, for calculating the motion of an image region corresponding to an object irrespective as to the identity of the object, for camera calibration, etc.

Techniques based on coding, decoding, compressing or decompressing digital video signals using video object coding are classified in group H04N 19/20.

Velocity or trajectory determination systems or sense-of-movement determination systems using radar, sonar or lidar are classified in groups G01S 13/58, G01S 15/58, G01S 17/58, respectively. Radar, sonar or lidar systems specially adapted for mapping or imaging are classified in groups G01S 13/89, G01S 15/89, G01S 17/89.

General purpose image data processing, in particular image watermarking, is classified in group G06T 1/00, while selective content distribution, such as generation or processing of protective or descriptive data associated with content involving watermarking is covered by group H04N 21/8358. General purpose image data acquisition and related pre-processing using digital cameras, and processing used to control digital cameras is classified in group H04N 5/00. Play-back, editing or synchronising of a music score, including interpretation therefor, as well as transmission of a music score between systems of musical instruments for play-back, editing or synchronising is classified in subclass G10H.

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Detecting, measuring and recording for medical diagnostic purposes | |

Identifications of persons in medical applications | |

Sorting of mail or documents using means for detection of the destination | |

Input arrangements for interaction between user and computer | |

Testing to determine the identity or genuineness of paper currency or similar valuable papers or for segregating those which are unacceptable, e.g. banknotes that are alien to a currency |

Attention is drawn to the following places, which may be of interest for search:

Program-controlled manipulators | |

Optical viewing arrangements in vehicles | |

Photogrammetry or videogrammetry, e.g. stereogrammetry; Photographic surveying | |

Testing balance of machines or structures | |

Investigating or analysing materials by determining their chemical or physical properties | |

Radio direction-finding; Radio navigation; Determining distance or velocity by use of radio waves; Locating or presence-detecting by use of the reflection or reradiation of radio waves; Analogous arrangements using other waves | |

Geophysics | |

Optical elements, systems or apparatus | |

Photomechanical production of textured or patterned surfaces, e.g. for printing, for processing of semiconductor devices | |

Control or regulating systems in general | |

Input arrangements for transferring data to be processed into a form capable of being handled by the computer; Output arrangements for transferring data from processing unit to output unit, e.g. interface arrangements | |

Comparing digital values in methods or arrangements for processing data by operating upon the order or content of the data handled | |

Content-based image retrieval | |

Fourier, Walsh or analogous domain transformations in digital computers | |

Security arrangements for protecting computer systems against unauthorised activity | |

Authentication, i.e. establishing the identity or authorisation of security principals | |

Computer-aided design [CAD] | |

Handling natural language data | |

Methods or arrangements for sensing record carriers | |

Record carriers for use with machines and with at least a part designed to carry digital markings | |

Computer systems based on specific computational models | |

Data processing for business purposes, logistics, stock management | |

General purpose image data processing, e.g. specific image analysis processor architectures or configurations | |

Geometric image transformation in the plane of the image, e.g. rotation of a whole image or part thereof | |

Image enhancement or restoration | |

Image analysis in general | |

Motion image analysis using feature-based methods | |

Image analysis using feature-based methods for determination of transform parameters for the alignment of images | |

Image analysis of texture | |

Image analysis for depth or shape recovery | |

Image analysis using feature-based methods for determining position and orientation of objects | |

Image analysis for determination of colour characteristics | |

Image coding | |

Image contour coding, e.g. using detection of edges | |

Two-dimensional [2D] image generation | |

Three-dimensional [3D] image rendering | |

Lighting effects in 3D image rendering | |

Three-dimensional [3D] modelling for computer graphics | |

Manipulating 3D models or images for computer graphics | |

Checking-devices for individual registration on entry or exit | |

Burglar, theft or intruder alarms using image scanning and comparing means | |

Traffic control systems for road vehicles | |

Labels, tag tickets or similar identification or indication means | |

Speech recognition | |

Speaker recognition | |

Bioinformatics | |

Chemoinformatics and computational material science | |

Healthcare informatics | |

Arrangements for secret or secure communications; Network security protocols | |

Scanning, transmission or reproduction of documents, e.g. facsimile transmission | |

Studio circuitry for television systems | |

Closed circuit television systems | |

Methods or arrangements for coding, decoding, compressing or decompressing digital video signals using video object coding | |

Methods or arrangements for coding, decoding, compressing or decompressing digital video signals, region motion estimation for predictive coding | |

Semiconductor devices |

Pattern recognition or pattern learning techniques for image or video understanding involving feature extraction or matching, clustering or classification should be classified in groups G06V 10/40 or G06V 10/70 irrespective whether an application-related context provided by the groups G06V 20/00 - G06V 40/00 exists.

In this place, the following terms or expressions are used with the meaning indicated:

authentication | verifying the identity of a sample using a test of genuineness by undertaking a one-to-one comparison with the genuine (authentic) sample |

categorisation | assigning a sample to a class according to certain distinguishing properties (or characteristics) of that class; it generally involves a one-to-many test in which one data sample is compared with the characteristics of several classes. |

classification | assigning labels to patterns |

clustering | grouping or separating samples in groups or classes according to their (dis)similarity or closeness. It generally involves many-to-many comparisons using a (dis)similarity measure or a distance function. |

feature extraction | deriving descriptive or quantitative measures from data. |

identification | in the context of collecting of samples, identification means selecting a particular sample having a (predefined) characteristic which distinguishes it from the others. Several samples are generally matched against the one to be identified in a many-to-one process. |

image and video understanding | techniques for semantic interpretation, pattern recognition or pattern learning specifically applied to images and videos |

pattern | data having characteristic regularity, or a representation derived from it, having some explanatory value or a meaning, e.g. an object depicted in an image |

This place covers:

The functions performed at each step in the operation of an image or video recognition or understanding system.

These steps include:

Processing steps involved in a pattern recognition or understanding system

Classification of each of these steps may be made in groups as follows:

- G06V 10/10 – Image acquisition;

- G06V 10/20 – Image pre-processing;

- G06V 10/40 – Extraction of image or video features;

- G06V 10/70 – Arrangements for image recognition using pattern recognition or machine learning, e.g. matching, clustering or classification.

This place does not cover:

Character recognition in images or videos |

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Image or video recognition or understanding of scene-related patterns and scene-specific elements | |

Image or video recognition or understanding of human-related, animal-related or biometric patterns in image or video data | |

Detecting, measuring and recording for medical diagnostic purposes | |

Identifications of persons in medical applications | |

Sorting of mail or documents using means for detecting the destination | |

Input arrangements for interaction between user and computer | |

Checking-devices for individual registration on entry or exit | |

Testing to determine the identity or genuineness of paper currency or similar valuable papers | |

Burglar, theft or intruder alarms using image scanning and comparing means | |

Traffic control systems for road vehicles | |

Scanning, transmission or reproduction of documents, e.g. facsimile transmission |

Attention is drawn to the following places, which may be of interest for search:

Optical viewing arrangements in vehicles | |

Investigating or analysing materials by determining their chemical or physical properties | |

Radio direction-finding; Radio navigation; Determining distance or velocity by use of radio waves; Locating or presence-detecting by use of the reflection or reradiation of radio waves; Analogous arrangements using other waves | |

Geophysics | |

Optical elements, systems or apparatus | |

Photomechanical production of textured or patterned surfaces, e.g. for printing, for processing of semiconductor devices | |

Input arrangements for transferring data to be processed into a form capable of being handled by the computer; Output arrangements for transferring data from processing unit to output unit, e.g. interface arrangements | |

Content-based image retrieval | |

Fourier, Walsh or analogous domain transformations | |

Security arrangements for protecting computer systems against unauthorised activity | |

User authentication in security arrangements for protecting computers, components thereof, programs or data against unauthorised activity | |

Computer-aided design | |

Handling natural language data | |

Computer systems based on specific computational models | |

General purpose image data processing, e.g. specific image analysis processor architectures or configurations | |

Geometric image transformation in the plane of the image, e.g. rotation of a whole image or part thereof | |

Image enhancement or restoration | |

Image analysis in general | |

Motion image analysis using feature-based methods | |

Image analysis using feature-based methods for determination of transform parameters for the alignment of images | |

Image analysis of texture | |

Image analysis for depth or shape recovery | |

Image analysis using feature-based methods for determining position and orientation of objects | |

Image analysis for determination of colour characteristics | |

Image coding | |

Image contour coding, e.g. using detection of edges | |

Two-dimensional image generation | |

Three-dimensional [3D] image rendering | |

Lighting effects in 3D image rendering | |

Three-dimensional [3D] modelling for computer graphics | |

Manipulating 3D models or images for computer graphics | |

Bioinformatics | |

Chemoinformatics and computational material science | |

Healthcare informatics | |

Secret or secure communication | |

Studio circuitry for television systems | |

Closed circuit television systems | |

Methods or arrangements for coding, decoding, compressing or decompressing digital video signals using video object coding | |

Methods or arrangements for coding, decoding, compressing or decompressing digital video signals, region motion estimation for predictive coding |

In this place, the following terms or expressions are used with the meaning indicated:

classification | assigning category labels to patterns |

clustering | grouping or separating samples in groups or classes according to their (dis)similarity or closeness. It generally involves many-to-many comparisons using a (dis)similarity measure or a distance function. |

feature extraction | deriving descriptive or quantitative measures from data |

image and video understanding | techniques for semantic interpretation, pattern recognition or pattern learning specifically applied to images and videos |

pattern | data having characteristic regularity, or a representation derived from it, having some explanatory value or a meaning, e.g. an object depicted in an image |

pattern recognition | detection, categorisation, authentication and identification of patterns for explanatory purposes or to derive a certain meaning in images or video data, by acquiring, pre-processing or extracting distinctive features and matching, clustering or classifying these features or representations thereof |

This place covers:

The process of acquiring still images or video sequences for the purpose of subsequently recognising patterns in the acquired images.

Image capturing arrangements which visually emphasise those features of the objects that are relevant to the pattern recognition process.

Optimising the image capturing conditions, such as correctly placing the object with respect to a camera, choosing the right moment for triggering the image sensor, or suitably setting the parameters of the image sensor.

Devices for image acquisition including sensors that generate a conventional two-dimensional image irrespective of its nature (e.g. grey level image, colour image, infrared image, etc.), a three-dimensional point cloud, a sequence of temporally-related images or a video.

Notes – other classification places

Constructional details of the image acquisition arrangements are covered by a hierarchy of subgroups branching from group G06V 10/12:

- Group G06V 10/14 covers the design of the optical path, including the light source (if any), the different optical elements such as lenses, prisms, mirrors, apertures/diaphragms, filters, the individual optical characteristics of these elements (e.g. refraction indices, focal lengths, chromatic aberrations or distortions) and their optical arrangement;

- Group G06V 10/141 covers the control of the illumination, e.g. strategies for activating additional light sources if the ambient lighting is insufficient for a reliable pattern recognition or if individual facets of an object are obstructed by shadows;

- Group G06V 10/143 covers processes or devices which emit or sense radiation in different parts of the electromagnetic spectrum (e.g. infrared light, the visual spectrum and ultraviolet light) so as to obtain a comprehensive set of sensor readings, which when combined facilitate an automated distinction of different kinds of objects. For example, an infrared image could be used for isolating living bodies from the background to analyse the presence of a living body in a second image modality, like an RGB image, the second image being aligned with the infrared image. The images captured in infrared could be used for night vision, e.g. detecting pedestrians or animals for collision avoidance. Sensors using multiple wavelengths are also typically used in remote sensing (e.g. when detecting different kinds of crops, forests, lakes, rivers or urban areas in multispectral or hyperspectral satellite imagery; see also group G06V 20/13);

- Group G06V 10/145 covers illumination arrangements which are specially adapted to increase the reliability of the pattern recognition process. For example, mitigating shadow artefacts, which are likely to deteriorate the pattern recognition process, by providing specially designed arrangements of light sources (light domes, softboxes, ring flashes, etc). The pattern recognition process can also be supported by means of a structured light projector, which projects specific patterns (e.g. stripes or fringe patterns) onto the object so as to augment the two-dimensional image data with three-dimensional information and for this purpose, additional optical elements such as gratings or filter masks may be added to the illumination system. These various special illumination arrangements are also commonly used for recognising patterns in microscopic imagery (see also group G06V 20/69);

- Group G06V 10/147 covers technical details of the image sensor, such as the sensor technology (photodiodes, CCD, CMOS, etc.), the size and the geometrical distribution of light receiving elements on the sensor surface, or the presence of additional optical elements on the sensor (e.g. micro-lenses, diaphragms, collimators or coded aperture masks).

Illustrative examples of subject matter classified in this place:

1.

2.

Illumination by casting infrared (IR) light onto a person to highlight regions of a hand, to assist in gesture recognition.

CCTV and image transmission systems are classified in group H04N 7/00.

This place does not cover:

Image acquisition in photocopiers or fax machines | |

Controlling digital cameras |

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Image acquisition arrangements specifically designed for optical character recognition | |

Image acquisition arrangements specifically designed for fingerprint or palmprint sensors | |

Image acquisition arrangements specifically designed for vascular sensors | |

Image acquisition arrangements specifically designed for taking pictures of the eye |

Attention is drawn to the following places, which may be of interest for search:

Recognising patterns in satellite imagery | |

Recognition of microscopic objects in scenes | |

Devices for illuminating a surgical field | |

Optical instruments for measuring contours or curvatures | |

Means for illuminating specimens in microscopes | |

Digital video cameras | |

Digital image sensors |

In this place, the following terms or expressions are used with the meaning indicated:

CCD | charge-coupled device |

CMOS | complementary metal-oxide-semiconductor |

visible light | light as seen by the eye, typically in the range 400 – 750 nm |

IR | infrared, wavelength longer than those of visible light, typically in the range 750 nm - 1 mm |

LIDAR | light detection and ranging, optical range sensing method, which targets a laser at objects and generates a three-dimensional representation (a point cloud) |

NIR | near-infrared, typically having wavelengths between 750 nm - 2,5 μm. |

UAV | unmanned aerial vehicle, a drone |

UV | ultraviolet light, wavelengths shorter than that of visible light, but longer than X-rays, typically having a range of 10 nm - 400 nm |

X-rays | electromagnetic radiation in the range 10 pm – 10 nm |

This place covers:

Any kind of processing of acquired image or video data before the steps of feature extraction and recognition; devices configured to perform this processing.

Processing to prepare an image for feature extraction.

Processing to enhance image quality with the intent to emphasise structures in the image, which inform the automated recognition of objects or categories of objects.

Processing to attenuate or discard elements of the image, which are unlikely to be useful for the pattern recognition process.

Processing converts image to a standard format suitable for feature extraction and pattern recognition routines.

Notes – other classification places

Specific aspects of pre-processing are covered by the subgroups of group G06V 10/20; they particularly relate to aspects such as:

- Processes or devices for identifying regions of the image, which should be subjected to the pattern recognition process, or which are likely to contain image information that is relevant for an object recognition task – covered by group G06V 10/22;

- Correcting wrongly oriented images (e.g. changing the orientation from an erroneous portrait mode to landscape mode), compensating for the pose change of the object by performing affine transformations (translation, scaling, homothety, similarity, reflection, rotation, shear mapping and compositions of them in any combination and sequence), or for correcting geometrical distortions induced by the image capturing – covered by group G06V 10/24;

- Determination of a bounding box containing the pattern of interest, processing within a region-of-interest [ROI] or volume-of-interest [VOI] to emphasise the pattern for recognition – covered by group G06V 10/25;

- Devices or processes for separating a candidate object from other, non-interesting image regions or the background; image segmentation to the extent that it is adapted to support a subsequent recognition step – covered by group G06V 10/26;

- Adjusting the bit depth, e.g. conversion to black-and-white images, and setting thresholds therefor, e.g. by analysis of the histogram of the image grey levels; Converting the image data to a predetermined numerical range, e.g. by scaling pixel values – covered by group G06V 10/28;

- Techniques for improving the signal-to-noise [SNR] ratio or denoising the image for the purpose of improving the recognition – covered by group G06V 10/30;

- Adjusting the size or the resolution of the image to a standard format, e.g. by scaling; adjusting the size of the detected object to a certain format – covered by group G06V 10/32;

- Smoothing or thinning to obtain an alternative, less complex representation of the pattern; applying morphological operators (e.g. morphological dilation, erosion, opening or closing) for filling in gaps or merging elements, with the aim of emphasising the structures relevant for recognition; skeleton extraction for characterising the shape of a pattern – covered by group G06V 10/34;

- Enhancing the contrast by convolving the image with a filter mask or by applying a non-linear operator to local image patches – covered by group G06V 10/36.

Illustrative example of subject matter classified in this place:

Alignment of the image of a face by affine transformations to obtain a pose-invariant image.

Different image pre-processing in general is covered in groups as follows:

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Recognising scenes; Scene-specific elements | |

Character recognition; Recognising digital ink; Document-oriented image-based pattern recognition | |

Image or video recognition or understanding of human-related, animal-related or biometric patterns in image or video data |

Attention is drawn to the following places, which may be of interest for search:

Filter operations to reveal edges, corners or other image features, which are used to characterise objects | |

Image enhancement or restoration | |

Image segmentation | |

Morphological operators for image segmentation |

In this place, the following terms or expressions are used with the meaning indicated:

DCT | discrete cosine transform |

FFT | fast Fourier transform |

FOV | field of view, the region of the environment that an image sensor observes |

ROI | region of interest, an image patch that is likely to contain relevant information |

skeletonisation | process of shrinking a shape to a connected sequence of lines, which are equidistant to the boundaries of the shape |

SNR | signal-to-noise ratio |

VOI | volume of interest, a cuboid that encloses three-dimensional data points that are likely to represent relevant information |

This place covers:

Guiding a pattern recognition process or device to a specific region of an image where the pattern recognition algorithm is to be applied, e.g. using fiducial markers.

The use of reference points in images, e.g. patterns having unique combinations of colours or other image properties, which make them useful for guiding a pattern recognition process.

Illustrative examples of subject matter classified in this place:

1.

A fiducial marker placed in the centre of an object is used for its object detection and recognition.

2.

3.

A pattern present on a marker gives additional information about the scene to be recognised.

Determination of position or orientation of image objects using fiducial markers is covered by group G06T 7/70.

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Devices for tracking or guiding surgical instruments | |

Fiducial marks and measuring scales in optical systems | |

Marks applied to semiconductor devices |

Attention is drawn to the following places, which may be of interest for search:

Aligning, centring, orientation detection or correction of the image | |

Image pre-processing for image or video recognition or understanding involving the determination of region of interest [ROI] or volume of interest [VOI] | |

Image analysis for determining position or orientation of objects or cameras |

In this place, the following terms or expressions are used with the meaning indicated:

AR | augmented reality |

ARTag | fiducial marker system based on ARToolKit |

ARToolKit | open-source software library for augmented reality |

FFT | fast Fourier transform |

fiducial marker | an image element which is explicitly designed for serving as a visual landmark point. A fiducial marker can be as simple as a set of lines forming crosshairs or a rectangle, but it can also be a more elaborate pattern such as an augmented reality tag, which additionally conveys information encoded as a two-dimensional barcode. Fiducial markers generally provide information about the position and, often, the orientation or the three-dimensional arrangement of objects in images. Additionally, they can comprise unique identifiers to support the recognition process. Fiducial markers are designed for being easily distinguishable from other image elements; therefore, they commonly have sharp image contrasts (e.g. by limiting their colours to black and white), and they are often designed to generate sharp peaks in the frequency space, allowing them to be easily recognisable by a two-dimensional Fourier transform. Commonly known fiducial markers are those defined by the augmented reality toolkit (ARToolKit). |

This place covers:

Methods or arrangements for aligning or centring the image pattern so that it meets the requirements for successfully recognising it; for example, adjusting the camera's field of view such that a face, a person or another object of interest is located at the centre of the image.

Adjusting the field of view such that the object is entirely visible without any parts of the object extending beyond the boundaries of the image

Correcting the image alignment by changing from landscape to portrait mode.

Detecting or correcting images that were flipped upside-down or left-right.

Compensating for image skew.

Illustrative examples of subject matter classified in this place:

1A.

1B.

Compensation for the tilt angle of a face captured by a mobile phone by aligning the image.

Attention is drawn to the following places, which may be of interest for search:

Image pre-processing by selection of a specific region containing or referencing a pattern; Locating or processing of specific regions to guide the detection or recognition | |

Image pre-processing for image or video recognition or understanding involving the determination; Determining of region of interest [ROI] or volume of interest [VOI] |

In this place, the following terms or expressions are used with the meaning indicated:

FOV | field of view, the region of the environment that an image sensor observes |

This place covers:

Methods or arrangements for identifying regions in two-dimensional images, or volumes in three-dimensional point cloud data sets, which contain information relevant for recognition.

Identifying regions or volumes of interest in an image, point cloud or distance map which are likely to lead to successful object recognition.

Notes – technical background

These notes provide more information about the technical subject matter that is classified in this place:

A region or volume of interest [RoI or VoI] could include, for example, a human face (in case of a CCTV system), a vehicle or a pedestrian (in case of a camera-based traffic monitoring system), an obstacle on the road (in case of an advanced driver assistance system) or an item on a conveyor belt (in case of an industrial automation system).

The determination of a region or volume of interest is in essence a task of object detection, that is to say detecting the presence of a particular kind of object in images and localising the object(s).

It is the necessity of localising an object and, in particular, of describing the position and the spatial extent of the object (e.g. by outputting a bounding box around it) that distinguishes "object detection" algorithms from "object recognition" algorithms. This is because an "object detection" algorithm will merely assess whether a given visual object exists at a given image location. It may automatically generate a bounding box (e.g. around weeds in a field of vegetables) without solving the problem of "object classification" (e.g. analysing an image of a weed to determine its species and to output its botanical name).

Algorithms for detecting ROIs or VOIs in video sequences typically use frame differencing or more advanced optical flow methods for detecting moving objects.Algorithms that determine a region or volume of interest [ROI or VOI] may use visual cues to establish the location of a boundary box, e.g. by evaluating features such as colour distributions or local textures.

The determination of a region or volume of interest may be facilitated by using special illumination, such as casting light in a specific direction where an object is to be expected in autonomous driving, or by treating the images of specimens with special staining, as is the case in classification of objects in microscopic imagery.

More recently developed algorithms use neural networks [NN] which integrate object detection and recognition. An example is the region-based convolutional neural network [R-CNN] which uses segmentation algorithms for splitting the image into individual segments to find candidate ROIs, followed by inputting each ROI to a classifier for subsequent object recognition.

Other solutions, such as the you only look once [YOLO], region-proposal networks [RPN] or single shot detector [SSD] networks integrate the ROI detection into the actual object recognition step.

Illustrative example of subject matter classified in this place:

Using a mixed architecture based on region-proposal convolutional networks [R-CNN or RPN] to define a region of interest [ROI] and classifying it by another mixed convolutional neural network [CNN] using 2D and 3D information.

Determination of a ROI for character recognition is classified in group G06V 30/146.

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Devices for radiation diagnosis | |

Diagnostic systems using ultrasound, sound or infrasound | |

Computer-aided diagnosis systems |

Attention is drawn to the following places, which may be of interest for search:

Region-based segmentation image analysis |

In this place, the following terms or expressions are used with the meaning indicated:

AOI | area of interest, synonym for ROI |

FOV | field of view, the region of the environment that an image sensor observes |

R-CNN | convolutional neural network using a region proposal algorithm for object detection (variants: fast R-CNN, faster R-CNN, cascade R-CNN) |

ROI | region of interest, an image region that is likely to contain relevant information concerning an object to be detected and recognised |

RPN | region proposal network, an artificial neural network architecture which defines a ROI |

SSD | single shot (multibox) detector, a neural network for object detection |

VOI | volume of interest, a cuboid that encloses three-dimensional data points that are likely to represent relevant information concerning an object to be detected and recognised |

YOLO | you only look once, an artificial neural network used for object detection (comes in various versions: YOLO v2, YOLO v3, etc.). |

This place covers:

Methods and arrangements for segmenting patterns in images or video frames, e.g. segmentation algorithms. Note: segmentation algorithms divide images or video frames into distinct regions, so that boundaries between neighbouring regions coincide with changes of some image properties.

Segmentation algorithms which operate directly on the image by considering the pixel values and their neighbourhood relationships, e.g. mathematical-morphology based algorithms, such as region growing, watershed methods and level-set methods.

Segmentation algorithms which generate a hierarchy of segmentations by starting with a coarse segmentation, which includes only few segments, and successively refine this coarse segmentation by splitting (possibly recursively) the coarse image segments into finer segments (coarse-to-fine approaches).

Graph-cut algorithms such as normalised cuts or min-cut which use graph-based clustering algorithms for image segmentation.

Region growing algorithms which start with few seed points and iteratively expand these into larger regions until some optimality criterion is fulfilled.

The use of classifiers for foreground-background separation. Note: classifiers calculate a score function which expresses a probability (or belief) that a given region of the image is a foreground object or part of the background. The image is then segmented based on these score values.

Deep learning models, in particular different encoder-decoder architectures based on convolutional neural networks [CNN's], applied to semantic image segmentation (a task which requires not only splitting the image into regions, but also consistently assigning labels to image object categories, e.g. "sky", "trees", "road").

Detection of occlusion. Note: Sometimes an object (e.g. a trunk of a tree) partly occludes another object, e.g. a dog behind the tree, which may cause the other object to be split into multiple disjoint segments; occlusion detection algorithms deal with such situations so as to join semantically linked segments into a single segment.

Other algorithms (e.g. some active contour models) which start from an initial image region, which is large enough to surely enclose an object in the image, and they iteratively shrink this region until its boundary is tightly aligned with the contour of the object.

Notes – technical background

These notes provide more information about the technical subject matter that is classified in this place:

Segmentation algorithms divide images or video frames into distinct regions, so that boundaries between neighbouring regions coincide with changes of some image properties.

Segmentation algorithms may determine regions of homogeneous texture, regions having characteristic colours, regions enclosing individual objects, etc.

Some segmentation algorithms are in essence clustering algorithms. They disregard the spatial arrangement of pixels in the image and compute clusters in a feature space (e.g. by running the k-means algorithm on all colour values in an image). They then group spatially-connected pixels belonging to the same cluster into a region (a "segment").

Illustrative examples of subject matter classified in this place:

1.

Colour segmentation of a skin region of a face using the clustering in a colour space.

2.

Example of a scene frequently encountered in autonomous driving and its semantic segmentation map with regions such as "road", "sky", "trees", etc.

Variational methods used for object recognition, such as active contour models [ACM, or "snakes"], active shape models [ASM] or active appearance models [AMM] are classified in group G06V 10/74.

Attention is drawn to the following places, which may be of interest for search:

Clustering algorithms for image or video recognition or understanding | |

Image segmentation in general | |

Region-based segmentation image analysis | |

Edge-based image segmentation in general | |

Motion-based image segmentation in general |

In this place, the following terms or expressions are used with the meaning indicated:

BSD | Berkeley segmentation data set, a collection of manually segmented images |

K-Means | clustering algorithm |

NCUTS | normalised cuts, a graph-based segmentation algorithm |

PASCAL VOC | collection of image data sets for evaluating the performance of computer vision algorithms; it includes a dedicated data set for evaluating segmentation algorithms. |

This place covers:

Methods and arrangements for quantising the image with the effect that the number of possible pixel values does not exceed a predetermined maximum number.

Note: In the limit, this quantisation generates a binary two-tone (black-and-white) image, e.g. an image in which foreground objects appear white and any objects in the background appear black. Quantisation to other numbers of pixel values is also possible.

Quantisation algorithms which calculate a histogram of the grey value distribution and then use one or more thresholds to divide the grey values into different ranges, and then map grey values in the same range to the same target value.

Notes – technical background

These notes provide more information about the technical subject matter that is classified in this place:

Image quantisation refers to a technique in which the number of possible pixel values is set so that it does not exceed a predetermined maximum number. The source image may be an analogue image, which is quantised for being storable in digital form, or a digital image, which is quantised to a smaller bit depth.

Quantisation may be uniform or non-uniform. The subsequent encoding might adapt the number of bits to encode the representation, using more bits to represent those ranges of grey values which are considered to be particularly relevant for the subsequent image recognition step.

Colour or grey value quantisation can cause artefacts in the resulting image, such as apparent edges or quantisation boundaries which did not exist in the original image (e.g. colour banding). These artefacts can be mitigated by dithering techniques (e.g. by using the Floyd-Steinberg algorithm).

Quantisation may be performed globally (using the histogram of the whole image) or locally (using statistics of local image patches).

Illustrative examples of subject matter classified in this place:

1.

2.

Detection of faces in colour images by creating a single-channel image, e.g. a greyscale image, and subsequent binarisation by thresholding.

Image enhancement or restoration by the use of histograms is covered by group G06T 5/40.

Attention is drawn to the following places, which may be of interest for search:

Image coding | |

Circuits or arrangements for halftone screening | |

Systems for transmitting or storing colour picture signals | |

Quantisation for adaptive video coding |

In this place, the following terms or expressions are used with the meaning indicated:

bit depth | number of bits, which is available for indicating the grey level or colour of an individual pixel |

This place covers:

Techniques for noise removal or filtering such as thresholding in the frequency domain (e.g. after a Fourier or wavelet transform), edge-preserving smoothing techniques such as anisotropic diffusion (also called Perona-Malik diffusion) or deep learning approaches to image denoising, e.g. using convolutional neural networks [CNN's].

Linear smoothing filters (e.g. for convolving the original image with a low-pass filter such as a Gaussian kernel matrix or applying a Wiener filter) and non-linear filtering such as median filtering or bilateral filtering (see also group G06V 10/36), when applied for the purpose of noise removal.

Noise estimation techniques based on a reference image, wherein the reference image may be:

- a previously captured image which was obtained with the same camera set-up;

- a previously captured image which was obtained with an optical system of higher quality, potentially downscaled or otherwise converted to match the expected performance parameters of a lower-quality system;

- artificially generated patterns, obtained, e.g. by blurring or smoothing the original image or by means of computer graphics techniques (e.g. rendered from a 3D model of an object).

Estimation of noise parameters based on different noise models, e.g. additive white Gaussian noise, speckle noise, etc.

Detection of blur or defocusing of the image pattern.

Illustrative examples of subject matter classified in this place:

1.

Face image denoising

2.

Face denoising using an autoencoder convolutional neural network architecture (above), followed by face recognition using a discriminator architecture (below).

3.

Attention is drawn to the following places, which may be of interest for search:

Aligning, centring, orientation detection or correction for image or video recognition or understanding | |

Segmentation of patterns in the image field | |

Local image operators for image or video recognition or understanding, e.g. median filtering | |

Enhancement or restoration for general image processing |

In this place, the following terms or expressions are used with the meaning indicated:

DCT | discrete cosine transform |

FFT | fast Fourier transform |

probability density function | |

SNR | signal to noise ratio |

This place covers:

Processes and devices for bringing image or video data to a standard format, so that it may be compared with reference data (e.g. with images in an image database or gallery images serving as reference templates).

Normalisation or standardisation of the size of images, e.g. by cropping, by reducing the image size via downscaling or sub-sampling, or by enlarging images via up-scaling and interpolation.

Note – technical background

These notes provide more information about the technical subject matter that is classified in this place:

Normalisation can involve adjustments to guarantee that all objects to be recognised have similar size or appearance (e.g. by rescaling facial images so that they are centred and that the area of the face covers a predetermined fraction of the image, or by only selecting frontal images).

Illustrative example of subject matter classified in this place:

Correction of the region detected for the face image by cropping around the face region.

Attention is drawn to the following places, which may be of interest for search:

Image enhancement or restoration in general, e.g. dynamic range modification |

This place covers:

Techniques for binary and grayscale morphological analysis of image patterns. These techniques include:

- Basic morphological operators: erosion, dilatation, opening, closing, watershed analysis, etc.;

- Detection of patterns or arrangements of pixels in a binary or a greyscale image, e.g. by using the hit-or-miss transform;

- Finding the outline or contour of a foreground object by morphological processing (morphological edge detection, watershed processing, etc.);

- Finding the skeleton of a foreground object, e.g. by thinning, medial axis transformation, contour-based erosion, etc.;

- Distance transformation.

Illustrative example of subject matter classified in this place:

Extraction of the skeleton representation of a human body by applying morphological operations.

In this place, the following terms or expressions are used with the meaning indicated:

skeletonisation | process of shrinking a shape to a connected sequence of lines, which are equidistant to the boundaries of the shape |

This place covers:

Image or video pre-processing techniques which examine a local neighbourhood around a pixel and assign a value to the pixel, which is a function of the values (e.g. colour values or luminance values) of the pixels in this local neighbourhood.

The application of local operators in the spatial domain (e.g. by convolving the image with a predefined kernel matrix) or in the frequency domain (e.g. by calculating the Fourier transform and performing a point-wise multiplication in the frequency domain).

Notes – technical background

These notes provide more information about the technical subject matter that is classified in this place:

1. Usually the local neighbourhood is defined as a rectangular region of pixels with the pixel of interest placed at its centre, e.g. a 3*3 pixels neighbourhood or a 5*5 pixels neighbourhood; neighbourhoods having other shapes are also possible, but they are less common.

2. Local operators include:

- Linear operators, e.g. convolutions with low-pass filter matrices (such as a Gaussian kernel or a boxcar function; and convolutions with high-pass filter matrices for sharpening the image, or convolutions with spatial band-pass filters (such as the difference of Gaussians filter);

- Non-linear operators, e.g. median filters and more complex operators such as those for evaluating local luminance differences in order to detect sparkle points which are significantly brighter than their immediate surroundings;

- Non-linear operators, e.g. the Sobel operator and the Marr Hildreth operator are also frequently used for emphasising object boundaries or elongated structures in images;

- Differential operators such as the Laplace operator and filter matrices for calculating image gradients.

Notes – other classification places

Use of low-pass filter matrices for noise removal – group G06V 10/30.

Use of median filters for noise removal – group G06V 10/30.

Use of the Sobel operator and the Marr Hildreth operator for edge detection – group G06V 10/44.

Illustrative example of subject matter classified in this place:

Analysis of local image patches of a face image using a local operator and encoding the representation for subsequent face recognition.

Attention is drawn to the following places, which may be of interest for search:

Noise removal for image or video recognition or understanding | |

Detecting edges or corners for image or video recognition or understanding | |

Extracting features from image blocks | |

Local operators for general image enhancement |

In this place, the following terms or expressions are used with the meaning indicated:

BPF | band-pass filter |

DCT | discrete cosine transform |

DoG | difference of Gaussians |

DWT | discrete wavelet transform |

FFT | fast Fourier transform |

HPF | high-pass filter |

Kernel | filter kernel, a matrix which an image is convolved with |

LPF | low-pass filter |

This place covers:

Methods and arrangements for extracting visual features which are subsequently input to an object recognition algorithm.

Notes – technical background

These notes provide more information about the technical subject matter that is classified in this place:

Formerly, the choice of suitable feature extraction algorithms was a crucial design choice in the art of pattern recognition algorithms. It had a strong influence of the overall performance. With the advent of deep learning, particularly in convolutional neural networks, the need for the hand-picked design of dedicated feature extraction algorithms has decreased to some extent, because the inner layers of the neural networks are trained to automatically find suitable features from the training data.

Notes – other classification places

Subgroups of group G06V 10/40 focus on specific kinds of feature extraction techniques. These include:

- Features which describe characteristics of the entire image or an entire object (group G06V 10/42);

Note: Global feature extraction techniques often involve domain transformations, such as frequency domain transformation. The global descriptors contain numerical data, such as vectors or matrices, but they can also represent the image or object in an abstract form as a string of symbols from a predetermined alphabet, which are integrated using a grammar (covered by group G06V 10/424).

- Graph structures having vertices and edges (e.g. directed attributed graphs or trees) are another way of representing patterns in images; the vertices of such graph structures represent qualitative or quantitative feature measurements; the edges represent relations between them (covered by group G06V 10/426);

- Local features (covered by group G06V 10/44) build representations of the local image content. Examples of local features include luminance values or colour characteristics, potentially from more than three colour channels, local edges, corners, gradients and texture. Edges can be extracted by convolutions with specially designed filter masks (e.g. Prewitt, Sobel) or by convolutions with a numerical filter, e.g. wavelet filters (Haar, Daubechies), or by difference of Gaussians, Laplacian of Gaussians, Gabor filters etc. Local features such as edges and corners, which can be extracted by applying a pre-defined image operator, are also referred to as low-level features to distinguish them from features such as objects or events, which are extracted using a machine learning algorithm;

- Higher-level features, obtained e.g. by detecting silhouettes of shapes and describing them, e.g. using a chain code, by a Fourier expansion of the contour, by curvature scale-space analysis or by sampling points along object boundaries and quantifying their relative locations;

- Algorithms for evaluating the saliency of local image regions; selecting salient points as key points (covered by group G06V 10/46);

- For the purpose of feature extraction, techniques for converting image or video data to a different parameter space, e.g. using a Hough transform for detecting linear structures in images, or performing a conversion from the spatial domain to the frequency domain or vice versa (group G06V 10/48);

- Techniques for combining individual low-level features into feature vectors by first calculating local statistics of low-level image features in a block of pixels and subsequently generating histograms or deriving other statistical measures in a local neighbourhood (group G06V 10/50);

- Multi-scale feature extraction algorithms for analysing image or video data at different resolutions; scale space analysis, e.g. wavelet decompositions (group G06V 10/52);

- Techniques for describing textures, such as convolution with Gabor wavelets, grey-level co-occurrence matrices or edge histograms (group G06V 10/54);

- Descriptors which capture colour properties of the image, such as colour histograms, possibly after conversion to a suitable colour space (group G06V 10/56);

- Descriptors which are specially designed for more than three colour channels, in particular for hyperspectral images which contain sensor readings in a multitude of different wavelengths not limited to the visual spectrum (group G06V 10/58);

- Descriptors obtained by integrating information about the imaging conditions, such as the position, the orientation and the spectral properties of light sources, diffuse or specular reflections at object surfaces, etc. (group G06V 10/60);

- Temporal descriptors derived from object movements, e.g. optical flow (group G06V 10/62).

Illustrative examples of subject matter classified in this place:

1.

2.

Quantifying local image properties, in particular the local gradient, using a local probe.

3.

Different types of features used for object recognition, e.g. contours, line segments, continuous lines.

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Recognition of scene and scene-specific elements | |

Character recognition; Recognising digital ink; Document-oriented image-based pattern recognition | |

Image or video recognition or understanding of human-related, animal-related or biometric patterns in image or video data | |

Recognition of fingerprints or palmprints | |

Recognition of vascular patterns | |

Recognition of human faces, e.g. facial parts, sketches or expressions within images or video data | |

Recognition of eye characteristics within image or video data, e.g. of the iris |

Attention is drawn to the following places, which may be of interest for search:

In this place, the following terms or expressions are used with the meaning indicated:

BoW | bag of words, a model originally developed for natural language processing; when applied to images, it represents an image by a histogram of visual words, each visual word representing a specific part of the feature space. |

edge | region in the image, at which the image exhibits a strong luminance gradient |

GLCM | grey-level co-occurrence matrix |

HOG | histogram of oriented gradients, a feature descriptor described by N. Dalal and B. Triggs |

SIFT | scale-invariant feature transform, a feature detection algorithm |

SURF | speeded up robust features, a feature descriptor |

This place covers:

Feature extraction techniques in which additional (invariant) information is calculated from certain image regions or patches or at certain points, which are visually more relevant in the process of comparison or matching.

Feature extraction techniques in which information from multiple local image patches can be combined into a joint descriptor by using an approach called "bag of features" (from its origin in text document matching), "bag of visual features" or "bag of visual words".

Notes – technical background

These notes provide more information about the technical subject matter that is classified in this place:

1. The image regions referred to in this place are called "salient regions", and the points are called "keypoints", "interest points" or "salient points". The information assigned to these regions or points is referred to as a local descriptor due to the inherent aspect of locality in the image analysis.

A local descriptor aims to be invariant to transformations of the depicted image object (e.g., invariant to affine transforms, object deformations or changes in image capturing conditions such as contrast or scene illumination, etc.).

A local descriptor may capture image characteristics across different scales for reliably detecting objects at different sizes, distances or resolutions. Typical descriptors of this kind include:

- Blob detectors (e.g. SIFT, SURF);

- Region detectors (e.g. MSER, SuperPixels).

At a salient point, the pixels in its immediate neighbourhood have visual characteristics, which are different from those of the vast majority of the other pixels. The visual appearance of patches around a salient point is, therefore, somewhat unique; this uniqueness increases the chance of finding a similar patch in other images showing the same object.

Generally, salient points can be expected to be located at boundaries of objects and at other image regions having a strong contrast.

2. A "bag of visual words" is a histogram, which indicates the frequencies of patches with particular visual properties; these visual properties are expressed by a codebook, which is commonly obtained by clustering a collection of typical feature descriptors (e.g. SIFT features) in the feature space; each bin of the histogram corresponds to one specific cluster in the codebook.

The process of generating a bag of features typically involves:

A training phase comprising:

- Extracting local features (e.g. SIFT) from a set of training images;

- Clustering these features into visual words (e.g. with k-means).

And an operating phase comprising:

- Extracting local features from a target image;

- Associating each feature with its closest visual word;

- Building a histogram of visual words over the whole image and match them with templates using a statistical distance (e.g. Mahalanobis distance).

Illustrative example of subject matter classified in this place:

Defining key-patches for different object classes from a training set, computing features from them and using a set of support vector machine [SVM] classifiers to detect those objects in new images.

This place does not cover:

Colour feature extraction |

Attention is drawn to the following places, which may be of interest for search:

Image preprocessing for image or video recognition or understanding involving the determination of a region or volume of interest [ROI, VOI] | |

Global feature extraction, global invariant features (e.g. GIST) | |

Local feature extraction; Extracting of specific shape primitives, e.g. corners, intersections; Computing saliency maps with interactions such as reinforcement or inhibition | |

Local feature extraction, descriptors computed by performing operations within image blocks (e.g. HOG, LBP) | |

Organisation of the matching process; Coarse-fine approaches, e.g. multi-scale approaches; using context analysis; Selection of dictionaries | |

Obtaining sets of training patterns, e.g. bagging | |

Extracting salient feature points for character recognition | |

Image retrieval systems using metadata |

The present group does not cover biologically inspired approaches of feature extraction based on modelling the receptive fields of visual neurons, such as Gabor filters, and convolutional neural networks [CNN].

The use of neural networks for image or video pattern recognition or understanding is classified in group G06V 10/82.

When a document presents details on a sampling technique and a clustering technique (bagging), then it should also be classified in group G06V 10/774.

Classical "bag of words" techniques remove most image localisation information (geometry).

When local features are matched directly from one image to another without involving a bagging technique (and thereby retaining geometric information), e.g. when triplets of features are matched using a geometric transformation with a RANSAC algorithm, then the document should also be classified in group G06V 10/75.

In this place, the following terms or expressions are used with the meaning indicated:

BOF | bag of features, see BOW |

BOVF | bag of visual features, see BOVF |

BOVW | bag of visual words, see BOW |

BOW | bag of words, a model originally developed for natural language processing; when applied to images, it represents an image by a histogram of visual words, each visual word representing a specific part of the feature space. |

MSER | maximally stable extremal regions, a technique used for blob detection |

RANSAC | random sample consensus, a popular regression algorithm |

SIFT | scale-invariant feature transform |

superpixels | sets of pixels obtained by partitioning a digital image for saliency assessment |

SURF | speeded up robust features |

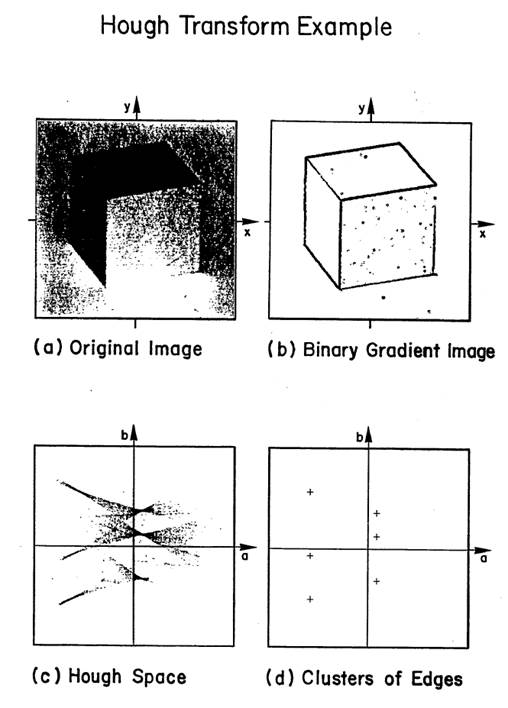

This place covers:

Techniques that map the image space into a parameter space using a transformation, such as the Hough transform.

Notes – technical background

These notes provide more information about the technical subject matter that is classified in this place:

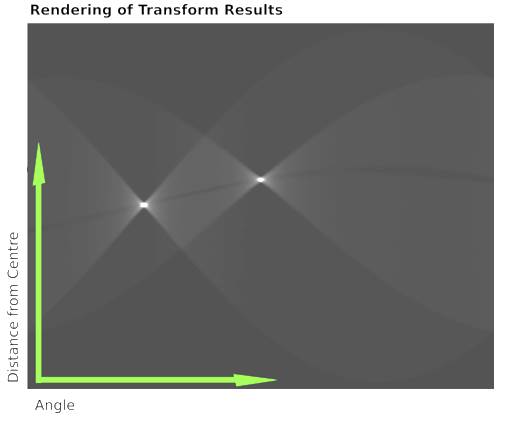

The object of the transformations classified in this place is to allow better interpretation and increase the separability between the pattern classes. Each dimension of the parameter space may be linked to a specific feature parameter of an object, e.g. its distance from the origin of the image coordinate system and its orientation. The function which performs the mapping to the parameter space may be invertible, i.e. the original representation could be recovered from the representation in the parameter space.

In case of the Hough transform, the parameter space is partitioned into individual bins, which form a so-called accumulator array (a two-dimensional histogram). A voting process maps features in images to individual bins of the accumulator array to finally determine the most probably parameter configuration by retrieving the bin, which has received the maximum bin count.

The generalised Hough transform can be applied for recognising arbitrary shapes, e.g. analytic curves such as lines and circles, or binary or grey-value pattern templates.

Other examples are the generalised Radon transform, the Trace transform, etc.

Illustrative examples of subject matter classified in this place:

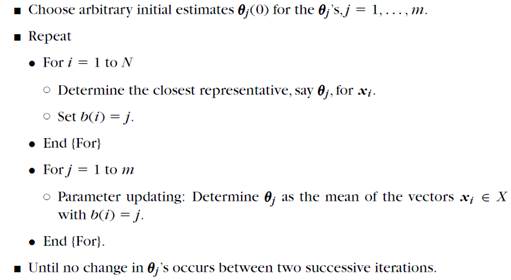

1.

A line in the plane is described by the parameters "d" and "ϕ" (distance to the origin and angle).

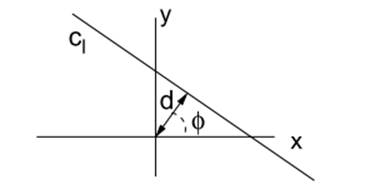

2A.

2B.

The two lines in the input image (fig. 2A) are mapped by the Hough transform in the parameter space (d,ϕ), and the representation leads to two distinct corresponding bright spots (fig. 2B).

3.

Detection of the visible edges of a cube as points in the Hough parameter space.

Attention is drawn to the following places, which may be of interest for search:

Global feature extraction by analysis of the whole pattern | |

Local feature extraction by analysis of parts of the pattern | |

Descriptors for shape, contour or point-related descriptors, e.g. SIFT | |

Image analysis in general |

Global feature extraction for image or video recognition or understanding is classified in group G06V 10/42.

Fourier-transform based representations, scale-space representations or wavelet-based representations have a different aim than improving the discriminability in the representation space. The Fourier transform is usually chosen for its geometric invariance properties in the Fourier space (e.g. translation invariance), while the scale-space and wavelet-based representations aim at capturing the variability of the pattern at multiple representation scales. For this reason, the latter two representations are classified as global feature extraction (group G06V 10/42) and, respectively, local feature extraction by scale-space analysis (group G06V 10/52).

This place covers:

Feature extraction techniques that perform operations within image blocks or by using histograms.

Summation of image intensity values and projection along an axis, e.g. by binning the values into a histogram, to arrive at a more compact feature representation.

Notes – technical background

These notes provide more information about the technical subject matter that is classified in this place:

The processing classified in this group might involve:

Block-based arithmetic or logical operations (including non-linear operators such as "max", "min", etc.);

Histograms of various measurements computed on a block-basis, e.g. histogram of oriented gradients [HOG];

Quantification of local geometric arrangements of features by block-based analysis, e.g. local binary patterns [LBP].

The blocks need not necessarily be arranged in a form of a grid. They can overlap or can be arranged in different geometrical patterns.

Frequently used local feature descriptors which are classified in this group include:

- Histogram of oriented gradients [HOG];

- Edge oriented histogram [EOH];

- Local binary pattern [LBP] and its refinements:

- Local Gabor binary pattern [LGBP];

- Local edge pattern [LEP];

- Heat kernel local binary pattern [HKLBP];

- Oriented local binary pattern [OLBP];

- Elliptical binary patterns [EBP];

- Local ternary Patterns [LTP];

- Probabilistic LBP [PLBP];

- Elongated quinary patterns [EQP];

- Thee-patch local binary patterns [TPLBP], four-patch local binary patterns [FPLBP];

- Local line binary patterns, etc.;

- Shape context;

- Gradient location and orientation histogram [GLOH];

- Local energy-based shape histogram [LESH];

- Oriented histogram of flows [OHF];

- Binary robust independent elementary features [BRIEF];

- Spin image.

Illustrative examples of subject matter classified in this place:

1.

The local oriented histograms of the gradients or HOG descriptor.

2.

The "shape context", a representation which performs binning of the contours of the shape in a circular-like pattern.

Attention is drawn to the following places, which may be of interest for search:

Global feature extraction by analysis of the whole pattern | |

Local feature extraction by analysis of parts of the pattern | |

Descriptors for shape, contour or point-related descriptors, e.g. SIFT | |

Image analysis in general |

In this place, the following terms or expressions are used with the meaning indicated:

BRIEF | binary robust independent elementary features |

EOH | edge oriented histogram |

GLOH | gradient location and orientation histogram |

HOG | histogram of oriented gradients |

LBP | local binary pattern |

LESH | local energy-based shape histogram |

OHF | oriented histogram of flows |

OLBP | oriented local binary pattern |

This place covers:

Scale-space representations which allow analysis of the image or video at multiple scales.

Notes – technical background

These notes provide more information about the technical subject matter that is classified in this place:

One primary goal of scale space methods is to achieve scale-invariance, i.e. being able to detect and recognise objects regardless of their size in the image. The scale is usually selected by convolving the image with a parametric "size function", also called a kernel. After the convolution, which typically blurs the fine-scale structures to a certain degree and which is often followed by a suitable sub-sampling of the blurred image, the actual feature extraction can take place at the selected scale.

A very common example of a kernel is the Gaussian kernel:

![]()

Given the input image f, the scale-space representation is obtained by convolving it with the Gaussian kernel: ![]() where t is the scale of analysis.

where t is the scale of analysis.

Scale space approaches can also use Gaussian derivatives, Laplacians of Gaussians, difference of Gaussians (DoG's), Gabor functions, wavelets (in continuous or discrete form, e.g. Haar, Daubechies).

Other alternatives to constructing a scale space which do not use a kernel exist, for instance, by applying to the image a diffusion equation ![]() starting with the initial condition

starting with the initial condition ![]() . In more general terms, these techniques analyse the differential intrinsic structure of the image in order to construct scale-space representations.

. In more general terms, these techniques analyse the differential intrinsic structure of the image in order to construct scale-space representations.

Techniques based on morphological scale-space construct representations at different scales using mathematical morphology methods, e.g. erosion, dilation, opening, closing.

Some other techniques construct multi-scale temporal representations based on the analysis of optical flow for feature extraction in video.

Multi-resolution methods implicitly provide representations at multiple scales; such methods are also classified in the present group in as far as they concern image or video feature extraction.

Illustrative examples of subject matter classified in this place:

1.

2.

Wavelets applied at different scales for the extraction of facial features.

This place does not cover:

Multi-scale boundary representations |

Attention is drawn to the following places, which may be of interest for search:

Descriptors for shape, contour or point-related descriptors, e.g. SIFT | |

Image analysis in general |

In this place, the following terms or expressions are used with the meaning indicated:

CWT | continuous wavelet transform |

DoG | difference of Gaussians |

DWT | discrete wavelet transform |

LoG | Laplacian of Gaussian |

Haar wavelets | family of wavelets constructed from rescaled square-shaped functions |

steerable filter | class of orientation-selective convolution kernels used for feature extraction that can be expressed via a linear combination of a small set of rotated versions of themselves. As an example, the oriented first derivative of a 2D Gaussian is a steerable filter |

This place covers:

Texture feature extraction for image or video recognition or understanding, either by identifying the boundaries of texture regions, or by analysing the content of the regions themselves.

Notes – technical background

These notes provide more information about the technical subject matter that is classified in this place:

Examples of algorithms used for feature extraction include:

- Statistical approaches which characterise the texture by local statistical measures such as "edgeness" (local variation of the image gradient), co-occurrence matrices and Haralick features, Laws texture energy, local histogram-based measures, autocorrelation, power spectrum, etc.;

- Structural approaches based on primitives, morphological operations or representations derived from them, or graph-based methods in which image quantities (e.g. pixels or local patches) are represented as graph nodes and are clustered together using graph-based clustering algorithms (e.g. graph-cuts) to identify texture regions;

- Model-based approaches such as auto-regressive models, fractal models, random fields, texton model;

- Transform methods such as Fourier (spectral) analysis, Gabor filters, wavelets, curvelet transform.

Illustrative example of subject matter classified in this place:

Texture feature extraction allows identification of an animal (zebra) in natural images.

Attention is drawn to the following places, which may be of interest for search:

Global feature extraction by analysis of the whole pattern | |

Local feature extraction by analysis of the parts of the pattern, e.g. by detecting edges, contours, loops, corners, intersections; Connectivity analysis, e.g. connected component analysis | |

Descriptors for shape, contour or point-related descriptors, e.g. SIFT | |

Colour feature extraction | |

Feature extraction related to illumination properties | |

Pattern recognition or image understanding, using clustering | |

Analysis of texture in general |

In this place, the following terms or expressions are used with the meaning indicated:

GLCH | grey-level co-occurrence histogram (synonym of GLCM) |

GLCM | grey-level co-occurrence matrix (Haralick invariant texture features) |

Texton | basic component of an image that may be recognised visually before the entire image is recognised, and that repeats itself to generate a texture region |

This place covers:

Colour feature extraction for image or video recognition or understanding.

Colour feature extraction based on colour invariance.

Colour feature extraction based on colour descriptors.

Notes – technical background

These notes provide more information about the technical subject matter that is classified in this place:

1. Colour invariance or, conversely, compensation of colour variations, is important for increasing the robustness in image matching or object recognition. Colour variations are often caused by changing lighting conditions (e.g. the colour of an object typically looks different under ambient light or when the object is being illuminated by an incandescent light bulb). They can also be caused by other factors (e.g. sun-tanned skin has a different colour than pale skin).

2. Colour descriptors associate colour information with various image structures such as points, contours or blobs/regions. Colour descriptors (e.g. colour histograms, average colour values etc.) are frequently used in image recognition or image understanding. Typical applications include feature detection based on a model of skin colour, traffic sign detection based on colour information, colour image object detection or video analysis for finding objects with a special colour (e.g. nudity detection).

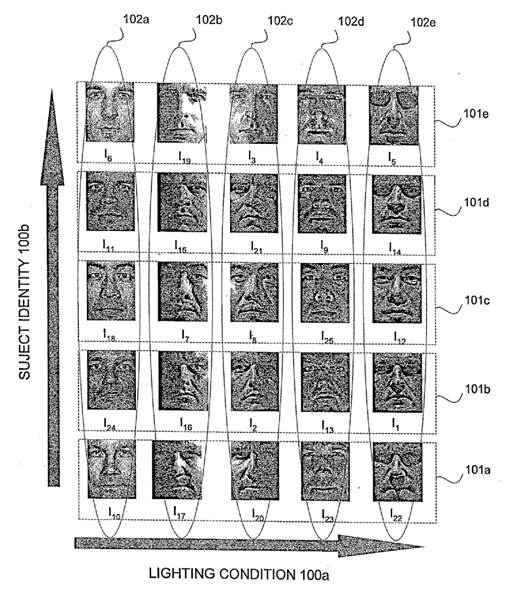

Illustrative examples of subject matter classified in this place:

1.

Colour histograms used to detect vegetation in natural scenes.

2.

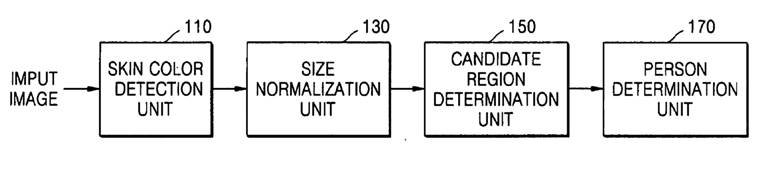

Detection of a person based on his/her skin colour.

3.

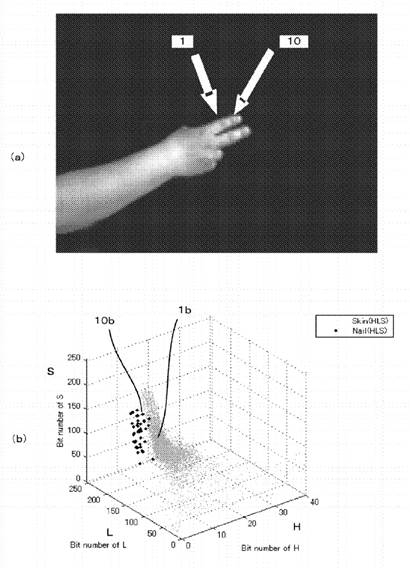

Discrimination between skin image regions and nail image regions by clustering in a three-dimensional colour space.

Attention is drawn to the following places, which may be of interest for search:

Global feature extraction by analysis of the whole pattern | |

Descriptors for shape, contour or point-related descriptors, e.g. SIFT | |

Local feature extraction by performing operations within image blocks or by using histograms | |

Image analysis for determination of colour characteristics | |

Colour picture communication systems |

In this place, the following terms or expressions are used with the meaning indicated:

CIELAB, L*a*b* | colour space representation using a lightness value L*, a value a* on a red-green axis and a value b* on a blue yellow axis; these axes reflect human perception |

CMYK | colour space representation using cyan, magenta, yellow and black |

HSB | colour space representation using separate channels for hue, saturation and brightness (also called HSV) |

HSL | colour space representation using separate channels for hue, saturation and lightness |

HSV | colour space representation using separate channels for hue, saturation and value (also called HSB) |

RGB | colour space representation using red, green and blue colour channels |

YCbCr | colour space representation using separate channels for a luminance component Y, a blue-difference component Cb, and a red-difference component Cr, respectively |

YUV | colour space representation using separate channels for a luminance component Y and two chrominance components U and V |

This place covers:

Techniques for feature extraction in hyperspectral image data.

Notes – technical background

These notes provide more information about the technical subject matter that is classified in this place: