CPC Definition - Subclass H04N

This place covers:

Television systems

- Television systems, whether general or specially adapted for colour television

- Details of television systems of general applicability, or specific to colour television, and also including scanning details of television systems

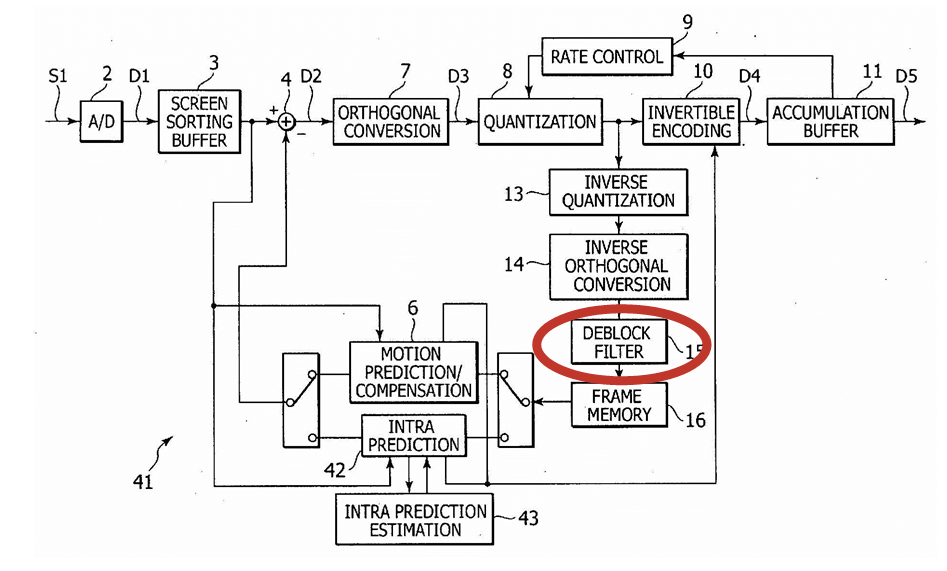

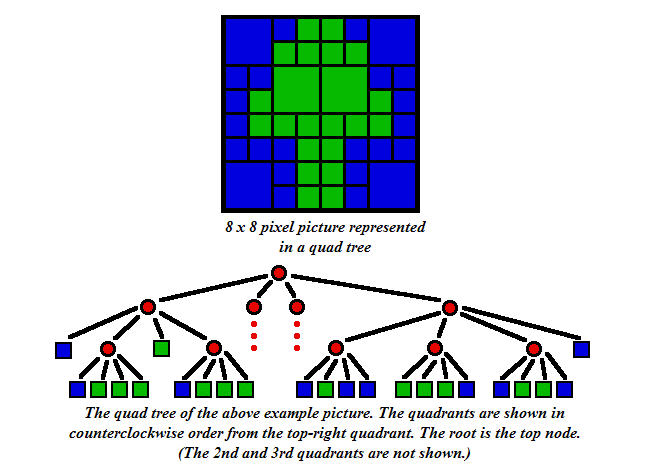

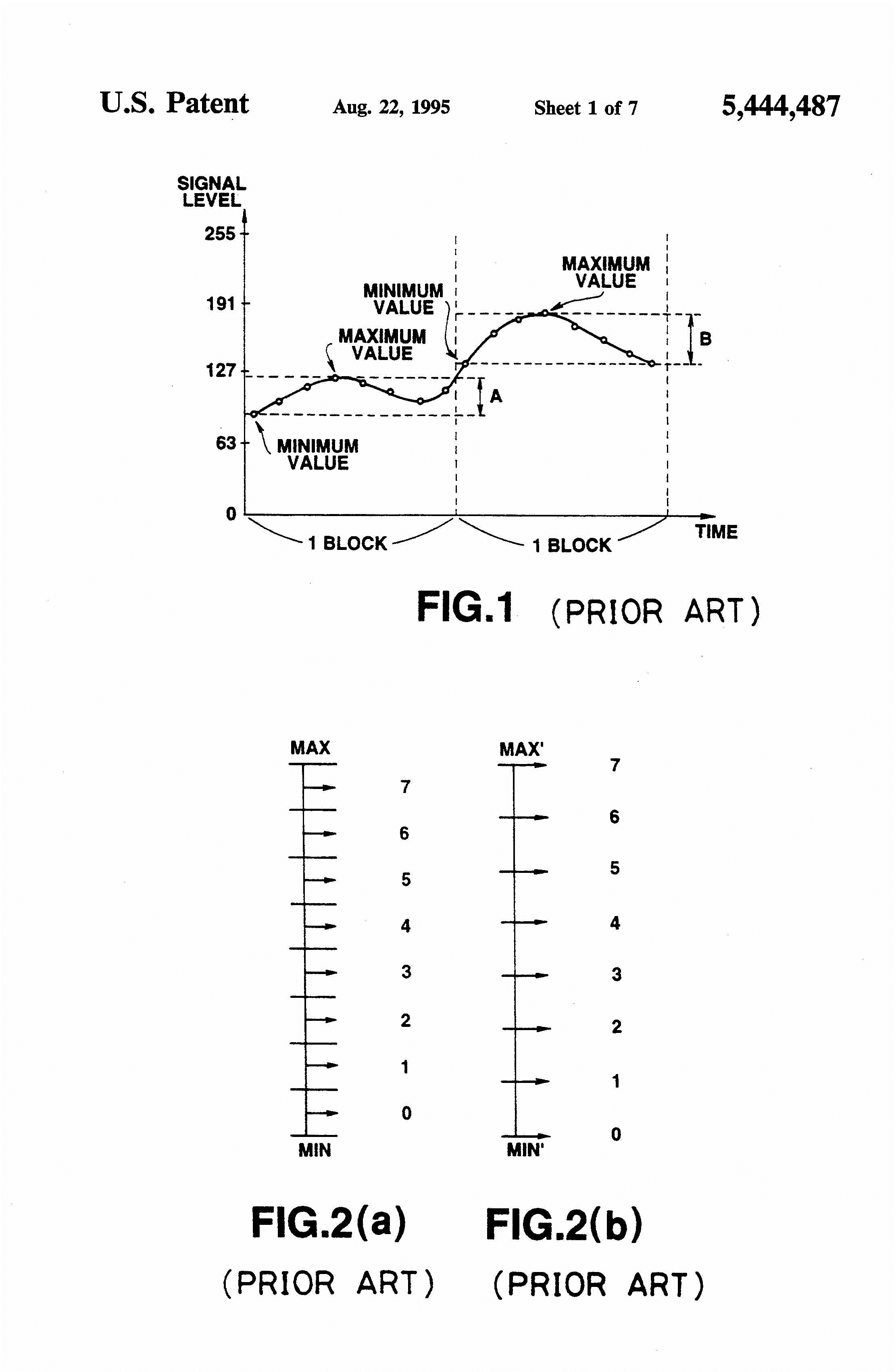

- Coding, decoding, compressing or decompressing of digital video signals

- Stereoscopic television systems, whether general or specially adapted for colour television, or details therefor

- Selective distribution of pictorial content, in particular interactive television or video on demand [VOD]

- Diagnosis, testing or measuring for television systems or details therefor

- Transmission of pictures or their transient or permanent reproduction either locally or remotely, by methods involving both the following steps:

- Step (a): the scanning of a picture, i.e. resolving the whole picture-containing area into individual picture-elements and the derivation of picture-representative electric signals related thereto, simultaneously or in sequence;

- Step (b): the reproduction of the whole picture-containing area by the reproduction of individual picture-elements into which the picture is resolved by means of picture-representative electric signals derived therefrom, simultaneously or in sequence;

- (In group H04N 1/00) Systems for the transmission or the reproduction of arbitrarily composed pictures or patterns in which the local light variations composing a picture are not subject to variation with time, e.g. documents (both written and printed), maps, charts, photographs (other than cinematograph films);

- Circuits specially designed for dealing with pictorial communication signals, e.g. television signals, as distinct from merely signals of a particular frequency range.

Other pictorial communication

- Scanning, transmission or reproduction of documents or the like, in particular facsimile transmission

- Details pertaining to scanning, transmission or reproduction of documents or the like, in particular details of facsimile transmission

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Instruments for performing medical examinations of the interior of cavities or tubes of the body combined with television appliances | |

Arrangements of television sets in vehicles; Arrangement of controls thereof | |

Mounting of cameras operative during drive of a vehicle | |

Arrangements of cameras in aircraft | |

Controlling or regulating single-crystal growth by pulling from a melt, using television detectors | |

Inspecting textile materials by television means | |

Scanning a visible indication of a measured value and reproducing this indication at a remote place, e.g. on the screen of a cathode-ray tube | |

Burglar, theft, or intruder alarms using television cameras | |

Structural combination of reactor core or moderator structure with television camera |

Attention is drawn to the following places, which may be of interest for search:

Video games, i.e. games using an electronically generated display having two or more dimensions | |

Systems for the reproduction according to the above-mentioned step (b) of pictures comprising alphanumeric or like character forms and involving the generation according to the above-mentioned step (a) of picture-representative electric signals from a pre-arranged assembly of such characters, or records thereof, forming an integral part of the systems | |

Printing, duplication or marking processes, or materials therefor | |

Systems for the reproduction according to step (b) of Note (1) of pictures comprising alphanumeric or like character forms but involving the production of the EQUIVALENT of a signal which would be derived according to the above-mentioned step (a), e.g. by cams, punched card or tape, coded control signal, or other means | |

Systems for the direct photographic copying of an original picture in which an electric signal representative of the picture is derived according to the said step (a) and employed to modify the operation of the system, e.g. to control exposure | |

Systems in which legible alphanumeric or like character forms are analysed according to step (a) of Note (1) to derive an electric signal from which the character is recognised by comparison with stored information | |

Image data processing or generation, in general | |

Circuits or other parts of systems which form the subject of other subclasses | |

Broadcasting |

In this place, the following terms or expressions are used with the meaning indicated:

television systems | Systems for the transmission and reproduction of arbitrarily composed pictures in which the local light variations composing a picture MAY change with time, e.g. natural "live" scenes, recordings of such scenes such as cinematograph films |

CCD | Charge-coupled device, that is, a device made up of semiconductors arranged in such a way that the electric charge output of one semiconductor charges an adjacent one |

MPEG | Moving Picture Experts Group; a family of standards used for coding audio-visual information in a digital compressed format |

NTSC | National Television System Committee |

PAL | Phase Alternating Line |

Picture signal generator | Circuits or arrangements receiving as input an image of a scene and delivering as output an electric signal that contains all the information required to reproduce the image of the scene |

Picture reproducer | Circuits or arrangements receiving as input an electric signal characteristic of an image of a scene and producing as output a visual display of that image |

SECAM | Séquentiel couleur à mémoire (Sequential Colour with Memory) |

This place covers:

- systems for the transmission or the reproduction of arbitrarily composed pictures or patterns in which the local light variations composing a picture are not subject to variation with time, e.g. documents (both written and printed), maps, charts, photographs (other than cinematograph films);

- transmission of time-invariant pictures, e.g. documents (both written and printed), maps, charts, photographs (other than cinematograph films), or their transient or permanent storage or reproduction either locally or remotely by methods involving both scanning and reproduction;

- systems involving the generation, transmission, storage or reproduction of time-invariant pictures; image manipulation for such reproduction on particular output devices;

- devices applied to the transmission, storage or reproduction of time-invariant pictures, e.g. facsimile apparatus, digital copiers, (digital) scanners, multifunctional peripheral devices;

- circuits specially designed for dealing with pictorial communication signals, e.g. facsimile signals or colour image signals, as distinct from merely signals of a particular frequency range;

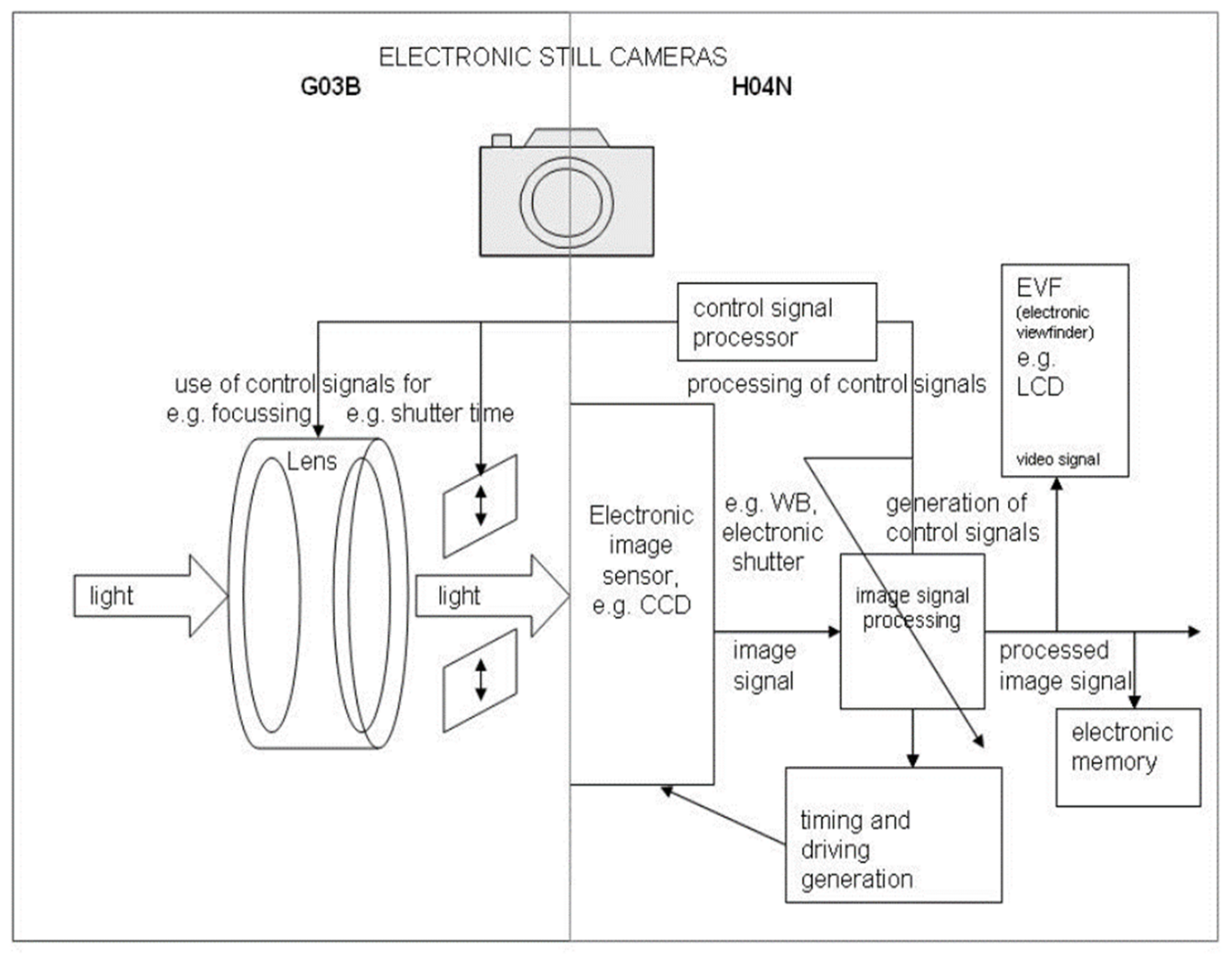

- storage or transmission aspects of still video cameras.

- H04N 1/00 is an application place for a large number of IT technologies, which are covered per se by the corresponding functional places

- Image servers, hosts and clients use internally specific computing techniques. Corresponding techniques used in general computing are found in G06F or G06Q. This concerns data storage, software architectures, error detection or correction in general computing, monitoring, image retrieval, browsing, Internet browsing, computer security, billing or advertising

- Image servers, hosts and clients use specific telecommunication techniques for the image transmission process. Corresponding techniques used in generic telecommunication networks are found in subclasses H04B, H04H, H04L, H04M, H04W. This concerns monitoring or testing of transmitters/receivers, broadcast or multicast, maintenance, administration, testing, data processing in data switching networks, home networks, real-time data network services, data network security, applications for data network, wireless networks per se

- Image scanners use specific scanning techniques. Corresponding techniques are found in G02B. This concerns optical scanning systems

- General image processing techniques are found in G06T

Attention is drawn to the following places, which may be of interest for search:

Scanning details of electrically scanned solid-state devices | |

Scanning of motion picture films | |

Television signal recording | |

Circuits for processing colour television signals | |

Capture aspects of still video cameras | |

Printing mechanisms | |

Supporting or handling copy material in printers | |

Handling thin or filamentary material | |

Colorimetry | |

Electrography; Magnetography | |

Handling of copy material in photocopiers | |

Constructional details of equipment or arrangements specially adapted for portable computer application | |

Power management in computer systems | |

Input and output arrangements for computers | |

Interaction techniques for graphical user interfaces | |

Storage management | |

Digital output to printers | |

Addressing or allocating within memory systems or architectures | |

Image retrieval | |

Retrieval from Web | |

Computer security | |

Sensing record carriers | |

Producing a permanent visual presentation of output data | |

Payment schemes, Commerce | |

General-purpose image data processing | |

Image watermarking | |

Geometric image transformation in the plane of the image | |

Image enhancement or restoration | |

Image analysis | |

Image coding | |

Editing figures and text; Combining figures or text | |

Methods or arrangements for recognising scenes | |

Character recognition, recognising digital ink or document-oriented image-based pattern recognition | |

Methods or arrangements for recognising human body or animal bodies or body parts | |

Methods or arrangements for acquiring or recognising human faces, facial parts, facial sketches, facial expressions | |

Access-control involving the use of a pass | |

Access-control by means of a password | |

Coding, decoding or code conversion, for error detection or error correction | |

Monitoring or testing of transmitters/receivers | |

Broadcast communication | |

Secret communication; Jamming of communication | |

Arrangements for detecting or preventing errors in the information received | |

Arrangements for secret or secure communication; Encryption | |

Charging arrangements in data networks | |

Data processing in data switching networks | |

Data network security | |

Real-time data network services | |

Applications for data network services | |

Simultaneous speech and telegraphic or other data transmission over the same conductors | |

Telephonic metering arrangements | |

Connection management in wireless communications networks |

In this main group Indexing Codes are used:

The numbering of the codes is based on the numbering of the subgroups;

- codes, e.g. H04N 1/0455, which have a numbering the first part of which corresponds to a subgroup which is at the tip end of a subgroup branch, e.g. H04N 1/0402, are used to classify detailed information and may be applied to that subgroup, e.g. H04N 1/0455 may be used in combination with H04N 1/0402;

- codes, e.g. H04N 2201/0402, which have a numbering the first part of which corresponds to a subgroup which is at the head or node end of a subgroup branch, e.g. H04N 1/04, are used to classify orthogonal information and may be applied to any subgroups in the corresponding subgroup branch, e.g. H04N 2201/0434 may be used in combination with H04N 1/0402 and/or H04N 1/1013.

In this place, the following terms or expressions are used with the meaning indicated:

Additional information | any information other than the still picture itself, but nevertheless associated with the still picture |

Documents or the like | documents (both written and printed), maps, charts, photographs (other than cinematograph films) |

Main-scan | the first completed scan |

Mode | way or manner of operating |

Scanning | the displacement of active reading or reproducing elements relative to the original or reproducing medium, or vice versa |

Still picture apparatus | any apparatus generating, storing, transmitting or reproducing non-transient images |

Single-mode communication | a communication in which the mode is not changed |

In patent documents, the following abbreviations are often used:

IP | Internet Protocol |

OS | Operating System |

PC | Personal Computer |

GPS | Global Positioning System |

MFP | Multifunctional peripheral |

MFD | Multifunctional device |

RFID | Radio-frequency identification |

In patent documents, the following words/expressions are often used as synonyms:

- Complex device and Multifunctional peripheral

- Complex machine and Multifunctional peripheral

- Hybrid device and Multifunctional peripheral

- Hybrid machine and Multifunctional peripheral

- Digital camera and Still video camera

- Metadata and Additional information

- Fast scan and Main scan

- Slow scan, Subscan and Sub scan

This place does not cover:

Determining the necessity for preventing unauthorised reproduction | |

Detecting scanning velocity or position | |

Fault detection in circuits or arrangements for control supervision between transmitter and receiver or between image input and image output device | |

Discrimination between different image types | |

Discrimination between the two tones in the picture signal of a two-tone original | |

Control or modification of tonal gradation or extreme levels, e.g. dependent on the contents of the original or references outside the picture |

This place does not cover:

Transmitting or receiving computer data via an image communication device | |

Transmitting or receiving image data via a computer or computer network | |

Circuits or arrangements for control or supervision between transmitter and receiver |

Attention is drawn to the following places, which may be of interest for search:

Data processing systems for commerce |

Attention is drawn to the following places, which may be of interest for search:

Message switching systems, e.g. e-mail systems |

This place does not cover:

Push-based network services |

Attention is drawn to the following places, which may be of interest for search:

Portable computers comprising integrated printing or scanning devices |

Typically with apparatus of the kind classified in G03G.

Typically with apparatus of the kind classified in B41J or G06K 15/00.

Typically with apparatus of the kind classified in other H04N main groups.

This place does not cover:

Television studio circuitry, devices or equipment per se |

Typically with apparatus of the kind classified in H04N 5/222 and subgroups.

Attention is drawn to the following places, which may be of interest for search:

Supporting or handling copy material in printers | |

Handling thin or filamentary material | |

Handling of copy material in photocopiers |

This place does not cover:

Marking an unauthorised reproduction with identification | |

Restricting access |

Attention is drawn to the following places, which may be of interest for search:

Preventing copies being made in photocopiers |

Attention is drawn to the following places, which may be of interest for search:

Details of scanning arrangements |

Attention is drawn to the following places, which may be of interest for search:

Television cameras |

Attention is drawn to the following places, which may be of interest for search:

Light-guides per se |

This place does not cover:

Means for collecting light from a line or an area of the original and for guiding it to only one or a relatively low number of picture element detectors |

This place does not cover:

Composing, repositioning or otherwise modifying originals |

Attention is drawn to the following places, which may be of interest for search:

Details of scanning heads | |

Optical scanning systems | |

Projection optics in photocopiers | |

Character printers involving the fast moving of a light beam in two directions |

Where possible, both the main-scanning and sub-scanning arrangements should be classified, using a class for the invention and an Indexing Code for subsidiary information. Manual scanning and scanning using two-dimensional arrays are exceptions to this rule.

In this place, the following terms or expressions are used with the meaning indicated:

Main scan direction | The direction of the first completed scan line |

This place does not cover:

Scanning different formats; Scanning with different densities of dots per unit length, e.g. different numbers of dots per inch (dpi); Conversion of scanning standards | |

The scanning speed being dependent on content of picture |

Attention is drawn to the following places, which may be of interest for search:

Detection, control or error compensation of scanning velocity or position in photographic character printers involving the fast moving of an optical beam in the main scan direction |

Where possible, when classifying in this subgroup, details of the main and subscan should also be classified using other subgroups of H04N 1/04.

Where possible, when classifying in this subgroup, details of the main scan should also be classified using other subgroups of H04N 1/04.

Where possible, when classifying in this subgroup, details of the subscan should also be classified using other subgroups of H04N 1/04.

Attention is drawn to the following places, which may be of interest for search:

Feeding a sheet in the subscanning direction by rotation about its axis only |

Attention is drawn to the following places, which may be of interest for search:

Arrangements for the main-scanning |

This place does not cover:

Optical details of the scanning system |

Attention is drawn to the following places, which may be of interest for search:

Character printers involving the fast moving of a light beam in two directions |

This place does not cover:

Optical details of the scanning system |

Attention is drawn to the following places, which may be of interest for search:

Optical printers using dot sequential main scanning by means of a light deflector | |

Character printers involving the fast moving of an optical beam in the main scan direction |

This place does not cover:

Scanning arrangements using multi-element arrays |

Attention is drawn to the following places, which may be of interest for search:

Character printers involving the fast moving of an optical beam in the main scan direction |

Attention is drawn to the following places, which may be of interest for search:

Optical printers using arrays of radiation sources | |

Photographic character printers simultaneously exposing more than one point |

Attention is drawn to the following places, which may be of interest for search:

Photographic character printers simultaneously exposing more than one point on more than one main scanning line |

Attention is drawn to the following places, which may be of interest for search:

Details of the sub-scanning | |

Photographic character printers simultaneously exposing more than one point on one main scanning line |

This place covers:

Where the storage results in a record that is not merely transient.

This place does not cover:

Storage resulting in a transient record, for control or supervision between image input and image output device | |

Composing, repositioning or otherwise modifying originals | |

Bandwidth or redundancy reduction |

In this place, the following terms or expressions are used with the meaning indicated:

Intermediate | having no limiting meaning |

Attention is drawn to the following places, which may be of interest for search:

Image capture in digital cameras | |

Still video cameras |

This place covers:

Arrangements providing the output copy of a document in a system performing the scanning, transmission and reproduction of documents or the like, e.g. printing arrangements integrated within a facsimile device

Attention is drawn to the following places, which may be of interest for search:

Details of scanning heads | |

Scanning arrangements | |

Perforating or marking objects by electrical discharge | |

Selective printing mechanisms per se |

Attention is drawn to the following places, which may be of interest for search:

Magnetography |

This place covers:

Where the control or supervision is between two devices that can be embedded within the same apparatus or be embedded in multiple apparatuses.

Where the front-end device has images that are intended to be sent to the back-end device and the control can be from either the front-end device, the back-end device or both.

For example:

- a still-image camera or scanner and another separate device (e.g. printer, display, server)

- a still-image camera or multi-function peripheral [MFP] and its internal memory

This place does not cover:

Circuits or arrangements for blanking or otherwise eliminating unwanted parts of pictures | |

Composing, repositioning or otherwise modifying originals |

Attention is drawn to the following places, which may be of interest for search:

Devices for controlling television cameras or cameras comprising electronic image sensors | |

Circuits or arrangements for control or supervision between transmitter and receiver or between image input and image output device | |

Digital output from electrical digital data processing unit to print unit | |

Real-time session management in data packet switching networks | |

Session management in data packet switching networks |

Attention is drawn to the following places, which may be of interest for search:

Automatic arrangements for answering calls in telephonic equipment |

Attention is drawn to the following places, which may be of interest for search:

Telephonic equipment for signalling identity of wanted subscriber |

Attention is drawn to the following places, which may be of interest for search:

Television systems for the transmission of television signals using pulse code modulation, using bandwidth reduction involving the insertion of extra data | |

Television bitstream transport arrangements involving transporting of additional information | |

Broadcast communication systems specially adapted for using meta-information |

In patent documents, the following words/expressions are often used as synonyms:

- "metadata" for "additional information"

Attention is drawn to the following places, which may be of interest for search:

Image watermarking | |

Audio watermarking |

This place does not cover:

In colour image data |

Attention is drawn to the following places, which may be of interest for search:

Transmission of digital television signals using bandwidth reduction and involving the insertion of extra data |

This place covers:

Where the storage results in a transient record, e.g. buffering.

Attention is drawn to the following places, which may be of interest for search:

Store-and-forward switching systems in transmission of digital information |

Attention is drawn to the following places, which may be of interest for search:

Coding, decoding or code conversion, for error detection or error correction | |

Arrangements for detecting or preventing errors in received digital information |

Attention is drawn to the following places, which may be of interest for search:

Simultaneous speech and other data transmission over the same conductors in telephonic communication systems |

Attention is drawn to the following places, which may be of interest for search:

Negotiation of communication capabilities for communication control in transmission of digital information |

Attention is drawn to the following places, which may be of interest for search:

Systems modifying digital information transmission characteristics according to link quality |

Attention is drawn to the following places, which may be of interest for search:

Coin-freed or like apparatus per se | |

Telephonic metering |

This place covers:

Obsolete subject matter: analog facsimile communication.

Attention is drawn to the following places, which may be of interest for search:

Synchronisation of pulses |

This place covers:

Removing parts of the image, e.g. smudges; extracting part of an image; screening out unwanted image regions; removing finger shadows; removing punched holes when copying perforated paper.

This place does not cover:

Composing, repositioning or otherwise modifying originals |

Drop-out of parts of the image involving a change of color is covered in H04N 1/62; form drop-out data is covered in H04N 1/4177.

This place covers:

Composing, e.g. combining two images. Reading of books and correction of geometric distortions caused by a curved original (book page). Geometric modifications caused by warping of the image.

Attention is drawn to the following places, which may be of interest for search:

Photoelectric composing of characters | |

Editing, producing a composite image by copying with focus on copy machine | |

Text processing | |

Pagination | |

Image data processing or generation, in general | |

Geometric modification and warping in general | |

Teaching/communicating with deaf, blind, mute people |

This place covers:

E.g. combining a chart, text or logo (low resolution/bit depth) with a photo (high resolution/bit depth), or foreground with background, with the focus on image processing. Also high dynamic range (HDR) imagery when combined with H04N 1/407.

Attention is drawn to the following places, which may be of interest for search:

Inserting foreground into background with focus on camera | |

Combining objects while rendering PDL |

This place covers:

Image cropping, cutting out, masking with arbitrary shape.

Attention is drawn to the following places, which may be of interest for search:

Selection / ordering of images (from movies) |

This place covers:

The user defines the corner coordinates of the image portion to be extracted for repositioning. Covers cutting out and cropping, where the number of points defined is important. Also a low-resolution pre-scan followed by a high-resolution main scan of part of the platen.

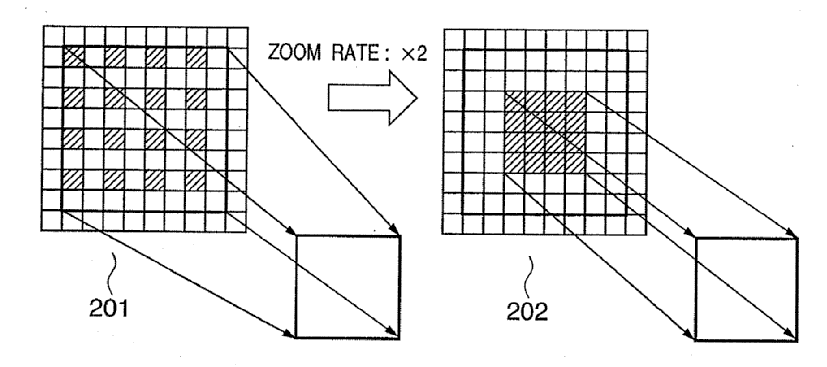
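As an illustration of the pre-scan/main-scan workflow described above, the following Python sketch maps a crop rectangle that a user picks on a low-resolution pre-scan to the pixel window that a high-resolution main scan would need. The function name, the two-corner input format and the convention that both corners are inclusive pixels are assumptions made for this example only.

```python
import math


def prescan_to_mainscan_rect(corners, prescan_dpi, mainscan_dpi):
    """Map two opposite corners picked on a pre-scan to a main-scan pixel window.

    corners: ((x0, y0), (x1, y1)) in pre-scan pixels, both corners inclusive
    (hypothetical input format). Returns (left, top, right, bottom) in
    main-scan pixels, with right/bottom exclusive.
    """
    (x0, y0), (x1, y1) = corners
    scale = mainscan_dpi / prescan_dpi
    # The user may pick the corners in any order.
    left, right = sorted((x0, x1))
    top, bottom = sorted((y0, y1))
    # Round outward so the high-resolution window covers every selected pre-scan pixel.
    return (
        math.floor(left * scale),
        math.floor(top * scale),
        math.ceil((right + 1) * scale),
        math.ceil((bottom + 1) * scale),
    )


# Only this window of the platen would then be scanned at full resolution.
print(prescan_to_mainscan_rect(((10, 20), (49, 79)), 75, 600))
```

With a 75 dpi pre-scan and a 600 dpi main scan, the corners (10, 20) and (49, 79) map to the window (80, 160, 400, 640).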

This place covers:

Part of the image is enlarged or reduced to fit its new position, e.g. reduction to fit the medium, zooming, belief maps.

This place does not cover:

Corrections or small zoom factors | |

Enlarging or reducing |

This place covers:

Combining two images scanned by a scanner whose scanning area does not cover the entire original, e.g. panoramic image creation, combination or stitching. The combination is done digitally, not mechanically.
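A minimal sketch of digital stitching, assuming two grayscale strips of equal height that differ only by a horizontal overlap (no rotation, scaling or exposure difference). `find_overlap` and `stitch` are hypothetical helpers: the overlap width is chosen by the lowest mean squared difference between the shared columns, and the shared region is averaged. Practical stitching usually needs full image registration, which is covered by the places listed below.

```python
def find_overlap(left, right, min_overlap=1, max_overlap=None):
    """Return the number of overlapping columns k that best aligns the right
    edge of `left` with the left edge of `right` (lowest mean squared difference)."""
    width_l, width_r = len(left[0]), len(right[0])
    if max_overlap is None:
        max_overlap = min(width_l, width_r)
    if min_overlap < 1:
        raise ValueError("min_overlap must be at least 1")
    best_k, best_err = None, float("inf")
    for k in range(min_overlap, max_overlap + 1):
        err = 0
        for row_l, row_r in zip(left, right):
            for a, b in zip(row_l[width_l - k:], row_r[:k]):
                err += (a - b) ** 2
        err /= k * len(left)  # normalise so different overlap widths are comparable
        if err < best_err:
            best_k, best_err = k, err
    return best_k


def stitch(left, right, k):
    """Join two strips that share k columns, averaging the shared region."""
    width_l = len(left[0])
    out = []
    for row_l, row_r in zip(left, right):
        shared = [(a + b) // 2 for a, b in zip(row_l[width_l - k:], row_r[:k])]
        out.append(row_l[:width_l - k] + shared + row_r[k:])
    return out


full = [[0, 10, 20, 30, 40, 50], [5, 15, 25, 35, 45, 55]]
left = [row[:4] for row in full]   # first partial scan
right = [row[2:] for row in full]  # second partial scan, overlapping by 2 columns
k = find_overlap(left, right)
print(k, stitch(left, right, k) == full)
```

On flat or repetitive content several overlaps can score equally well, which is one reason real systems use feature-based alignment instead of this brute-force search.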

Attention is drawn to the following places, which may be of interest for search:

Mechanical corrections | |

Mosaic images or mosaicing | |

Determination of transform parameters for the alignment of images, i.e. image registration |

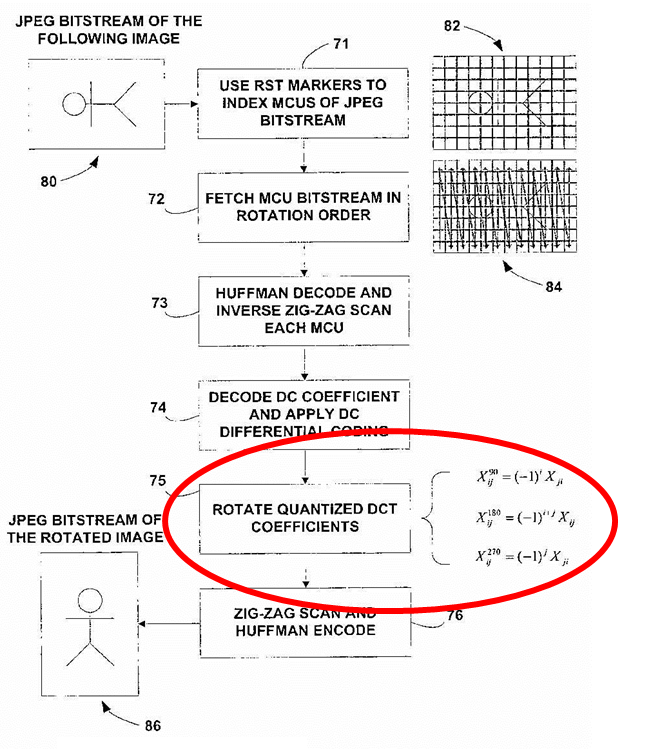

This place covers:

Rotating the image by any amount, e.g. 90°. Also covers rotation when printing double-sided or four images on one page.
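The common quarter-turn case can be sketched in a few lines of Python, assuming the image is held as a list of rows; the function names are illustrative only. A page placed sideways in a 2-up or 4-up layout is typically turned by one quarter turn in this way.

```python
def rotate90_cw(image):
    """Rotate a row-major image (list of rows) 90 degrees clockwise."""
    # Reversing the row order and then transposing gives a clockwise quarter turn.
    return [list(row) for row in zip(*image[::-1])]


def rotate_quarter_turns(image, turns):
    """Rotate clockwise by turns * 90 degrees; negative turns rotate anticlockwise."""
    for _ in range(turns % 4):
        image = rotate90_cw(image)
    return image


page = [[1, 2, 3],
        [4, 5, 6]]
print(rotate90_cw(page))              # clockwise
print(rotate_quarter_turns(page, -1))  # anticlockwise
```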

Attention is drawn to the following places, which may be of interest for search:

When focus is on image processing. |

This place covers:

Limited to detecting and correcting skew, i.e. errors introduced during scanning, normally of less than 45°.

See also G06V 10/243.
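One simple way to estimate skew is a least-squares line fit through points sampled along a document edge; the sketch below assumes such edge points have already been detected (edge detection itself is not shown). The 45° limit reflects the range given above, while the function names and the rejection behaviour are assumptions for illustration.

```python
import math


def estimate_skew_degrees(edge_points, max_skew=45.0):
    """Estimate document skew from (x, y) points sampled along its top edge.

    Fits y = a + b*x by least squares and returns atan(b) in degrees. With image
    coordinates (y grows downwards) a positive angle means the edge drops
    towards the right.
    """
    n = len(edge_points)
    mean_x = sum(x for x, _ in edge_points) / n
    mean_y = sum(y for _, y in edge_points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in edge_points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in edge_points)
    if sxx == 0:
        raise ValueError("edge points must span more than one x position")
    angle = math.degrees(math.atan(sxy / sxx))
    # Larger angles are better treated as image rotation than as scanning skew.
    if abs(angle) >= max_skew:
        raise ValueError(f"{angle:.1f} degrees is outside the skew range")
    return angle


def deskew_point(x, y, angle_deg, cx=0.0, cy=0.0):
    """Rotate a point by -angle_deg around (cx, cy) to undo the estimated skew."""
    a = math.radians(-angle_deg)
    dx, dy = x - cx, y - cy
    return (cx + dx * math.cos(a) - dy * math.sin(a),
            cy + dx * math.sin(a) + dy * math.cos(a))


angle = estimate_skew_degrees([(0, 0), (10, 1), (20, 2), (30, 3)])
print(round(angle, 2), deskew_point(10, 1, angle))
```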

Attention is drawn to the following places, which may be of interest for search:

Mechanical skew detection |

This place covers:

Mainly the mechanical enlargement process for the whole image, e.g. from DIN A4 to DIN A3 (formats larger than DIN A4).

This subgroup takes precedence over H04N 1/04.

This place covers:

Digitally enlarging or reducing images with a change of resolution including e.g. interpolation (digital).

Note that H04N 1/40068 covers resolution conversion where the physical size is irrelevant.
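Bilinear interpolation is one common way of computing the new pixel values when an image is digitally enlarged or reduced. The sketch below assumes an 8-bit grayscale image stored as a list of rows and aligns pixel centres between the source and the output grid; it illustrates the general idea only and is not a method prescribed by this group. An A4 to A3 enlargement, for instance, corresponds to a linear factor of about 1.41.

```python
def resize_bilinear(image, new_w, new_h):
    """Enlarge or reduce a grayscale image (list of rows) by bilinear interpolation."""
    old_h, old_w = len(image), len(image[0])
    out = []
    for j in range(new_h):
        # Map the output pixel centre back into source coordinates, clamped to the image.
        y = min(max((j + 0.5) * old_h / new_h - 0.5, 0.0), old_h - 1)
        y0 = int(y)
        y1 = min(y0 + 1, old_h - 1)
        fy = y - y0
        row = []
        for i in range(new_w):
            x = min(max((i + 0.5) * old_w / new_w - 0.5, 0.0), old_w - 1)
            x0 = int(x)
            x1 = min(x0 + 1, old_w - 1)
            fx = x - x0
            # Interpolate horizontally on the two neighbouring rows, then vertically.
            top = image[y0][x0] * (1 - fx) + image[y0][x1] * fx
            bottom = image[y1][x0] * (1 - fx) + image[y1][x1] * fx
            row.append(round(top * (1 - fy) + bottom * fy))
        out.append(row)
    return out


print(resize_bilinear([[0, 100]], 4, 1))
```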

Attention is drawn to the following places, which may be of interest for search:

Interpolation in general |

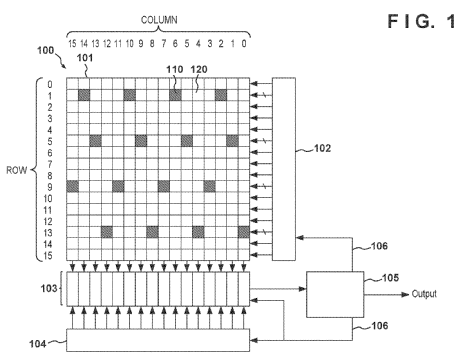

This place covers:

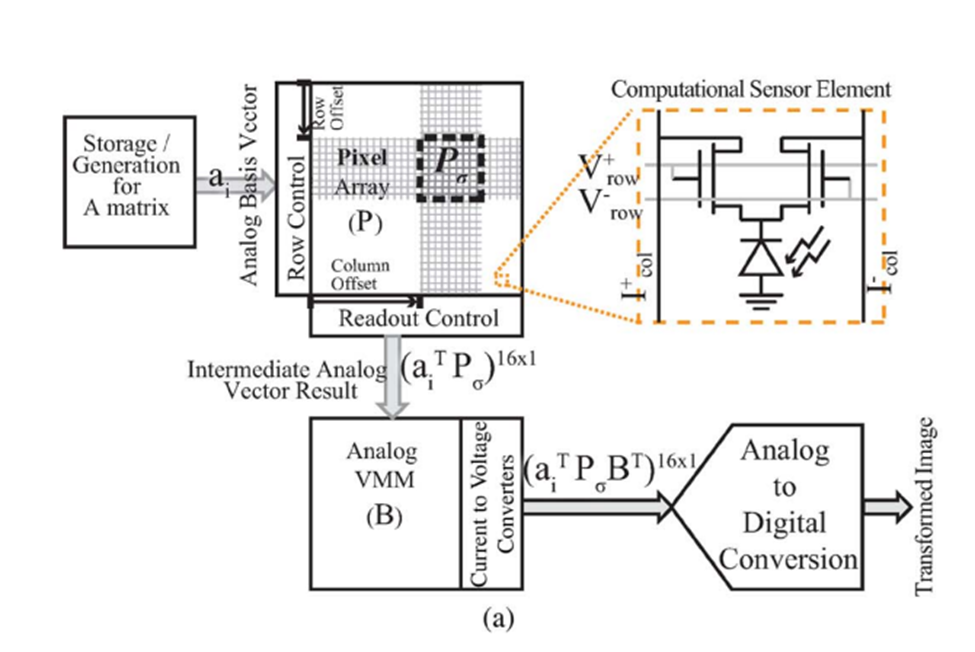

General documents regarding quality aspects, quantization (errors), scanning in either B/W or color, video printers, frame grabbers, memory arrangement or management, and smear reduction for CCDs.

This place does not cover:

Composing, repositioning or otherwise modifying originals |

Attention is drawn to the following places, which may be of interest for search:

Moving images, e.g. television |

This place covers:

Converting coloured documents into B&W so that they can be printed on monochrome printers, e.g. rendering green as stripes and red as dots. Also converting from RGB to grayscale via thresholding.
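A rough sketch of such a conversion in Python: strongly green pixels become horizontal stripes, strongly red pixels a sparse dot grid, and everything else is converted to luminance with the ITU-R BT.601 weights and thresholded. The colour classification limits (150 and 100), the patterns and the threshold of 128 are hypothetical values chosen for illustration.

```python
def colour_to_bilevel(rgb_image, threshold=128):
    """Return a bilevel image (1 = black dot) from rows of (r, g, b) tuples."""
    out = []
    for y, row in enumerate(rgb_image):
        out_row = []
        for x, (r, g, b) in enumerate(row):
            if g > 150 and r < 100 and b < 100:
                # Hypothetical rule: strongly green areas -> horizontal stripes.
                out_row.append(1 if y % 2 == 0 else 0)
            elif r > 150 and g < 100 and b < 100:
                # Hypothetical rule: strongly red areas -> sparse dot grid.
                out_row.append(1 if x % 2 == 0 and y % 2 == 0 else 0)
            else:
                # Luminance (BT.601 weights), then a fixed threshold.
                gray = 0.299 * r + 0.587 * g + 0.114 * b
                out_row.append(1 if gray < threshold else 0)
        out.append(out_row)
    return out


print(colour_to_bilevel([[(255, 255, 255), (0, 0, 0)]]))
```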

This place covers:

Writing: control of print heads, stylus heads and electrostatic heads. Continuous driving signals.

This group overlaps with G06K 15/12.

This place does not cover:

Compensating positionally unequal response of the pick-up or reproducing head | |

Control or modification of tonal gradation or of extreme levels |

Attention is drawn to the following places, which may be of interest for search:

Multipass inkjet |

This place covers:

Writing: multiple print elements, essentially LED or thermal printheads, but also using several lasers in parallel.

This place covers:

Mainly continuous tone laser printers.

This place covers:

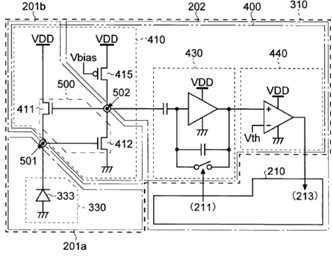

Control of light during reading of a document; circuits for driving diodes; analogue switches for light control. Also control of the sensor exposure time, etc.

This place does not cover:

Compensating positionally unequal response of the pick-up or reproducing head | |

Control or modification of tonal gradation or of extreme levels |

Attention is drawn to the following places, which may be of interest for search:

Mechanical details | |

Lamps per se |

This place covers:

Image segmentation, i.e. finding regions in a bitmap image, e.g. text, tables, photos, line images; also "mixed raster content" [MRC].

Attention is drawn to the following places, which may be of interest for search:

Segmentation; Edge detection in general | |

Character recognition, OCR |

This place covers:

Changing the resolution where the physical size is irrelevant, e.g. the original image is 600 dpi and the printer can only print at 300 dpi, so conversion is necessary.
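For the 600 dpi to 300 dpi case, the simplest conversion averages each 2 x 2 block of pixels. The Python sketch below generalises this to any integer factor for a grayscale image stored as a list of rows; block averaging is only one possible choice, and the function name is illustrative. A bilevel image reduced this way yields grey values that would still need halftoning before printing.

```python
def reduce_resolution(image, factor):
    """Downsample a grayscale image by an integer factor, averaging factor x factor blocks.

    Trailing rows/columns that do not fill a whole block are dropped.
    """
    h = len(image) - len(image) % factor
    w = len(image[0]) - len(image[0]) % factor
    out = []
    for by in range(0, h, factor):
        row = []
        for bx in range(0, w, factor):
            block = [image[y][x]
                     for y in range(by, by + factor)
                     for x in range(bx, bx + factor)]
            row.append(round(sum(block) / len(block)))
        out.append(row)
    return out


scan_600dpi = [[0, 0, 200, 200],
               [0, 0, 200, 200],
               [10, 30, 255, 255],
               [50, 70, 255, 255]]
print(reduce_resolution(scan_600dpi, 2))  # 300 dpi version
```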

This place does not cover:

With modification of image resolution, i.e. determining the values of picture elements at new relative positions |

Attention is drawn to the following places, which may be of interest for search:

Increasing or decreasing spatial resolution |

This place covers:

Descreening and/or rescreening.

Attention is drawn to the following places, which may be of interest for search:

Resolution enhancement by intelligently placing sub-pixels, when the focus is on write head control. | |

General edge enhancement |

This place covers:

Halftoning with more than the two levels 0 and 255, e.g. with levels 0, 127 and 255, i.e. multi-level halftoning. Typical documents: EP817464 (Seiko) shows two types of ink C1 and C2 (color multi-toning is in H04N 1/52); EP889639 (Xerox) shows levels of white, light gray, dark gray and black.
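A minimal sketch of multi-level halftoning by ordered dithering, assuming the output levels 0, 127 and 255 from the example above and a 2 x 2 Bayer threshold matrix: each pixel is placed between its two neighbouring output levels, and the dither threshold decides which of the two it takes. Ordered dithering is used here only for brevity; multi-level variants of error diffusion are equally common.

```python
BAYER_2X2 = [[0, 2],
             [3, 1]]


def multilevel_dither(image, levels=(0, 127, 255)):
    """Halftone an 8-bit grayscale image to the given ascending output levels."""
    out = []
    for y, row in enumerate(image):
        out_row = []
        for x, v in enumerate(row):
            t = (BAYER_2X2[y % 2][x % 2] + 0.5) / 4  # dither threshold in (0, 1)
            # Find the pair of adjacent output levels that brackets v.
            for lo, hi in zip(levels, levels[1:]):
                if v <= hi:
                    break
            frac = (v - lo) / (hi - lo)
            out_row.append(hi if frac > t else lo)
        out.append(out_row)
    return out


print(multilevel_dither([[191, 191], [191, 191]]))
```

A uniform value of 191 lies halfway between 127 and 255, so half of the pixels take each of those two levels.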

Attention is drawn to the following places, which may be of interest for search:

Variation of dot size | |

General bit depth reduction |

This place covers:

Very few applications. Local modifications, e.g. lightening and posterization of natural images.

This place covers:

Limited to image readers, mostly line sensors. Shading correction, illumination profile, head calibration, positionally varying noise etc. Also defects in the image sensors. Compensation of ambient illumination.
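Shading correction for a line sensor is often done per sensor element from a dark reference (light source off) and a white reference (a uniform white strip). The Python sketch below applies this standard flat-field formula to one scan line; the argument names, the clamping, and the choice to output 0 for an element whose white reading does not exceed its dark reading (e.g. a defective element) are assumptions for illustration.

```python
def shading_correct(raw_line, dark_line, white_line, white_target=255):
    """Flat-field correct one scan line, element by element."""
    out = []
    for raw, dark, white in zip(raw_line, dark_line, white_line):
        span = white - dark
        if span <= 0:
            out.append(0)  # hypothetical handling of a defective element
            continue
        # Scale so that the dark reference maps to 0 and the white reference to white_target.
        v = (raw - dark) * white_target / span
        out.append(max(0, min(white_target, round(v))))
    return out


print(shading_correct([55, 105], [5, 5], [255, 105]))
```

Here the second element receives much less light than the first (white reading 105 instead of 255), but the correction still maps its white-level reading to 255.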

This place does not cover:

Discrimination between the two tones in the picture signal of a two-tone original |

Attention is drawn to the following places, which may be of interest for search:

Ambient illumination also in | |

Control of light source | |

Correction of isolated defects in image | |

Defect maps for area sensors |

This place covers:

Printers: correction of misaligned or defective heads; head calibration.

Attention is drawn to the following places, which may be of interest for search:

Malfunctioning inkjet nozzles |

Attention is drawn to the following places, which may be of interest for search:

Shaping pulses by limiting or thresholding, in general |

This place covers:

Halftoning in general, either B&W only or each color layer separately. Examples are EP126782, EP673150.

This place covers:

Dispersed dots, i.e. dots that are not concentrated in clusters which spread out from a central point. Examples are US5317418 - e.g. Gaussian filter, blue noise; US5426122 - FM rasters.
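The classified examples use blue-noise and FM screens. A simpler, well-known dispersed-dot technique is ordered dithering with a Bayer matrix, sketched below in Python purely as an illustration; the choice of Bayer dithering and the function name are assumptions. The matrix orders the thresholds so that successively switched-on dots stay spread apart instead of growing as a cluster around a central point.

```python
# Standard 4x4 Bayer (dispersed-dot) index matrix.
BAYER_4X4 = [
    [0, 8, 2, 10],
    [12, 4, 14, 6],
    [3, 11, 1, 9],
    [15, 7, 13, 5],
]


def ordered_dither(img, matrix=BAYER_4X4):
    """Binarize an 8-bit grayscale image (list of rows) with a tiled threshold matrix."""
    n = len(matrix)
    levels = n * n
    out = []
    for y, row in enumerate(img):
        out_row = []
        for x, v in enumerate(row):
            # Threshold for this cell, placed in the middle of its 0-255 sub-interval.
            t = (matrix[y % n][x % n] + 0.5) * 256 / levels
            out_row.append(255 if v >= t else 0)
        out.append(out_row)
    return out
```

A mid-gray of 128 switches on 8 of the 16 cells in each tile, in a checkerboard-like pattern rather than one clustered dot.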

This place covers:

Error diffusion for halftoning. Note that error diffusion is also used for other purposes elsewhere in H04N 1/00. Examples are EP507354, EP808055.
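A minimal Python sketch of binary error diffusion using the classic Floyd-Steinberg weights is given below for illustration. The quantization error of each pixel is pushed onto neighbours that have not yet been processed, so the local average gray level is approximately preserved. The sketch assumes 8-bit grayscale input stored as a list of rows and a fixed threshold of 128. The function name is hypothetical.

```python
def floyd_steinberg(img):
    """Binary error diffusion with the Floyd-Steinberg weights 7/16, 3/16, 5/16, 1/16."""
    h, w = len(img), len(img[0])
    buf = [[float(v) for v in row] for row in img]  # working copy that accumulates error
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            old = buf[y][x]
            new = 255 if old >= 128 else 0
            out[y][x] = new
            err = old - new
            # Distribute the error to the right and to the row below;
            # error that would fall outside the image is discarded.
            if x + 1 < w:
                buf[y][x + 1] += err * 7 / 16
            if y + 1 < h:
                if x > 0:
                    buf[y + 1][x - 1] += err * 3 / 16
                buf[y + 1][x] += err * 5 / 16
                if x + 1 < w:
                    buf[y + 1][x + 1] += err * 1 / 16
    return out
```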

This place covers:

Illustrative examples of subject matter classified in this group are WO8906080, EP715451.

This place covers:

Halftone dots grow from a central point and spread in all directions. Also dispersed clusters. Illustrative examples are US3688033, EP651560.

This place covers:

Growth of the halftone dot in one direction only; includes pulse width modulation (PWM). Illustrative examples are EP212990, US4951152.
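To illustrate one-directional dot growth, the Python sketch below converts an 8-bit gray value into a pulse-width-modulated on/off pattern within one pixel period. The pulse grows along the scan direction only. The number of sub-pixel slots and the alignment options are hypothetical parameters chosen for illustration, not taken from the cited documents.

```python
def pwm_pulse(value, subdivisions=8, align="left"):
    """Return the on/off pattern of one pixel period split into `subdivisions` slots.

    The pulse width is proportional to the 8-bit gray value; `align` selects
    whether the pulse grows from the start, the end or the centre of the period.
    """
    on = round(value * subdivisions / 255)
    if align == "left":
        start = 0
    elif align == "right":
        start = subdivisions - on
    elif align == "center":
        start = (subdivisions - on) // 2
    else:
        raise ValueError(f"unknown alignment: {align}")
    return [1 if start <= i < start + on else 0 for i in range(subdivisions)]
```

Alternating left and right alignment in neighbouring pixels is one way to merge adjacent pulses into larger lines. Whether a given device does this is an assumption here.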

This place covers:

Different dot sizes, where each dot has the same density. Illustrative examples are EP647059 (fig.5), US4680645 (fig.1).

For dots of different densities (inks) classify in H04N 1/40087.

This place covers:

Illustrative examples are GB2026283, WO9307709.

This place does not cover:

Pattern varying in one dimension only |

This place covers:

Selection of particular gamma correction table, correction depending on media scanned or printed on, film type correction, correction of tone scale for dot gain.
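The selection of a gamma correction table can be sketched in Python as follows, for illustration only. A 256-entry look-up table is built from a gamma value chosen per medium and then applied to every pixel. The medium names and gamma values in `MEDIA_GAMMA` are hypothetical placeholders. Real tables would come from characterizing the scanner or printer.

```python
# Hypothetical gamma values per medium; real values come from device characterization.
MEDIA_GAMMA = {"plain_paper": 1.8, "glossy_paper": 2.2}


def gamma_lut(gamma):
    """256-entry table mapping an 8-bit input to an 8-bit gamma-corrected output."""
    return [round(255 * (i / 255) ** (1 / gamma)) for i in range(256)]


def correct_for_media(img, media):
    """Apply the gamma table selected for `media` to an 8-bit grayscale image."""
    lut = gamma_lut(MEDIA_GAMMA[media])  # select the table for the medium
    return [[lut[v] for v in row] for row in img]
```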

Similar to H04N 1/6027 for colour.

This place covers:

Analysis of image content to determine final correction to be applied, e.g. automatic background deletion.

Attention is drawn to the following places, which may be of interest for search:

Conversion to binary |

This place covers:

Histogram analysis to determine tone correction parameters.
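For illustration, the Python sketch below derives black and white points from a histogram and then stretches the tone scale linearly between them. The `clip` fraction, which ignores a small share of outlier pixels at each end, and both function names are assumptions for this example.

```python
def levels_from_histogram(pixels, clip=0.01):
    """Return (lo, hi) gray levels after ignoring `clip` of the pixels at each end."""
    hist = [0] * 256
    for v in pixels:
        hist[v] += 1
    cut = clip * len(pixels)
    lo, hi = 0, 255
    acc = 0
    for i in range(256):  # first level where the cumulative count exceeds the cut
        acc += hist[i]
        if acc > cut:
            lo = i
            break
    acc = 0
    for i in range(255, -1, -1):  # same from the bright end
        acc += hist[i]
        if acc > cut:
            hi = i
            break
    return lo, hi


def stretch(v, lo, hi):
    """Linear tone correction mapping lo -> 0 and hi -> 255, clipped to 0..255."""
    if hi <= lo:
        return v
    return max(0, min(255, round((v - lo) * 255 / (hi - lo))))
```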

Attention is drawn to the following places, which may be of interest for search:

In context of pure image processing |

This place covers:

Pre-scanning to read black and white reference strips, which are used to set the maximum and minimum levels. Very limited test patterns containing only black (offset correction) and white (gain correction), e.g. printed next to an image or as a separate image. Standard pattern on a monitor (light off for the black reference and light on for the white reference).
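The offset (black) and gain (white) correction described above can be sketched per pixel in Python, for illustration only. The sketch assumes that one black reference line and one white reference line have already been read from the reference strips for a line sensor. The function name is hypothetical.

```python
def calibrate(raw_line, black_ref, white_ref):
    """Per-pixel offset (black) and gain (white) correction for one scan line.

    Each output is (raw - black) / (white - black) scaled to 0..255.
    """
    out = []
    for v, b, w in zip(raw_line, black_ref, white_ref):
        span = max(w - b, 1)  # guard against a defective element where white <= black
        out.append(max(0, min(255, round((v - b) * 255 / span))))
    return out
```

Two sensor elements with different offsets and sensitivities give the same corrected value when they see the same original density. This is the basis of shading correction in image readers.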

Attention is drawn to the following places, which may be of interest for search:

Monitor calibration per se |

This place covers:

Test pattern analysis for gray scale corrections.

Attention is drawn to the following places, which may be of interest for search:

For colour |

This place covers:

Noise or error correction. Elimination of "streaky effects".

Attention is drawn to the following places, which may be of interest for search:

Scanning correction due to reader error | |

Image enhancement or restoration | |

Noise filtering in arrangements for image or video recognition or understanding |

This place covers:

Fairly self-explanatory. Also covers edge detection followed by correction. Edge emphasis, sharpness correction, unsharp masking, smoothing.
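Unsharp masking can be sketched in Python as follows, for illustration only. A blurred copy of the image is subtracted from the original, and the difference is added back with a weighting factor. The 3×3 box blur, the `amount` parameter and the edge-replication border handling are assumptions chosen for brevity.

```python
def unsharp_mask(img, amount=1.0):
    """Sharpen an 8-bit grayscale image: out = img + amount * (img - blur(img))."""
    h, w = len(img), len(img[0])

    def px(y, x):
        # Clamp coordinates so the border pixels are replicated.
        return img[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    out = []
    for y in range(h):
        row = []
        for x in range(w):
            blur = sum(px(y + dy, x + dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)) / 9
            sharp = img[y][x] + amount * (img[y][x] - blur)
            row.append(max(0, min(255, round(sharp))))
        out.append(row)
    return out
```

On a step edge such as `[[50, 50, 200, 200]]` the pixels next to the edge overshoot (to 0 and 250 here), which increases perceived sharpness. Flat areas are left unchanged.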

Attention is drawn to the following places, which may be of interest for search:

For color | |

For cameras | |

Deblurring; Sharpening |

In patent documents, the following words/expressions are often used as synonyms:

- "show-through" and "see-through"

This place covers:

Removal of streaks, dust, blemishes, tears, scratches, hairs. Removing scratches from photographs using an infrared image.

This place covers:

General coding groups for still images: B&W, gray scale or each color component separately. This head group covers using different coding techniques within the same document, combining different techniques, or choosing from different available coding methods (e.g. characters with technique 1, pictures with technique 2).

This place does not cover:

Bandwidth or redundancy reduction by scanning | |

Television systems for the transmission of television signals using bandwidth reduction | |

For mixed image compression |

Attention is drawn to the following places, which may be of interest for search:

Coding of color images | |

Bandwidth or redundancy reduction for data acquisition | |

Coding for image data processing in general | |

Data Compression in general |

This place covers:

The image to be coded must be a halftone image.

This place covers:

B&W images, i.e. binary coding.

Attention is drawn to the following places, which may be of interest for search:

Continuous tone compression |

This place covers:

E.g. Huffman coding.

This place covers:

Lossless coding using a variety of coding methods, e.g. comparing different codings of a line and choosing the shortest code; universal coding.

This place covers:

Block coding, also mix of Huffman and run-length coding.

This place covers:

Predictive coding, arithmetic coding.

This place covers:

Different resolutions of the image, wavelet coding for binary images.

This place covers:

Differential coding, i.e. coding the change data between two lines.
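A simplified Python illustration of coding the change between two lines is shown below. Each binary line (0 = white, 1 = black) is XORed with the previous line, so unchanged pixels become 0 and compress well afterwards. This XOR form is an assumption chosen for clarity. Schemes such as the two-dimensional fax codes instead code the positions of changing elements relative to the reference line. The function names are hypothetical.

```python
def line_diff_encode(lines):
    """Replace each binary line by its XOR with the previous line (first vs all-white)."""
    prev = [0] * len(lines[0])  # imaginary all-white reference line
    out = []
    for line in lines:
        out.append([a ^ b for a, b in zip(line, prev)])
        prev = line
    return out


def line_diff_decode(diffs):
    """Invert line_diff_encode by XORing each difference with the rebuilt previous line."""
    prev = [0] * len(diffs[0])
    out = []
    for d in diffs:
        line = [a ^ b for a, b in zip(d, prev)]
        out.append(line)
        prev = line
    return out
```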

This place covers:

Templates: only the changed data are encoded, i.e. the difference from the image when the template is known, e.g. scanned images of filled-out form sheets.

Attention is drawn to the following places, which may be of interest for search:

Color form drop-out |

This place covers:

B&W run-length encoding.
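B&W run-length encoding can be sketched in Python as follows, for illustration only. Each binary line (0 = white, 1 = black) is described by alternating run lengths. The line always starts with a white run, which may have length 0, as in Modified Huffman fax coding. The subsequent entropy coding of the run lengths is omitted, and the function names are hypothetical.

```python
def rle_encode(line):
    """Alternating white/black run lengths of a binary line, starting with white."""
    runs = []
    color, count = 0, 0
    for px in line:
        if px == color:
            count += 1
        else:
            runs.append(count)  # close the current run (possibly of length 0)
            color, count = px, 1
    runs.append(count)
    return runs


def rle_decode(runs):
    """Rebuild the binary line from alternating run lengths, starting with white."""
    line, color = [], 0
    for n in runs:
        line.extend([color] * n)
        color ^= 1
    return line
```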

This place does not cover:

Baseband signal showing more than two values or a continuously varying baseband signal is transmitted or recorded | |

Systems or arrangements allowing the picture to be reproduced without loss or modification of picture-information using predictive or differential encoding |

This place does not cover:

Circuits or arrangements for control or supervision between transmitter and receiver |

Attention is drawn to the following places, which may be of interest for search:

Television systems for two-way working | |

Selective content distribution, e.g. interactive television |

This place does not cover:

Preventing unauthorised reproduction |

Attention is drawn to the following places, which may be of interest for search:

Analogue secrecy television systems | |

Security arrangements for protecting computers or computer systems against unauthorised activity | |

Secret communication in general | |

Arrangements for secret or secure communication in transmission of digital information |

Attention is drawn to the following places, which may be of interest for search:

Restricting access to computer systems | |

Access-control involving the use of a pass | |

Verifying the identity or authority of a user of a system for the transmission of digital information | |

Protecting transmitted digital information from access by third parties | |

Access control in transmission of digital information |

Attention is drawn to the following places, which may be of interest for search:

Systems rendering a television signal unintelligible and subsequently intelligible | |

Ciphering or deciphering apparatus for cryptographic purposes | |

Secret communication by adding a second signal to make the desired signal unintelligible |

Attention is drawn to the following places, which may be of interest for search:

Arrangements for secret or secure communication using public key encryption algorithm |

This place covers:

Colour edit systems, printers with different recording modes for color and monochrome, decision as to print/scan color or B&W, general color applications for fax. Very general group.

Attention is drawn to the following places, which may be of interest for search:

Colorimetry |

This place covers:

Very straightforward, conversion into color documents, e.g. pattern chart to color (opposite to H04N 1/40012). Generating false color representations.

This place covers:

Filter wheels to separate components.

This place covers:

The use of different lights to read the image, e.g. first R, then G, finally B, e.g. successive RGB LED lighting.

This place covers:

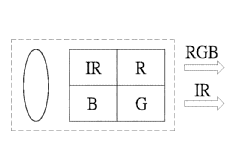

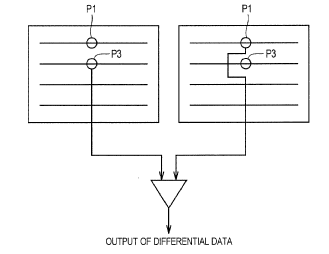

Using separate R, G and B sensor elements, typically line sensors. Also includes documents on correcting chromatic aberrations of 3-line CCD sensors, and on RGB sensors with an additional monochrome sensor.

Attention is drawn to the following places, which may be of interest for search:

For area sensors (Bayer matrix) | |

Demosaicing |

This place covers:

Splitting light using prisms, half (dichroic) mirrors, diffraction grating - most applications deal with line sensors.

This place covers:

Dot by dot printing, point-wise scanning, essentially either inkjet or laser beam printer.

Attention is drawn to the following places, which may be of interest for search:

More details on inkjets |

This place covers:

Line-by-line printing.

Attention is drawn to the following places, which may be of interest for search:

Alignment of dots |

This place covers:

Picture-by-picture printing, i.e. one complete color separation after the other. Focus on image signal circuits, e.g. start-of-scan determination, sync marks on the print medium, and misregistration correction, i.e. correcting misalignment of individual print heads with respect to each other. Facet or face-to-face errors. This is the typical way color laser printers work: one latent image is generated after the other, and each is developed and transferred in turn.

Attention is drawn to the following places, which may be of interest for search:

Trapping is also used against misregistration, but is an image modification | |

Temperature | |

Purely mechanical corrections | G03G 15/01 |

This place covers:

Using one drum for more than one color, thermal transfer printers.

This place covers:

Colour halftoning, colour multi-toning e.g. with use of more than one cyan (C1 and C2), screens, error diffusion.

H04N 1/40087 or some subgroup of H04N 1/405 may be applied additionally to H04N 1/52.

This place covers:

Printing with additional colours, e.g. using orange and brown pigments additionally or white or gold, CMYKRGB printers.

This place covers:

General color image processing, color to 2-color conversion (e.g. RGB to black/red). Film type, document type, slide type, text+image, detection of mouse marker.

This place does not cover:

Circuits or arrangements for halftone screening |

This place covers:

Self-explanatory regarding noise and edge. A substantial part of this subgroup deals with trapping (spreading and choking image objects), either on bitmap or on page description language (PDL) level.

This place does not cover:

Retouching, i.e. modification of isolated colours only or in isolated picture areas only |

Attention is drawn to the following places, which may be of interest for search:

For integration of trapping in PDL workflow |

This place covers:

All kinds of color correction. Estimating spectrum from XYZ input.

This place does not cover:

Conversion of colour picture signals to a plurality of signals some of which represent particular mixed colours |

This place covers:

Color corrections involving representation of the image on monitor, e.g. for interactive correction or for use as soft proofer.

This place does not cover:

Matching printer and monitor for softproofing per se | |

With simulation on a subsidiary picture reproducer |

This place covers:

Fairly self-explanatory; typically the user selects one of several simulated, corrected images.

This place covers:

Usually transformations from RGB to CMY, but also used generally for transformations to output device values, insofar as the focus is on the transformation itself. The transformations in this group use (matrix) equations.

This place covers:

Look-up tables for color conversion, typically to CMY. Also interpolation methods to calculate the in-between values not stored in the tables, e.g. tetrahedral or cubic interpolation.
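Tetrahedral interpolation in a 3D look-up table can be sketched in Python as follows, for illustration only. The cube around the input point is split into six tetrahedra. Visiting the axes in order of decreasing fractional position selects the tetrahedron and walks from its (0,0,0) corner to its (1,1,1) corner. For simplicity the sketch assumes a single output channel per node (e.g. only C) and inputs in [0, 1]; a practical table would hold one tuple of output values per node. The function name is hypothetical.

```python
def tetrahedral_interp(lut, rgb):
    """Interpolate a 3D look-up table at rgb (components in [0, 1]).

    lut[i][j][k] holds a single float output at grid node (i, j, k) / (n - 1),
    with n >= 2 nodes per axis.
    """
    n = len(lut)
    base, frac = [], []
    for v in rgb:
        x = v * (n - 1)
        i = min(int(x), n - 2)  # lower node of the cell; v == 1.0 falls into the last cell
        base.append(i)
        frac.append(x - i)
    # Axes sorted by decreasing fractional part define the path through one tetrahedron.
    order = sorted(range(3), key=lambda a: frac[a], reverse=True)
    node = list(base)
    value = lut[node[0]][node[1]][node[2]]
    for axis in order:
        prev = lut[node[0]][node[1]][node[2]]
        node[axis] += 1
        value += frac[axis] * (lut[node[0]][node[1]][node[2]] - prev)
    return value
```

Tetrahedral interpolation reproduces any linear function of r, g and b exactly, which provides a simple sanity check. It also needs only four table nodes per lookup, against eight for trilinear interpolation.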

This place does not cover:

Generating a fourth subtractive colour signal using look-up tables |

This place covers:

Essentially the transformations to CMYK which involve use of equations. Gray component replacement (GCR), undercolor removal (UCR).
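A deliberately naive equation-based conversion to CMYK with gray component replacement is sketched below in Python, for illustration only. CMY is taken as the complement of RGB. A fraction `gcr` of the common gray component min(C, M, Y) is then moved into K and removed from C, M and Y. Using one fraction for the whole gamut, with no device characterization, is a simplifying assumption; UCR variants typically restrict the removal to near-neutral colours. The function name is hypothetical.

```python
def rgb_to_cmyk(r, g, b, gcr=1.0):
    """Naive RGB -> CMYK with gray component replacement.

    r, g, b are in [0, 1]; gcr in [0, 1] is the fraction of the gray component
    min(C, M, Y) that is replaced by black ink.
    """
    c, m, y = 1 - r, 1 - g, 1 - b  # simple complement transformation to CMY
    k = gcr * min(c, m, y)         # gray component moved to K
    return c - k, m - k, y - k, k  # remove the same amount from each chromatic ink
```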

This place covers:

Four-colour look-up tables, also their interpolation.

This place covers:

General control and correction of tone reproduction curves. Gray balance, white balance as result thereof. Aspects of saturation correction.

This place does not cover:

Reduction of colour to a range of reproducible colours |

Attention is drawn to the following places, which may be of interest for search:

When focus is on white balance per se. | |

White balance in cameras |

This place covers:

Device profiles, e.g. ICC profiles, profile management for several devices, profile editing.

This place covers:

Printer or scanner calibration using color test patterns.

This place does not cover:

Matching two or more picture signal generators or two or more picture reproducers using test pattern analysis |

Attention is drawn to the following places, which may be of interest for search:

For B&W | |

Camera calibration | |

Color charts as such | G01J 3/52 |

In electrophotography |

This place covers:

Specifically matching two (or more) devices to each other, e.g. for proofing, i.e. printer to printer or printer to monitor.

This place covers:

Limited to the two device scenario.

This place covers:

Gamut mapping and gamut conversion. Mainly within a device-independent space in order to map the color reproducibility of one device onto that of another device.

Attention is drawn to the following places, which may be of interest for search:

In relation to general image processing and computer graphics |

This place covers:

Corrections to an image which depend on the type of image object, i.e. different corrections within one page, e.g. text and pictures corrected differently.

Attention is drawn to the following places, which may be of interest for search:

Discrimination of image (object) types per se - (B&W), | |

Discrimination of image (object) types per se - (colour). |

This place covers:

Only hue changes, not luminance or chroma or saturation.
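A hue-only change can be illustrated in Python by rotating the chroma vector of a luma/chroma representation about the neutral axis. Luma and chroma magnitude are then unchanged, and only the hue angle moves. Using YCbCr with a neutral point at 128 is an assumption for this sketch; CIELAB/LCh is another common choice. The function name is hypothetical.

```python
import math


def rotate_hue(y, cb, cr, degrees):
    """Rotate the chroma vector (Cb, Cr) about the neutral point (128, 128)."""
    a = math.radians(degrees)
    u, v = cb - 128, cr - 128  # chroma relative to neutral gray
    u2 = u * math.cos(a) - v * math.sin(a)
    v2 = u * math.sin(a) + v * math.cos(a)
    return y, 128 + u2, 128 + v2  # Y and the chroma magnitude stay the same
```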

This place does not cover:

Saturation correction |

This place covers:

Correction of e.g. color fog or blue shift in image.

H04N 1/6027 has precedence.

This place covers:

E.g. histogram techniques in L*a*b* color space.

This place covers:

Environmental factors.

This place covers:

E.g. correction for sunlight on a monitor, artificial lighting, flare.

This place covers:

Different film types have different properties and therefore need different corrections. For newspaper, correction for yellowing is necessary.

This place covers:

Correction limited to particular colors, e.g. the red of a red apple is selected and enhanced. Changing color information in a region.

Attention is drawn to the following places, which may be of interest for search:

For skin color |

This place covers:

With display of image on monitor for user selection and editing.

This place covers:

Colour coding closely related to apparatus.

Attention is drawn to the following places, which may be of interest for search:

Compression of B&W | |

Compression per se |

This place does not cover:

For different coding for different image types, but limited to B&W | |

Similar but for colour correction and not coding |

This place covers:

Palettized colors, including methods of obtaining the palette and their coding. Rounding, change from true color to 8-bit using a palette.
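Mapping true-colour pixels to palette indices can be sketched in Python as follows, for illustration only. Each pixel is replaced by the index of the nearest palette entry, so an 8-bit index per pixel plus the palette replaces 24-bit colour. Squared Euclidean distance in RGB and a fixed, given palette are assumptions. Building the palette itself (e.g. by clustering) is not shown, and the function names are hypothetical.

```python
def nearest_palette_index(rgb, palette):
    """Index of the palette entry closest to rgb in squared Euclidean RGB distance."""
    return min(range(len(palette)),
               key=lambda i: sum((a - b) ** 2 for a, b in zip(rgb, palette[i])))


def palettize(pixels, palette):
    """Replace each (r, g, b) pixel by the index of its nearest palette colour."""
    return [nearest_palette_index(p, palette) for p in pixels]
```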

This place covers:

Limited to e.g. YUV, Lab, etc.
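As an illustration of coding in a luminance/chrominance space, the Python sketch below converts 8-bit RGB to full-range YCbCr using the BT.601 coefficients, as used in JFIF/JPEG. Choosing this particular variant is an assumption; other standards use different coefficients and offsets. The function name is hypothetical.

```python
def rgb_to_ycbcr(r, g, b):
    """Full-range BT.601 (JFIF-style) conversion of 8-bit RGB to 8-bit YCbCr."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return tuple(max(0, min(255, round(c))) for c in (y, cb, cr))
```

Because the eye is less sensitive to chroma detail, Cb and Cr are often subsampled or quantized more coarsely than Y before coding.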

This place does not cover:

Adapting to different types of images, e.g. characters, graphs, black and white image portions | |

Using a reduced set of representative colours, e.g. each representing a particular range in a colour space |

This place covers:

Limited to CMY or RGB, raw sensor data.

This place does not cover:

Adapting to different types of images, e.g. characters, graphs, black and white image portions | |

Transmitting or storing colour television type signals |

Attention is drawn to the following places, which may be of interest for search:

Demosaicing |

This place covers:

- Scanning arrangements using moving aperture, refractor, reflector or lens

- Scanning arrangements using switched light sources, solid-state devices or cathode-ray tubes by deflecting electron beams

- Scanning arrangements for motion picture films

This place does not cover:

Scanning of motion picture films |

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Picture signal generators using optical-mechanical scanning means only, for colour television systems |

Attention is drawn to the following places, which may be of interest for search:

Scanning systems using movable or deformable optical elements for controlling the intensity, colour, phase, polarisation or direction of light |

This place does not cover:

Scanning of motion picture films |

Attention is drawn to the following places, which may be of interest for search:

Devices or arrangements for the control of the direction of light arriving from an independent light source |

This place covers:

Scanning details of electrically scanned solid-state picture reproducers.

This place does not cover:

Transforming light or analogous information into electric information using solid-state image sensors |

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Picture reproducers using solid-state colour display devices |

This place covers:

Deflection circuits for cathode-ray tubes, when specially adapted for television

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Picture reproducers, specially adapted for colour television systems, using cathode-ray tubes |

Attention is drawn to the following places, which may be of interest for search:

Cathode ray oscillographs | |

Deflection circuits, of interest only in connection with cathode-ray tube indicators | |

Control arrangements or circuits using single beam cathode-ray tubes, the beam directly tracing characters, the information to be displayed controlling the deflection as a function of time in two spatial coordinates | |

Electric discharge tubes or discharge lamps | |

Linearisation of ramp of a sawtooth shape pulse |

Attention is drawn to the following places, which may be of interest for search:

Regulation of dc voltage in general |

This place does not cover:

Circuits for controlling dimensions of picture on screen by maintaining the cathode-ray tube high voltage constant |

Attention is drawn to the following places, which may be of interest for search:

Control arrangements or circuits using single beam cathode-ray tubes, the beam tracing a pattern independent of the information to be displayed, this latter determining the parts of the pattern rendered respectively visible and invisible |

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Arrangements for convergence or focusing in cathode-ray tubes specially adapted for colour television systems |

Attention is drawn to the following places, which may be of interest for search:

Focussing circuits in general |

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Scanning of colour motion picture films, e.g. for telecine |

Attention is drawn to the following places, which may be of interest for search:

Picture signal generating by scanning motion picture films or slide opaques, e.g. for telecine |

This place covers:

Hardware-related or software-related aspects of television signal processing at the transmitter side or the receiver side

- H04N 5/00 features transmitter techniques specially adapted to analog transmission of television signals. The corresponding places for generic transmission are found in H04N 21/00 and in subclasses H04B, H04H, H04L and H04W. These concern servers, broadcast or multicast, home networks, and wireless networks per se.

- H04N 5/00 features receiver techniques specially adapted to the reception of analog television signals. The corresponding place for digital television receivers is H04N 21/00.

This place does not cover:

Scanning details of television systems; Combination thereof with generation of supply voltages |

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Details of colour television systems | |

Wall TV displays | |

Details of stereoscopic television systems | |

Servers specifically adapted for the selective distribution of content | |

Client devices specifically adapted for the reception of, or interaction with, content in selective content distribution |

Attention is drawn to the following places, which may be of interest for search:

Selective content distribution | |

Constructional details related to the housing of computer displays | |

Constructional details or arrangements for portable computers | |

Power management in computer systems | |

Image enhancement or restoration | |

Image analysis | |

Control arrangements or circuits, of interest only in connection with visual indicators other than cathode-ray tubes | |

Control arrangements or circuits for visual indicators common to cathode-ray tube indicators and other visual indicators | |

Diversity receivers | |

Broadcast synchronizing | |

Broadcast receivers | |

Synchronizing in TDMA | |

Receiver synchronizing | |

Home automation networks |

H04N 5/00 features a number of symbols corresponding to the same number of Indexing Codes (e.g., H04N 5/4448 as a symbol and H04N 5/4448 as an Indexing Code symbol).

Allocation of symbols and/or Indexing Code symbols:

- A document containing invention information relating to details of television elements will be given a H04N 5/00 group.

- A document containing additional information relating to details of television elements will be given a H04N 5/00 group.

- A document merely mentioning further details of television elements will not be given a group, but it may receive an Indexing Code if the disclosure is considered relevant, e.g. when conversion of interlace to progressive scanning (H04N 7/012) involves motion estimation, H04N 5/145 is added.

In this place, the following terms or expressions are used with the meaning indicated:

Edging | detection of edges |

Movement estimation | motion vector generation |

KTC | Thermal noise on capacitor |

In patent documents, the following abbreviations are often used:

GPS | global positioning system |

PC | personal computer |

STB | set top box |

This place does not cover:

Synchronising systems used in the transmission of pulse code modulated video signals with other pulse code modulated signal |

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Colour synchronization | |

Synchronisation processes at server side for selective content distribution |

Attention is drawn to the following places, which may be of interest for search:

Synchronisation between a display unit and other units, e.g. other display units, video-disc players | |

Synchronisation of pulses having essentially a finite slope or stepped portions | |

Synchronisation of generators of electronic oscillations or pulses | |

Arrangements for synchronising receiver with transmitter in the transmission of digital information |

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Circuits for reinsertion of dc and slowly varying components of colour signals |

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Circuits for controlling the amplitude of colour signals |

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Suppression of noise in television signal recording |

This place covers:

Circuitry, devices and other equipment specially adapted to be used in television studio, e.g. for mixing images or generation of special effects.

This place does not cover:

Cameras or camera modules comprising electronic image sensors; Control thereof |

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Studio equipment related to broadcast communication |

Attention is drawn to the following places, which may be of interest for search:

Buildings for studios for broadcasting, cinematography, television or similar purposes |

This place covers:

Picture signal generation by scanning motion picture films, i.e. converting cinematographic films into video signals, e.g. telecine.

This place does not cover:

Scanning details therefor | |

Standards conversion therefor |

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Scanning of colour motion picture films, e.g. for telecine |

This place covers:

Obsolete technology.

This place does not cover:

Picture signal generating by scanning motion picture films or slide opaques |

This place covers:

Studio circuits providing video special effects like combining different images, changing image aspect (geometric, orientation, etc.) or aesthetic/artistic aspect, providing transitions between images, background and foreground images synthesizing, mixing and switching.

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Arrangements for broadcast or for distribution combined with broadcast |

This place covers:

Mobile studios, e.g. television studio equipment installed in vehicles for outdoor broadcasting.

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Broadcast communication aspects of mobile studios |

This place covers:

Circuitry (electronic circuits) and driving.

This place does not cover:

Scanning details of television systems | |

Cameras or camera modules comprising electronic image sensors or control thereof | |

Circuitry of solid-state image sensors [SSIS]; Control thereof |

This place covers:

X-ray imaging systems that directly or indirectly detect incident X-ray photons.

This place does not cover:

Cameras or camera modules for generating image signals from X-rays | |

Circuitry of solid-state image sensors [SSIS] for transforming X-rays into image signals |

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Apparatus for radiation diagnosis, e.g. combined with radiation therapy equipment | |

Measuring length, thickness or similar linear dimensions, angles, areas or irregularities of surfaces or contours, using X-rays | |

Investigating or analysing materials by the use of wave or particle radiation, e.g. X-rays, by transmitting the radiation through the material and forming a picture |

Attention is drawn to the following places, which may be of interest for search:

Measurement performed on radiation beams, e.g. position or section of the beam; Measurement of spatial distribution of radiation | |

Photographic processes for X-rays, infrared or ultraviolet light | |

Electrographic processes using X-rays, e.g. electroradiography | |

Apparatus for electrographic processes using X-rays, e.g. electroradiography |

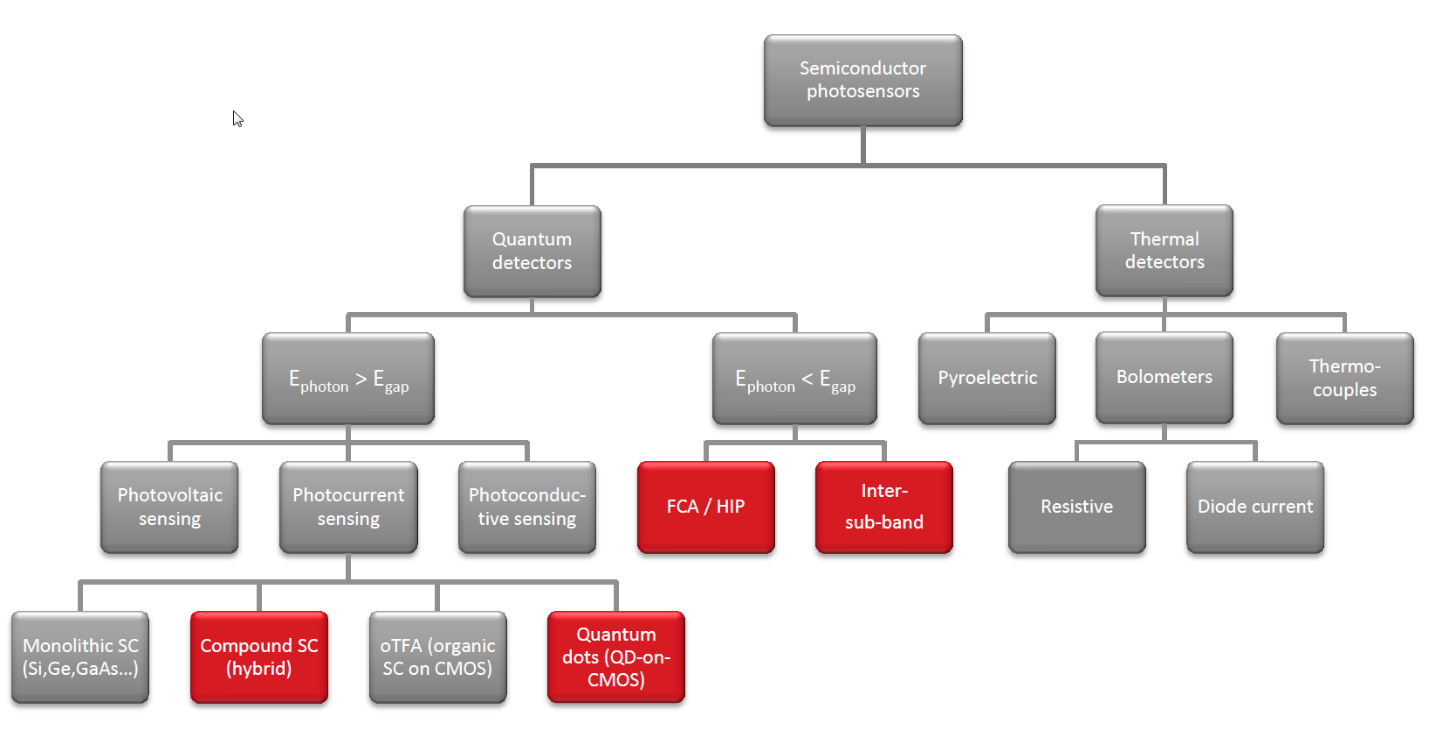

This place covers:

Image sensors other than solid state image sensors and control thereof for near and far infrared [IR] cameras, for example pyroelectric imaging tubes or image intensifier tubes.

This place does not cover:

Cameras or camera modules for generating image signals from infrared radiation | |

Circuitry of solid-state image sensors [SSIS] for transforming only infrared radiation into image signals |

Attention is drawn to the following places, which may be of interest for search:

Radiation pyrometry, e.g. infrared or optical thermometry | |

Investigating or analysing materials using infrared light | |

Photographic processes for X-ray, infrared or ultraviolet ray | |

Organic devices sensitive to infrared radiation | |

Thermoelectric devices comprising a junction of dissimilar materials | |

Thermoelectric devices without a junction of dissimilar materials |

This place does not cover:

Picture signal circuitry for video frequency region |

Attention is drawn to the following places, which may be of interest for search:

Characteristics or internal components of servers | |

Transmitter circuits per se |

Attention is drawn to the following places, which may be of interest for search:

Digital communication modulator circuits | |

Modulation per se |

This place does not cover:

Picture signal circuitry for video frequency region |

Attention is drawn to the following places, which may be of interest for search:

Characteristics or internal components of clients | |

Receiver circuits per se |

Attention is drawn to the following places, which may be of interest for search:

Characteristics of or internal components of the client for processing graphics | |

Generation of individual character patterns for visual indicators | |

Graphics pattern generators for visual indicators |

Attention is drawn to the following places, which may be of interest for search:

Displaying supplemental content in a region of the screen |

Attention is drawn to the following places, which may be of interest for search:

Decoding digital information by demodulating in clients | |

Digital communication demodulator circuits | |

Demodulation per se |

Attention is drawn to the following places, which may be of interest for search:

Accessing communication channels in clients, tuning | |

Tuning resonant circuits per se | |

Automatic frequency control per se |

Attention is drawn to the following places, which may be of interest for search:

Muting the audio signal in clients |

Attention is drawn to the following places, which may be of interest for search:

Invisible or silent tuning | |

Processing of audio elementary streams in clients |

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Generation of supply voltages, in combination with electron beam deflecting |

Attention is drawn to the following places, which may be of interest for search:

Power management in clients | |

Regulating of voltage or current in general | |

Transformers | |

Supplying or distributing electric power, in general | |

Static converters |

This place does not cover:

Furniture aspects of television cabinets |

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Combinations of a television receiver with apparatus having a different main function |

This place does not cover:

Scanning details of television systems |

This place covers:

Circuit details of cathode-ray display tubes pertaining to the conversion of electrical information into light information, when specially adapted for television displays.

This place does not cover:

Scanning in television systems by deflecting electron beam in cathode-ray tube |

This place covers:

Circuit details of the conversion of electric information into light information in electroluminescent television displays.

Attention is drawn to the following places, which may be of interest for search:

Control arrangements or circuits using electroluminescent elements for presentation of a single character by selection from a plurality of characters, or by composing the character by combination of individual elements | |

Control arrangements or circuits using electroluminescent panels for presentation of an assembly of a number of characters, by composing the assembly by combination of individual elements arranged in a matrix |

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Projection devices for colour picture display |

This place covers:

Video data recording:

- Specially adapted recording devices such as a VCR, PVR, high speed camera, camcorder or a specially adapted PC

- Interfaces between recording devices and other devices for input and/or output of video signals such as TVs, video cameras, other recording devices

- Video recorder programming

- Adaptations of the video signal for recording on specific recording media such as HDD, tape, drums, holographic support, semiconductor memories

- Adaptations for reproducing at a rate different from the recording rate such as trick play modes and stroboscopic recording

- Processing of the video signal for noise suppression, scrambling, field or frame skip, bandwidth reduction

- Impairing the picking up, for recording, of a projected video signal

- Regeneration of either a recorded video signal or a video signal for recording

- Video signal recording wherein the recorded video signal may be accompanied by zero, one or more other video signals (e.g. stereoscopic signals or video signals corresponding to different story lines)

- Production of a motion picture film from a television signal

Details specific to this group:

- The recording equipment is for personal use and not for studio use

- The subgroups H04N 5/92, H04N 5/93, H04N 5/94 and H04N 5/95 are for black and white (monochrome) video signals only, while the remaining subgroups H04N 5/7605, H04N 5/765, H04N 5/78, H04N 5/80, H04N 5/84, H04N 5/89, H04N 5/903, H04N 5/907 and H04N 5/91 are for both black and white and colour video signals

- The subject matter in the range H04N 5/92 - H04N 5/956 deals with recording and processing for recording of black and white video signals only, while H04N 9/79 - H04N 9/898 deals with recording and processing for recording of colour video signals.

- H04N 5/76 (video recording) distinguishes itself from editing, which is found in G11B 27/00, in that the signals recorded and reproduced are video signals.

- H04N 5/76 is a function place for recording or processing for recording. H04N 21/433 describes applications for recording in a distribution system.

- H04N 5/76 features recording devices specially adapted to video data recording that can be programmed. The programming may be done by a user or by an algorithm. Business methods where the video recording feature or step is well known are generally classified in G06Q 30/02.

- H04N 5/76 contains video cameras that record video data to a recording medium. Constructional details of video cameras are found in H04N 23/00.

- H04N 5/76 is an application place for video data trick play. Reproducing data in general at a rate different from the recording rate is found in G11B 27/005.

- H04N 5/76 contains applications of video data processing for scrambling/encrypting video data for recording. Systems for rendering a video signal unintelligible are found in H04N 7/16 and H04N 21/00.

- H04N 5/76 is an application place for video data reduction for recording. Video data compression is found in H04N 19/00.

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Video surveillance | |

Selective content distribution | |

Controlling video cameras | |

Alarm system using video cameras |

Attention is drawn to the following places, which may be of interest for search:

Production of a video signal from a motion picture film | |

Interfaces | |

Video data coding | |

Network video distribution | |

Personal video recorder in selective content distribution systems | |

User interface of set top boxes | |

Video camera constructional details | |

Video data processing for printing | |

Systems for buying and selling, e.g. video content | |

Business methods related to the distribution of video data content | |

Information storage based on relative movement between record carrier and transducer | |

Video editing | |

Recording techniques specially adapted to a recording medium for recording digital data in general | |

Control of video recorders where the video signal is not substantially involved | |

Static stores |

A document that does not explicitly mention that the video signal is a monochrome video signal is to be interpreted as relating to a colour video signal. As a consequence, some groups in H04N 5/76 specific to monochrome signal recording have fallen out of use. Instead, the corresponding colour symbols should be given to such documents:

Allocation of CPC symbols:

- A document containing invention information relating to video data recording will be given an H04N 5/76 CPC group.

- A document containing additional information relating to video data recording (in particular, if the document discloses a detailed video recording device) will be given an H04N 5/76 Indexing Code symbol.

- A document containing invention information for more than one invention may be given more than one H04N 5/76 CPC group.

- A document merely mentioning recording will not be given a CPC group, but it may receive an Indexing Code if the disclosure is considered relevant.

Allocation of Indexing Code symbols in combination with CPC:

- When assigning H04N 5/76 as CPC group, giving an additional Indexing Code is mandatory.

Combined use of Indexing Code symbols:

- Indexing Code symbols may be allocated as necessary to describe additional information in the document.

Symbol allocation rules:

- Documents defining recording devices that have an interface, e.g., connected to a network, should have at least one of the more specific H04N 5/765 Indexing Code symbols.

- Documents dealing with invention information about measures to prevent recording of projected images should be given the H04N 2005/91392 Indexing Code symbol.

In this place, the following terms or expressions are used with the meaning indicated:

Video or video data | Video signal, analogue or digital, with or without accompanying audio |

Attention is drawn to the following places, which may be of interest for search:

Arrangements for the associated working of recording or reproducing apparatus with related apparatus |

This place covers:

Video cameras as recording devices.

Attention is drawn to the following places, which may be of interest for search:

Television cameras |

Attention is drawn to the following places, which may be of interest for search:

TV-receiver details | |

Recording/reproduction devices integrated in TV-receivers | |

Synchronisation between a display unit and video-disc players |

This place covers:

Magnetic disks.

Attention is drawn to the following places, which may be of interest for search:

Recording on, or reproducing or erasing from, magnetic drums | |

Recording on, or reproducing or erasing from, magnetic disks | |

Magnetic drum carriers | |

Magnetic disk carriers |

This place covers:

Video recording programming applications, even though the title of this group refers to recording "on tape".

Video recorder programming (reservation recording).

Attention is drawn to the following places, which may be of interest for search:

Recording on, or reproducing or erasing from, magnetic tapes | |

Magnetic tape carriers | |

Arrangements for device control affected by the broadcast information |

Attention is drawn to the following places, which may be of interest for search:

Fixed mountings of heads relative to magnetic record carriers |

Attention is drawn to the following places, which may be of interest for search:

Disposition or mounting of heads on rotating support |

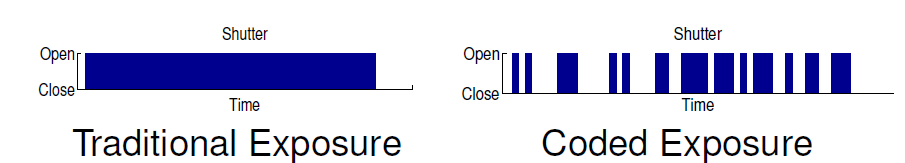

This place covers:

- Trick play modes as well as processing for recording to enable the reproduction of video data at a rate different from the recording rate.

- High speed recording cameras.

- Speed control during recording, reproducing, or reproducing at variable speed.

This place does not cover:

Television signal processing for recording |

Attention is drawn to the following places, which may be of interest for search:

Recording or reproducing using electrostatic charge injection; record carriers therefor |

This place does not cover:

Attention is drawn to the following places, which may be of interest for search:

Recording or reproducing by optical means; record carriers therefor |

This place covers:

Optical discs.

Attention is drawn to the following places, which may be of interest for search:

Recording or reproducing by optical means with cylinders | |

Recording or reproducing by optical means with disks |

This place does not cover:

Television signal processing for recording |

Attention is drawn to the following places, which may be of interest for search:

Recording, reproducing or erasing by using optical interference patterns, e.g. holograms |

This place does not cover:

Television signal processing for recording |

Attention is drawn to the following places, which may be of interest for search:

Recording or reproducing using record carriers having variable electrical capacitance; Record carriers therefor |

This place does not cover:

Television signal processing for recording |

Attention is drawn to the following places, which may be of interest for search:

Television signal recording based on relative movement between record carrier and transducer | |

Static stores per se |

Examples of places where the subject matter of this place is covered when specially adapted, used for a particular purpose, or incorporated in a larger system:

Processing of colour television signals in connection with recording |

This place does not cover:

Regeneration of analogue synchronisation signals |

Attention is drawn to the following places, which may be of interest for search:

Circuitry for suppressing or minimizing disturbance in television systems in general |

This place covers:

- Scrambling and encryption of video data for recording.

- Copy-protection systems.

At least one H04N 5/913 Indexing Code symbol should be allocated to such documents to further specify the scrambling method.

This place covers:

Compression of analogue video signals.

Attention is drawn to the following places, which may be of interest for search: